Effective Altruism Funded the “AI Existential Risk” Ecosystem with Half a Billion Dollars

The “AI Existential Safety” field did not arise organically. Effective Altruism invested $500 million in its growth and expansion.

“I was a fan of Effective Altruism. But it became cultish. Happy to donate to save the most lives in Africa, but not to pay techies to fret about AI turning us into paperclips.”

Steven Pinker, Harvard.

OpenAI’s Turmoil

On November 17, 2023, Sam Altman was fired by OpenAI’s Board of Directors: Ilya Sutskever, Adam D’Angelo, and two members with clear Effective Altruism ties, Tasha McCauley and Helen Toner.1 Their vague letter left everyone with more questions than answers. It sparked speculation, a succession of interim CEOs (e.g., Emmett Shear), and a revolt by more than 700 OpenAI employees. The board’s reaction was total silence.

A week later, on November 24, Steven Pinker linked to a Wall Street Journal article on how the OpenAI drama “showed the influence of effective altruism.”

The events that led to the coup saga are still unexplained. Nonetheless, the saga became a wake-up call about the power of the Effective Altruism movement, which is “supercharged by hundreds of millions of dollars” and focuses on how advanced artificial intelligence (AI) “could destroy mankind.” It seemed increasingly clear that “Effective Altruism degenerated into extinction alarmism.”

To make this reckoning more data-driven:

1. This article breaks down how the “AI Existential Risk” movement grew to be so influential.

TL;DR - The magnitude of the funding created this inflated ecosystem.

2. The next article, Your Ultimate Guide, describes the many players involved.

Effective Altruism, AI Safety, and AI Existential Risk

In a nutshell, according to the Effective Altruism movement, the most pressing problem in the world is preventing an apocalypse where an Artificial General Intelligence (AGI) exterminates humanity.2

With billionaires' backing, this movement funded numerous institutes, research groups, think tanks, grants, and scholarships under the brand of AI Safety. Effective Altruists tend to brag about their “field-building”:

1. They “Founded the field of AI Safety, and incubated it from nothing” up to this point.

2. They “created the field of AI alignment research” (“aligning future AI systems with our interests”/“human values”).3

The overlap between Effective Altruism, Existential Risk, and AI Safety formed an influential subculture. While its borders are blurry, these overlapping communities have been described as the AI Safety Epistemic Community (due to their shared values). Despite being a fringe group, its members have successfully moved the “human extinction from AI” scenario from science fiction into the mainstream.4 The question is: How?

Effective Altruism Funding “AI Existential Risk” = Half a Billion Dollars

The Main Funders

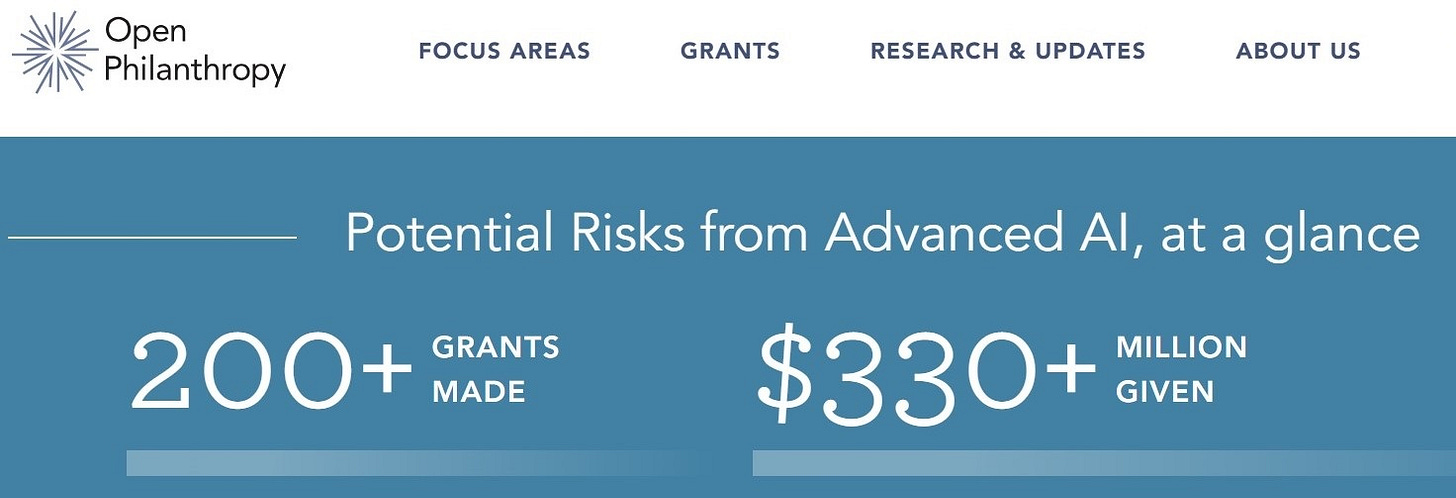

The largest donor in the Effective Altruism realm is Open Philanthropy. Dustin Moskovitz, Holden Karnofsky, and Cari Tuna founded this nonprofit to support effective altruism causes.

Its staff members who oversee the Potential Risks from Advanced Artificial Intelligence program are Luke Muehlhauser (formerly executive director of Eliezer Yudkowsky’s5 MIRI – the Machine Intelligence Research Institute in Berkeley, CA) and Ajeya Cotra (who founded the Effective Altruists of Berkeley student group).6 According to Open Philanthropy’s website, it has granted more than $330 million to the AI x-risk cause:

Next, we have the following well-known funders:

SFF – Survival and Flourishing Fund, which is mainly funded by Jaan Tallinn.

Sam Bankman-Fried’s FTX Future Fund, which was shut down after FTX collapsed and went bankrupt. (Bankman-Fried donated approximately $32 million to AI Safety projects and invested more than $500 million in Anthropic.)

LTFF – Long-Term Future Fund, which receives about half of its funding from Open Philanthropy (40% in 2022).

Other sources of funding include GWWC – Give What We Can, academic grants (e.g., NSF grants of $10.9 million in 2023 and an anticipated $10 million in 2024, through a partnership with Open Philanthropy and Good Ventures, both co-founded by Dustin Moskovitz and Cari Tuna), and individual donors (e.g., Elon Musk investing tens of millions of dollars in the Future of Life Institute, so “AI doesn’t go the way of Skynet”).

Follow the Money

Data on EA grant amounts can be found in several sources.

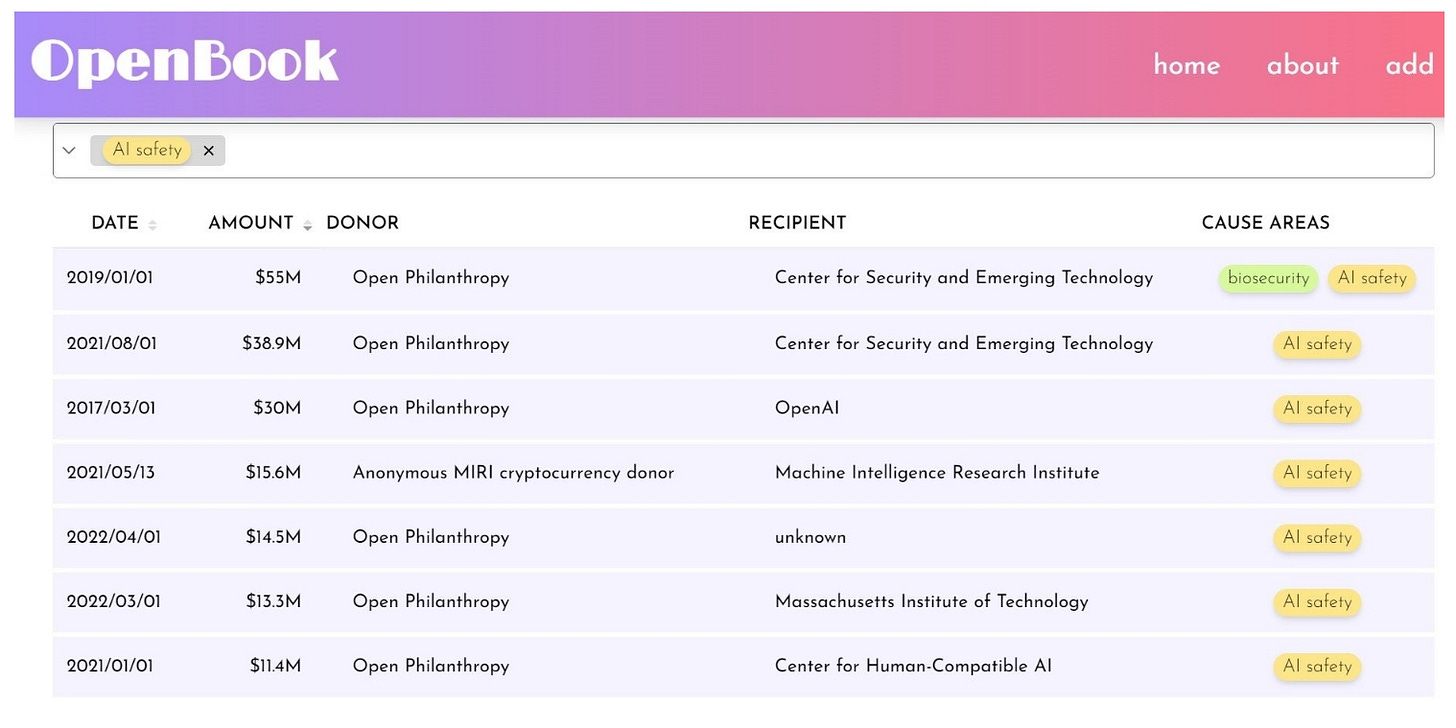

1. OpenBook

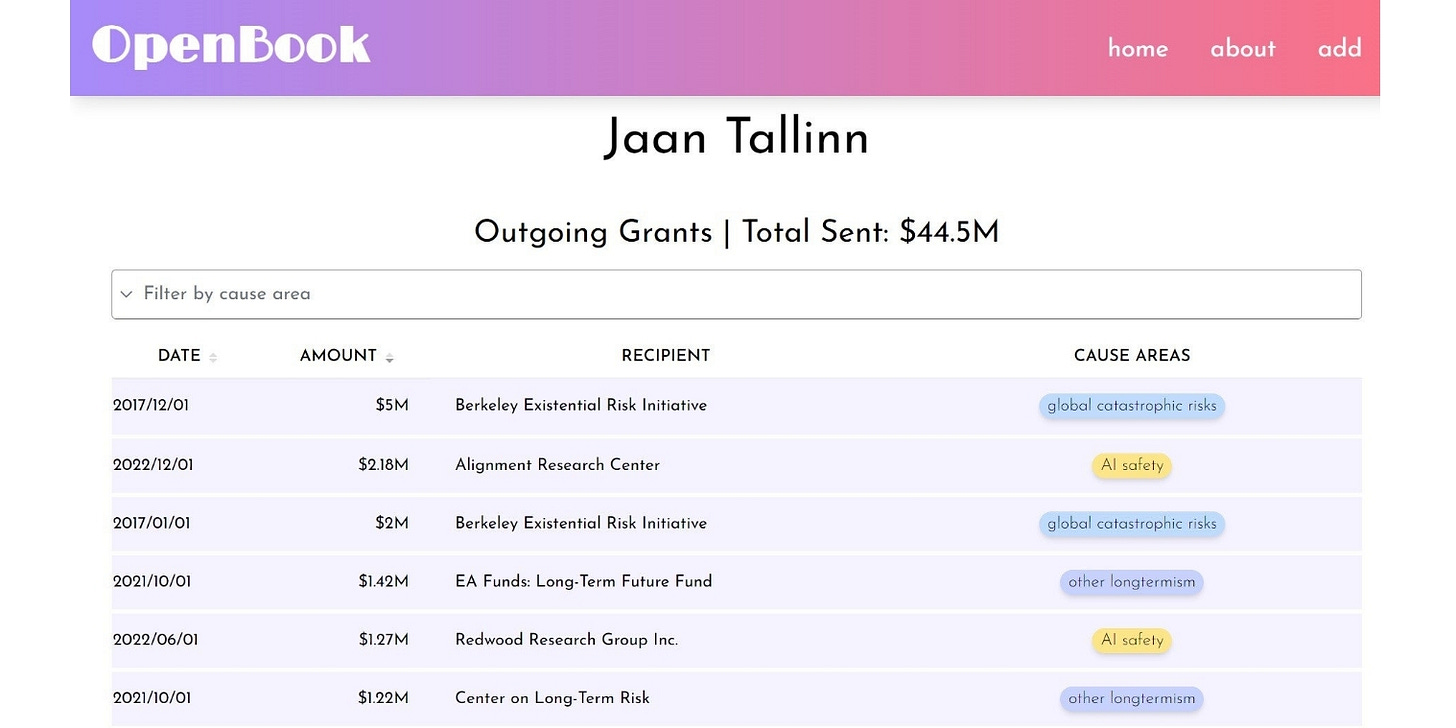

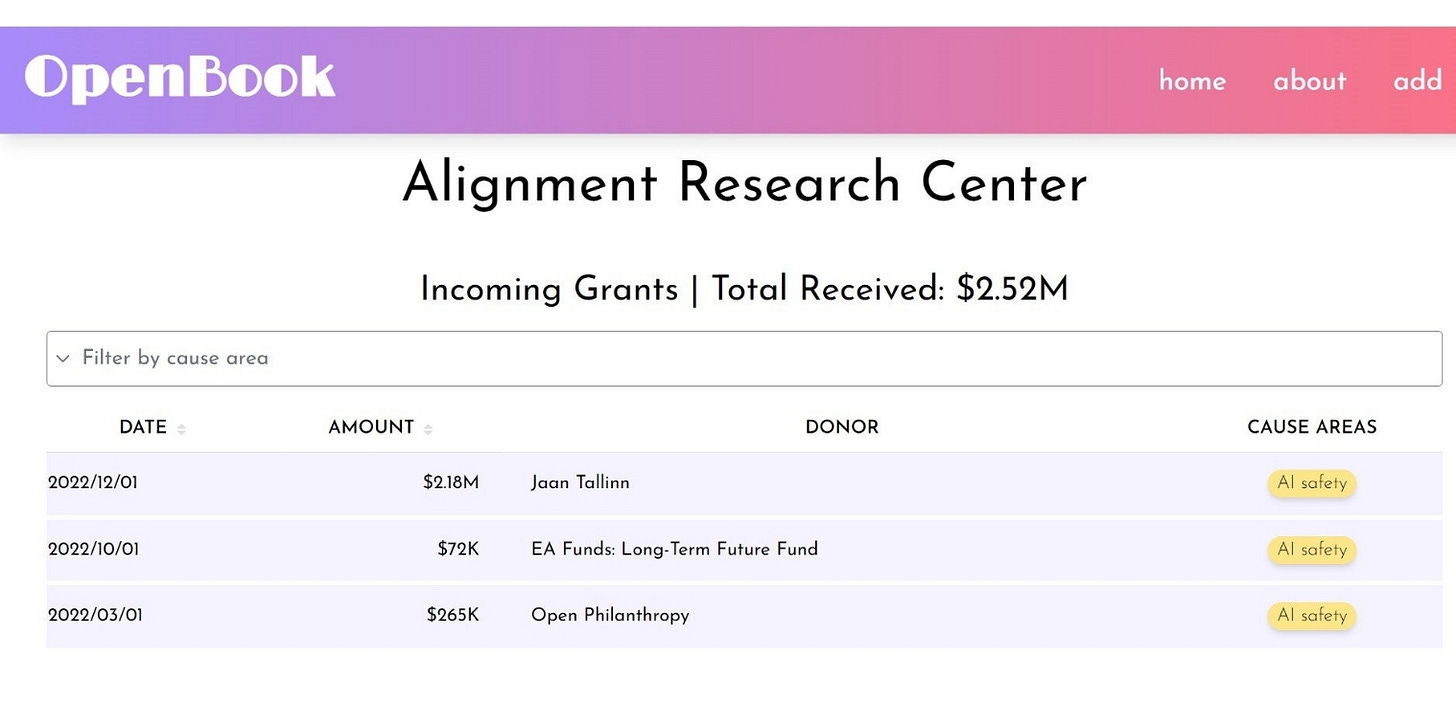

This database of Effective Altruism grants is compiled from the websites of Open Philanthropy, EA Funds, SFF (Survival and Flourishing Fund), and Vipul Naik’s Donation List.

It lets you review funding by cause (AI Safety), by funder (e.g., Open Philanthropy, Jaan Tallinn, or FTX Future Fund before it collapsed), and by recipient (e.g., ARC – Alignment Research Center):

The data runs through the end of 2022 (with almost nothing from 2023).

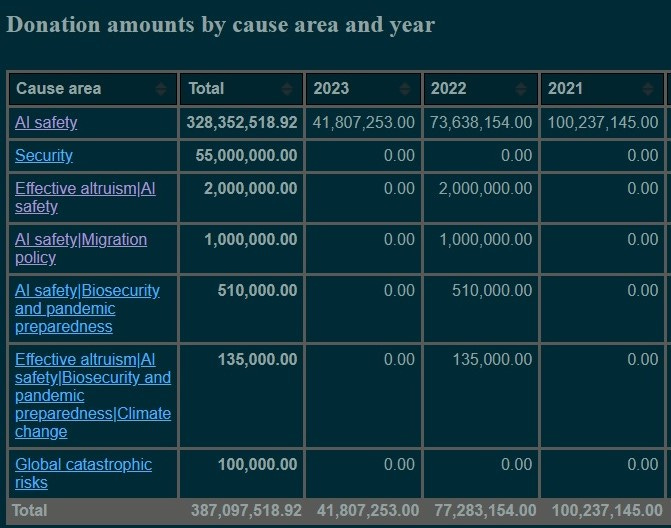

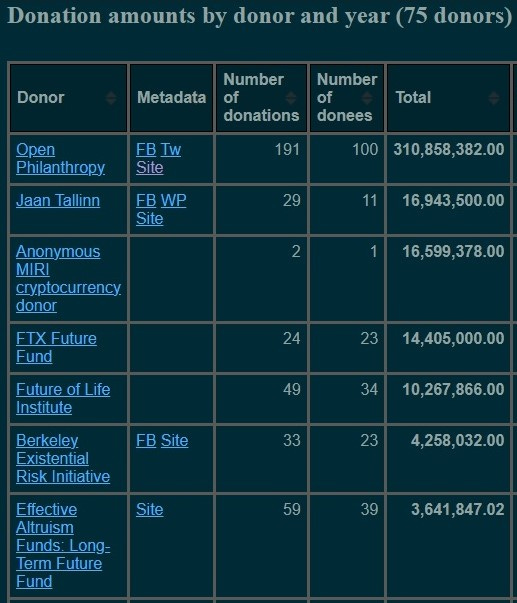

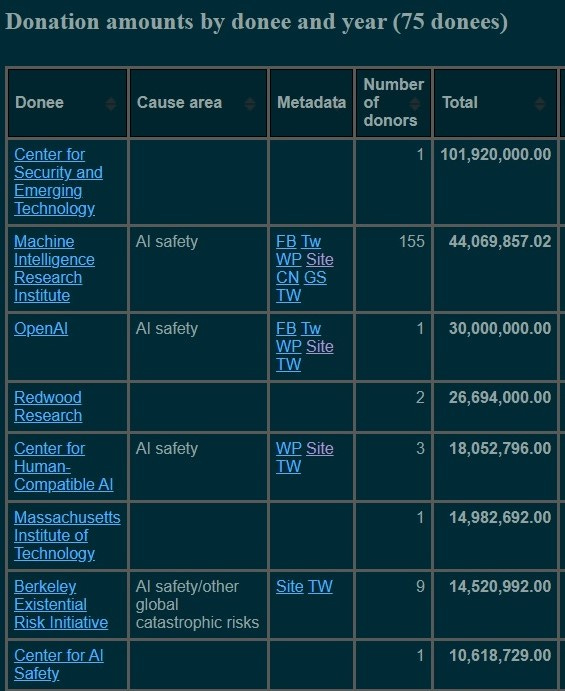

2. Vipul Naik’s Donation List website

This portal is also compiled from publicly announced donations to AI Safety, including entries from 2023. Its total comes to $387,097,518.

We can see Open Philanthropy’s absolute dominance:

The leading recipient is CSET, the Center for Security and Emerging Technology at Georgetown University,7 which received more than $100 million from Open Philanthropy.

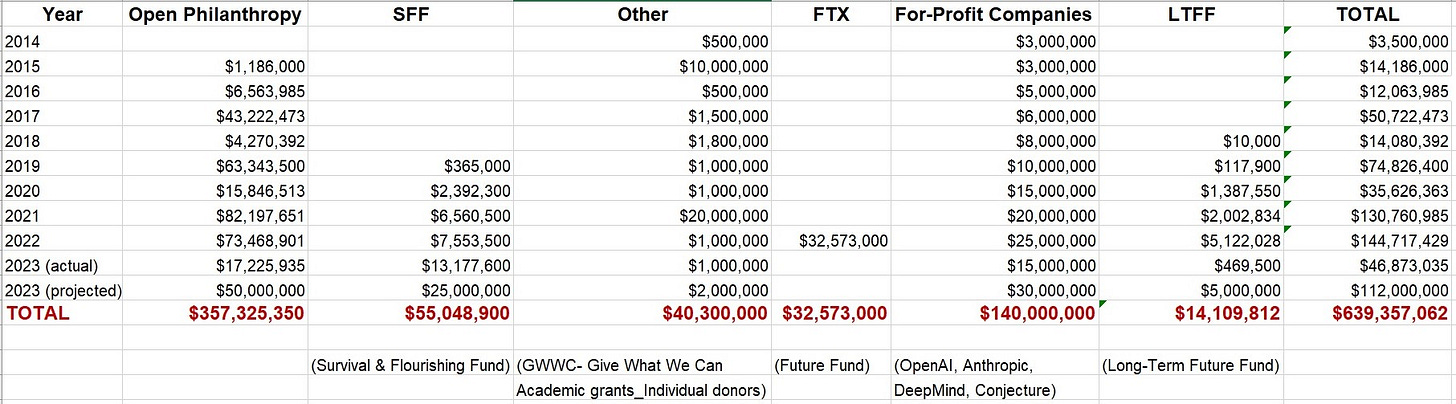

3. AI Safety Funding Spreadsheet

This Google Doc was posted on the Effective Altruism Forum in the post “An Overview of the AI Safety Funding Situation.”

Its creator made it clear that the funding data includes “AI Safety research that is focused on reducing risks from advanced AI (AGI) such as existential risks” and does not include investments in “near-term AI Safety concerns such as effects on labor market, fairness, privacy, ethics, disinformation, etc.”

Open Philanthropy, SFF, LTFF, and other funding sources were added based on publicly available data. The total comes to $499,357,062.

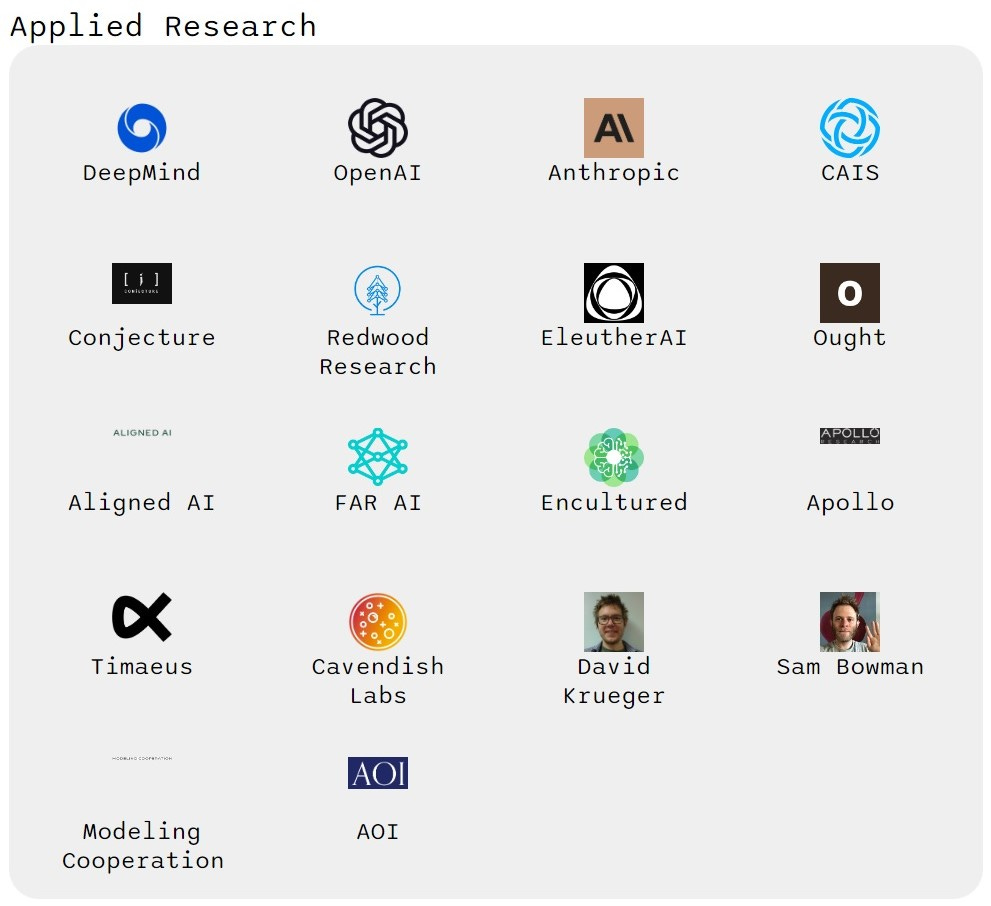

The spreadsheet also has a column for for-profit companies investing in AI Safety, such as OpenAI, Anthropic, DeepMind, and Conjecture. That estimate combines the estimated size of the AI Safety teams at those four companies with the estimated total cost per employee.

When the estimated $140 million investment is added to the previous calculations, the total amount reaches $639,357,062.
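A quick check of that sum, using only the two figures quoted above (the $140 million is the spreadsheet's rough estimate for the four labs, not an audited number):

```python
# Totals reported in the AI Safety Funding Spreadsheet.
ea_funders_total = 499_357_062      # Open Philanthropy, SFF, LTFF, and other EA sources
for_profit_estimate = 140_000_000   # rough estimate for OpenAI, Anthropic, DeepMind, Conjecture

# Adding the for-profit estimate to the EA-funder total.
combined_total = ea_funders_total + for_profit_estimate
print(f"${combined_total:,}")  # prints $639,357,062
```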

However, since the for-profit companies’ estimated investments are unclear (and doomers tend to make up numbers), the figures below exclude those AI labs.

The two figures focus on publicly available funding data from the Effective Altruism organizations’ websites.

The following section details what this money was spent on.

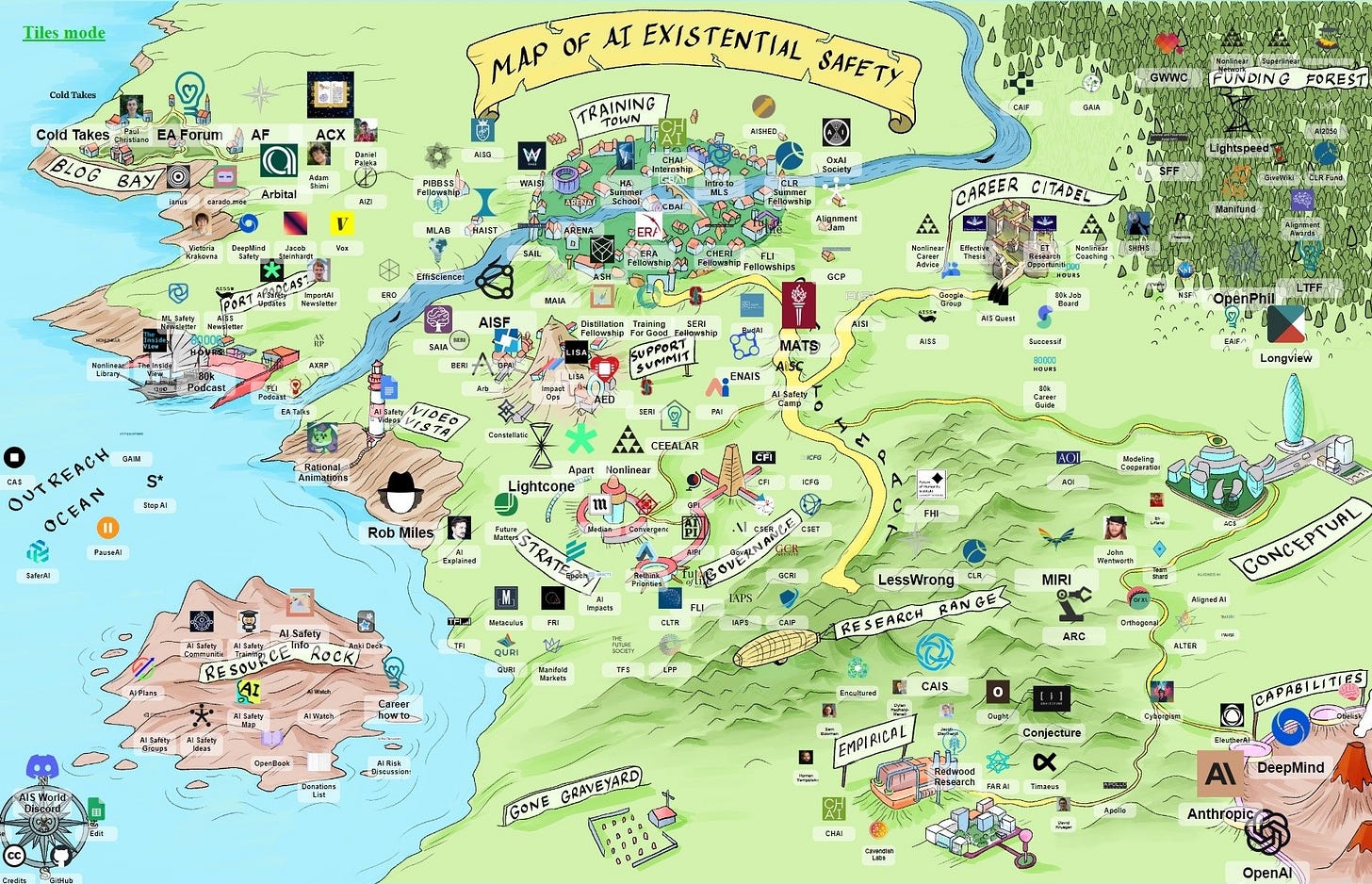

Ecosystem: Map of AI Existential Safety

The Map of AI Existential Safety was created to help effective altruists, students, researchers, and entrepreneurs interested in AI x-risk become more familiar with funding and training sources.

The map covers the AI x-risk ecosystem’s Funding; Strategy and Governance Research; Conceptual Research; Applied Research; Training and Education; Research Support; Career Support; Resources; Media; and Blogs.

The criteria for inclusion in the map: “An entry must have reducing AI existential risk as a goal; have produced at least one output to that end; must additionally still be active and expected to produce more outputs.”

The map also has a tiles version. Screenshots from it are shown below.

Deteriorating Reputation

Effective Altruism has faced two significant backlashes, both involving a guy named Sam.

Sam Bankman-Fried’s Fall

Public perception of the Effective Altruism movement has declined in the wake of the accusations against its famous evangelist, Sam Bankman-Fried. Sam was an enthusiastic donor to EA organizations and AI Safety causes before being convicted on seven fraud charges (for stealing $10 billion from customers and investors).

According to the Centre for Effective Altruism (CEA, backed by Effective Ventures): “The alleged illegal and/or immoral behavior of FTX and Alameda Research executives associated with EA has caused CEA and others in the EA community to reflect on the principles we promote.” However, “We’re uncertain to what extent we mis-estimated this cluster of risks, and whether we could have or should have acted differently to mitigate them,” CEA added. “The team is reflecting on this.”

Is “the team” reflecting on the OpenAI events as well?

They should.

Sam Altman’s Firing

Jaan Tallinn offered one reflection.

“The OpenAI governance crisis highlights the fragility of voluntary EA-motivated governance schemes. So, the world should not rely on such governance working as intended,” he told Semafor. “I remain confused, but I note that the market now predicts that this was bad for AI risk indeed,” Tallinn added, citing one of the market’s polls.

That is quite telling, coming from a leading AI existential risk philanthropist.

OpenAI told the WSJ: “We are a values-driven company committed to building safe, beneficial AGI, and effective altruism is not one of them.” Its spokesperson told VentureBeat that “None of our board members are effective altruists” and also that “non-employee board members are not effective altruists.”

The detailed Timeline of the OpenAI Board proves this statement is BS.

Dewey Murdick, executive director at CSET, praised the effective altruism movement on December 1, 2022, after Sam Bankman-Fried’s fall. He argued that effective altruists have contributed to important research involving AI: “Because they have increased funding, it has increased attention on these issues,” he said, pointing to the growing discussion of AI Safety.

On November 28, 2023, in an interview about whether OpenAI’s saga is “a wakeup call for AI Safety,” Dewey Murdick stated that “CSET is not an EA organization.” Regarding Helen Toner, he added, “I won’t even ask who subscribes to which philosophy within our organization.”

Has being associated with EA become that bad (even for an organization that received more than $100 million from it)?

Current Vibe Shift

“If this coup was indeed motivated by safetyism, it seems to have backfired spectacularly, not just for OpenAI but more generally for the relevance of EA thinking to the future of the tech industry.”

Arvind Narayanan, Princeton.

This vibe shift caused a lot of worry in the Effective Altruism community:

“We need the EAs on the board to explain themselves,” wrote a participant in the Effective Altruism forum (referring to Helen Toner and Tasha McCauley). “It is a disaster for EA.”

“EA is not coming out well,” wrote another effective altruist. “EA might find itself in a hostile atmosphere in what used to be one of the most EA-friendly places in the world.” The observation was that “Seemingly, all of Silicon Valley has turned against EA.”

There were even expressions of fear of a “McCarthy-esque immune reaction to EA people” and concerns about being “affiliated with EA organizations.”

Moving forward, it’s likely we will see even more backlash.

Conclusions

“A lot of effective altruism organizations have made AI x-risk their top cause in the last few years,” explains Sayash Kapoor from Princeton. “That means a lot of the people who are getting funding to do AI research are naturally inclined, but also have been specifically selected, for their interest in reducing AI x-risk.”

Thus, the interest in AI x-risk did not arise organically. “It has been very strategically funded by organizations that make x-risk a top area of focus.”

The next article contains an extensive guide with links and descriptions of all items on the “AI Existential Safety” map.

This makes it easy to see how inflated this ecosystem is. It’s pretty WILD.

We should ask these questions:

What are they doing with all that money?

¯\_(ツ)_/¯

How many lives could have been saved if the hundreds of millions had been donated to other causes, like global health?

GiveWell’s malaria calculation ranges between $5,000 and $8,000 per life saved. Thus, the $500 million could have saved between 62,500 and 100,000 lives. (This is Effective Altruism’s own calculation.)
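The estimate is a straight division of the total by each end of GiveWell’s cost-per-life range. A minimal sketch, assuming the rounded $500 million total and ignoring any other differences between causes:

```python
# Rounded total of EA funding for AI x-risk, as estimated in this article.
budget = 500_000_000

# GiveWell's malaria cost-per-life range, as quoted above.
cost_per_life_high = 8_000
cost_per_life_low = 5_000

# Fewer lives at the higher cost, more lives at the lower cost.
lives_at_high_cost = budget // cost_per_life_high
lives_at_low_cost = budget // cost_per_life_low
print(f"{lives_at_high_cost:,} to {lives_at_low_cost:,} lives")  # prints 62,500 to 100,000 lives
```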

Is there going to be more reckoning from within?

Steven Pinker hopes effective altruists will “extricate themselves from this rut” (referring to the investment in “AI turning us into paperclips”).

Considering how much money they’ve invested in it, I don’t see how they could.

Is there going to be more reckoning from outside?

This may be the best moment to reconsider letting EA-backed AI x-risk set the agenda for the AI industry and its upcoming regulation.

Tasha McCauley’s background includes: RAND, Center for the Governance of AI, and Centre for Effective Altruism (the U.K. board of the Effective Ventures Foundation).

Helen Toner’s background includes: GiveWell, Center for the Governance of AI, and Open Philanthropy as a senior research analyst (responsible for Open Philanthropy’s scale-up from making $10 million in grants per year to over $200 million). In September 2021, she replaced Open Philanthropy’s Holden Karnofsky on OpenAI’s board of directors. She is the director of foundational research grants at Georgetown’s Center for Security and Emerging Technology (CSET).

Effective Altruism works on and donates to other causes like Farm Animal Welfare, Biosecurity & Pandemic Preparedness, and Global Health. This series is focused on EA’s growing obsession with the “#1 problem” of AI posing a threat to humanity.

You can work in AI Safety without being an effective altruist or an AI doomer.

You should care about the responsible and safe development of AI without being an effective altruist.

The point of this series is to show how the founders of this field have strong EA affiliation. They invested so much in the alignment problem “to make machines less likely to kill us all.” Leading voices in AI Safety adhere to that ideology. They discuss “takeover” scenarios and worry that advanced out-of-control AI systems will exhibit self-preservation or power-seeking behaviors.

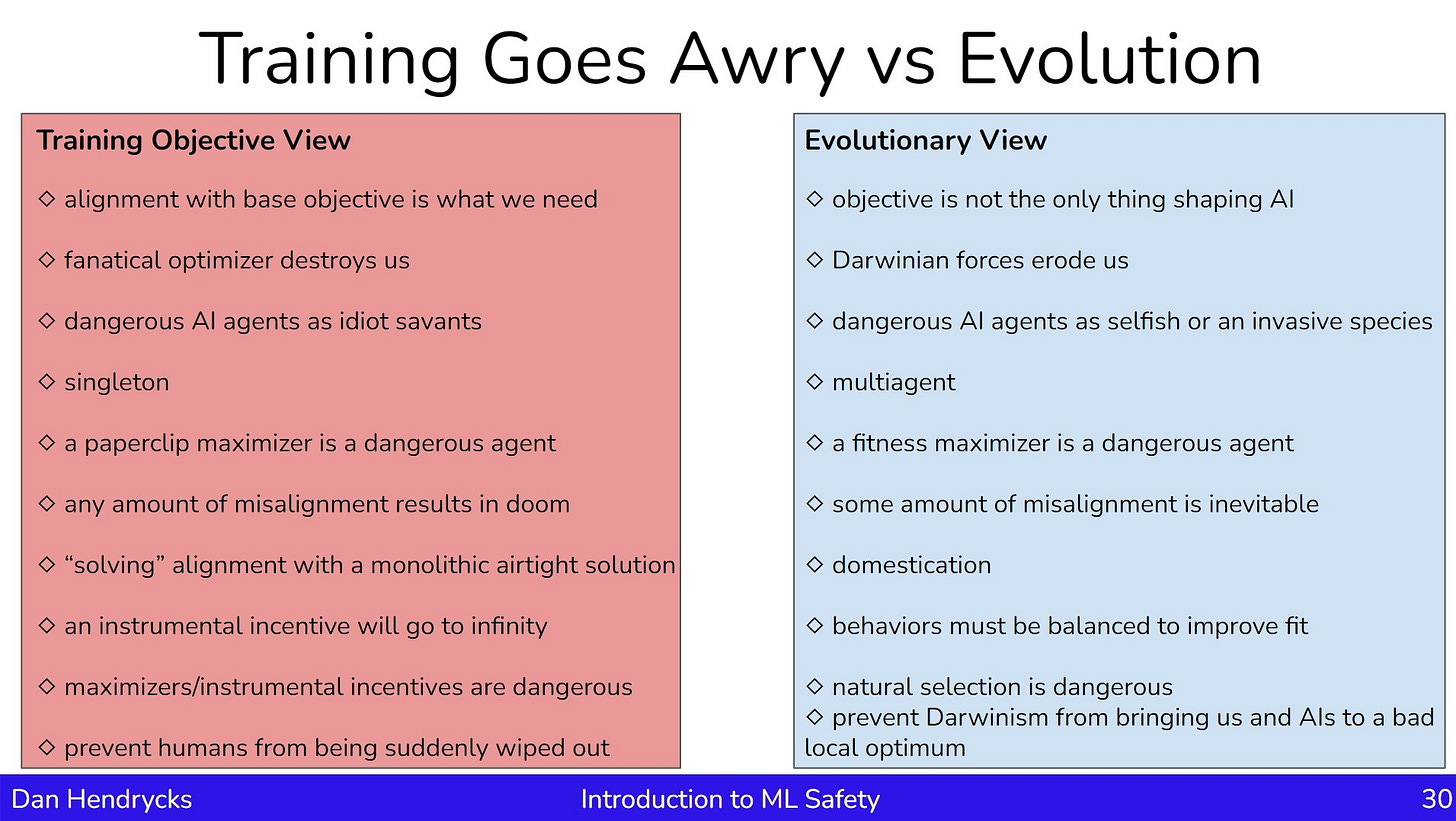

See, for example, the list of “views” that Dan Hendrycks, the director of CAIS (Center for AI Safety), teaches in his Introduction to ML Safety course (under Natural Selection Favors AIs over Humans):

The AI Panic Campaign - Part 1 revealed the market research conducted by AI Safety organizations to promote “human extinction from AI” and “AI moratorium” messages. They weren’t hiding their goal of persuading policymakers to surveil and criminalize AI development.

Eliezer Yudkowsky, one of the founders of “AI alignment,” admitted in September 2023: “I expected to be a tiny voice shouting into the void, and people listened instead. So, I doubled down on that.”

“Berkeley, Calif., is an epicenter of effective altruism in the Bay Area.”

If CSET sounds familiar to you, it’s because Helen Toner from the attempted coup at OpenAI is CSET’s director of foundational research grants.

On November 17, Toner participated in the firing of Sam Altman because he “was not consistently candid in his communications with the board.”

After a long period of silence, on November 29, she tweeted a word salad that didn’t provide any explanation. Thus, she “was not consistently candid in her communications with the world.”

(At some point, we will get real explanations. Hopefully).

The first question to ask is: Are existential risks from AI real? Whether AI Safety merits funding rests on this point.
