The $665M Shitcoin Donation to the Future of Life Institute

What we currently know: Getting the money | The reporting | The money trail.

#Shibagate - Executive Summary

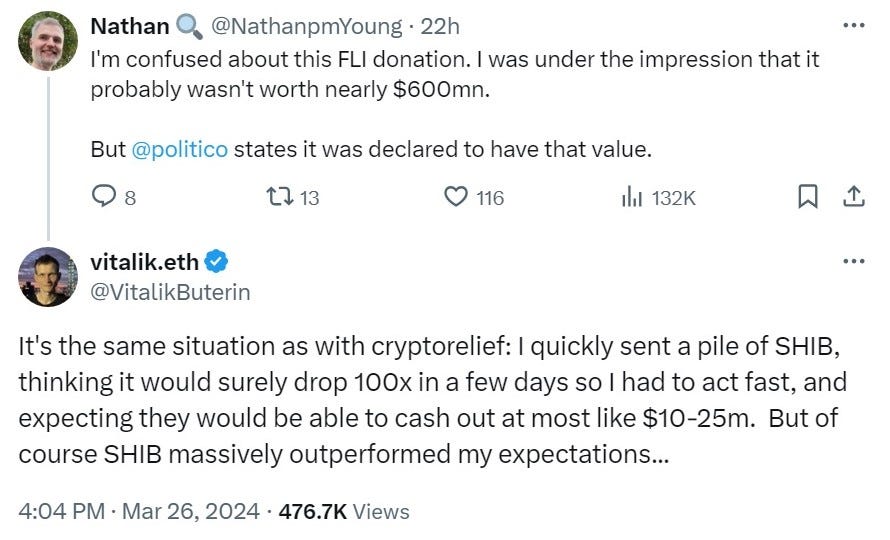

In May 2021, FLI received a large cryptocurrency donation from Ethereum co-founder Vitalik Buterin.

A year ago, in March 2023, during the “Pause AI” Open Letter news cycle, the dollar amount FLI obtained from converting the Shiba Inu tokens was unknown.

Based on the latest publicly available data at the time, the media reported that FLI was “primarily funded by the Musk Foundation.”

It’s only now, in March 2024, thanks to Politico’s bombshell, that we finally learn the magnitude of Buterin’s donation. An FLI spokesperson told Politico, “The value of that gift ultimately came to $665.8 million.”

So, actually, it was “primarily funded by Vitalik Buterin” and a dog-themed shitcoin.

“Tax documents provided by FLI show it had just $2.4 million at the start of that year. Now its assets dwarf those amassed by not just most other AI groups, but many high-profile policy organizations.”

The well-oiled x-risk machine:

- FLI used this donation to fund existing “AI Existential Risk” organizations (such as MIRI) and to create new ones (such as FLF and CARMA).

- FLI’s lobbying goals include: Pervasive Surveillance and criminalization of AI development.

This post is based on publicly available data. Currently, FLI is quiet about the details, so we can only discuss this accumulated data. Once FLI releases some clarifications with additional numbers, I’ll update this post accordingly.

Update - May 17, 2024

To mark the 3-year anniversary of Buterin’s donation to the Future of Life Institute, I shared FLI's "990 form" for 2021. The document was published by ProPublica.

We now know the exact amount FLI reported to the IRS:

$665,882,523.

There is more information in FLI’s IRS report for 2021, like the $123,904,047 investment in other cryptocurrencies.

At some point, FLI sold those coins (and reported the gains in 2022?)

FLI’s total gross receipts at the end of 2021 were $674,314,532.

Still no word on what they're planning to do with it.

Update - April 2025

The Future of Life Institute’s IRS filing for 2022 was published by ProPublica.

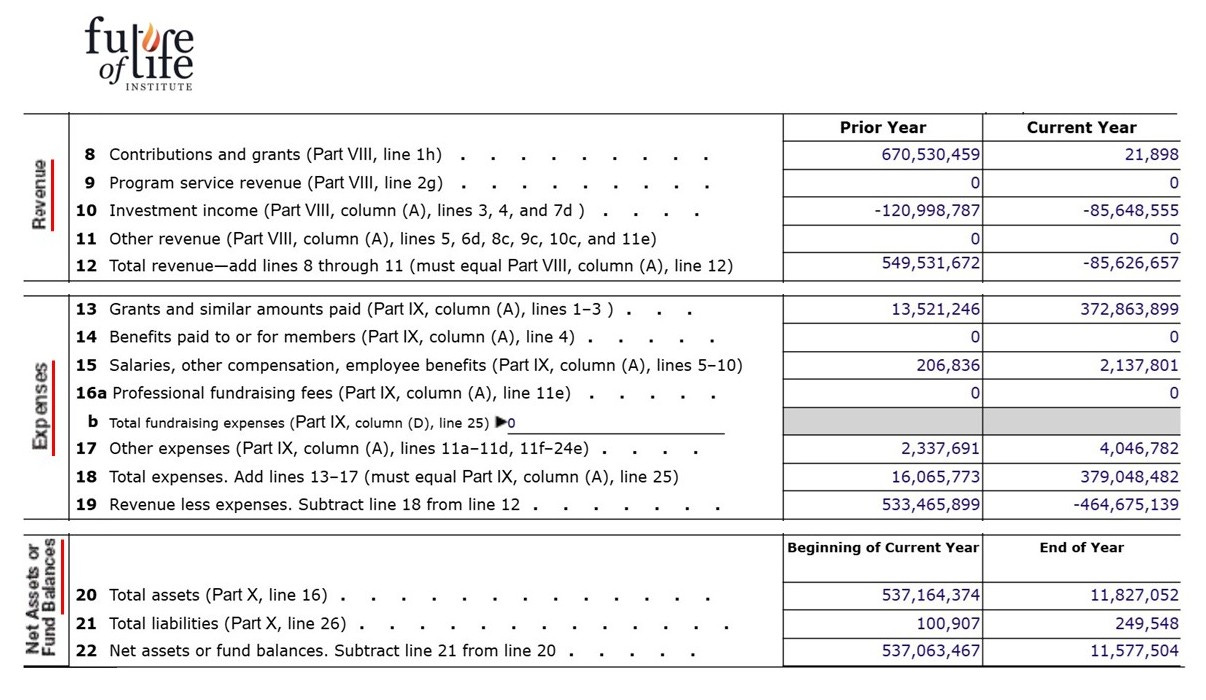

FLI in 2021:

Vitalik Buterin’s donation: $670,530,459

Investment loss: -$120,998,787

Total revenue: $549,531,672

Total expenses (mainly grants): -$16,065,773

Net assets, end of year: $537,063,467

FLI in 2022:

Investment loss: -$146,468,809

(realized = -$85,648,555 and unrealized = -$60,820,254)

Total expenses (mainly grants): -$379,048,482

Net assets, end of year: $11,577,504
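The figures above can be cross-checked with simple arithmetic. The sketch below is only a sanity check on the numbers quoted in this post, not a reconstruction of FLI's accounting; the variable names are my own, and it assumes that 2021 total revenue is the donation plus the investment loss, and that the 2022 investment loss is the sum of its realized and unrealized parts.

```python
# Figures copied from the Form 990 summaries quoted above (US dollars).
donation_2021 = 670_530_459
investment_loss_2021 = -120_998_787
total_revenue_2021 = 549_531_672

realized_loss_2022 = -85_648_555
unrealized_loss_2022 = -60_820_254
investment_loss_2022 = -146_468_809

# 2021: donation plus investment loss should match reported total revenue.
revenue_check_2021 = donation_2021 + investment_loss_2021

# 2022: realized plus unrealized should match the reported investment loss.
loss_check_2022 = realized_loss_2022 + unrealized_loss_2022

print(revenue_check_2021 == total_revenue_2021)
print(loss_check_2022 == investment_loss_2022)
```

Both comparisons hold for the numbers as quoted, so the two line items are internally consistent.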

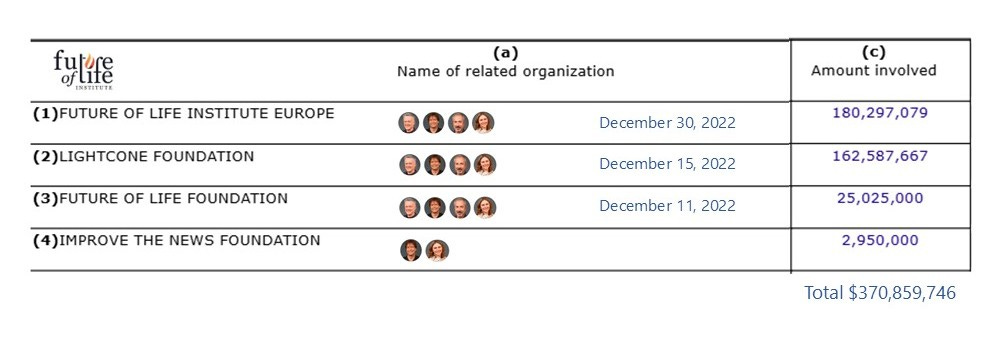

According to FLI’s new Form 990:

Between December 11 and December 30, 2022, FLI transferred $368 million into 3 entities, all governed by the same four people: Max Tegmark, Meia Chita-Tegmark, Anthony Aguirre, and Jaan Tallinn.

The Future of Life Institute’s explanations:

The transfer of $180.3 million from FLI US to FLI Europe was described as “establishing and funding Europe-based affiliate.”

The transfer of $162.6 million from FLI to Lightcone Foundation was described as a “grant to establish the Lightcone Foundation, a related organization to FLI.”

The transfer of $25 million from FLI to FLF was described as a “grant to establish and fund the Future of Life Foundation, a related organization to FLI.”
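As a rough check, the three transfers listed above add up to the $368 million headline figure. The sketch below uses the rounded amounts from this post (in millions of dollars) and compares them with the 2022 total expenses quoted earlier; the dictionary keys and variable names are illustrative only.

```python
from decimal import Decimal

# Rounded transfer amounts as quoted above, in millions of US dollars.
transfers_musd = {
    "FLI Europe": Decimal("180.3"),
    "Lightcone Foundation": Decimal("162.6"),
    "Future of Life Foundation": Decimal("25"),
}

total_musd = sum(transfers_musd.values())

# Total 2022 expenses as quoted from the Form 990 (US dollars).
total_expenses_2022 = 379_048_482

# Approximate share of 2022 expenses made up by the three transfers.
share_of_expenses = float(total_musd) * 1_000_000 / total_expenses_2022

print(f"Transfers: ${total_musd}M")
print(f"Share of 2022 expenses: {share_of_expenses:.1%}")
```

Because the inputs are rounded to the nearest $0.1 million, the share is an approximation.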

Update – January 2026

The Future of Life Institute’s IRS filings for 2023 and 2024 were published by ProPublica.

FLI in 2023:

Total revenue: $4,717,340

Total expenses: $16,317,712

Net assets, end of year: $1,207,674

FLI in 2024:

Total revenue: $21,273,381

Total expenses: $17,399,051

Net assets, end of year: $5,088,435

Background

“AI Existential Risk”

In the introduction to Vitalik Buterin’s book “Proof of Stake: The Making of Ethereum and the Philosophy of Blockchains” (2022), Nathan Schneider described:

“When Buterin first introduced Ethereum on stage, at an early-2014 Bitcoin conference in Miami, after a litany of all the wonders that could be built with it, he ended with a mic-drop reference to Skynet—the artificial intelligence in the Terminator movies that turns on its human creators. It was a joke that he would repeat, and like many well-worn jokes, it bore a warning.”

At the end of 2023, Buterin published a lengthy manifesto: My techno-optimism. It contains many nuanced and conflicting ideas. To understand #Shibagate, you need to consider this specific part of his long-term thinking:

Buterin stated that “Existential risk is a big deal” and that “it stands a serious chance” of Artificial Intelligence (AI) “becoming the new apex species on the planet.”

“One way in which AI gone wrong could make the world worse is (almost) the worst possible way: it could literally cause human extinction,” Buterin wrote. “A superintelligent AI, if it decides to turn against us, may well leave no survivors, and end humanity for good.”

According to a TechCrunch article from January 2015, when Elon Musk donated $10M to the Future of Life Institute, it was “to Make Sure AI Doesn’t Go The Way of Skynet.”

It made sense to Musk to fund FLI, as it was (and still is) devoted to existential risks. Its description in the Effective Altruism Forum is literally: “FLI is a non-profit that works to reduce existential risk from powerful technologies, particularly artificial intelligence.”

FLI’s co-founder, Max Tegmark, frequently shares AI doom scenarios. “There’s a pretty large chance we’re not gonna make it as humans. There won’t be any humans on the planet in a not-too-distant future,” Tegmark said in an interview. He also referred to AI as “the kind of cancer which kills all of humanity.”

Jaan Tallinn is a tech billionaire from Estonia. Whenever Tallinn is asked what led him to co-found FLI (2014) and the Centre for the Study of Existential Risk (2012), his response is always the same: Eliezer Yudkowsky. Upon reading Yudkowsky’s writing, he became intrigued by the Singularity and existential risks. He then decided to invest a portion of his fortune in this cause.

Three things to know about Jaan Tallinn’s wealth:

1. Jaan Tallinn makes grants directly through his Survival and Flourishing Fund (SFF) and indirectly through his donations to the Berkeley Existential Risk Initiative (BERI), the Centre for Effective Altruism (CEA), and its EA Funds - the EA Infrastructure Fund (EAIF) and the Long Term Future Fund (LTFF).

2. Jaan Tallinn was one of the earliest investors in DeepMind (before it was acquired by Google). He led Anthropic’s Series A round ($124M, with Dustin Moskovitz) and participated in the Series B round ($580M, with Sam Bankman-Fried). Numerous other AI startups are in his portfolio.

3. In November 2020, Jaan Tallinn told Fortune Magazine that “he keeps most of his personal wealth” in the form of cryptocurrencies, mainly Bitcoin and Ethereum (ETH), since “converting it into cash would have resulted in an unnecessary capital gains tax bill, reducing the amount he could give.”

Act 1: Getting the Money

May 2021

Vitalik Buterin donates SHIB tokens to a charity with a “long-term orientation”

Shiba Inu (SHIB) is an Ethereum-based altcoin (a cryptocurrency other than Bitcoin) that features the Shiba Inu dog as its mascot. It’s often referred to as a shitcoin. Shitcoins, also known as memecoins, usually offer growth potential regardless of their utility. They are often created with no intention to have utility besides speculation on rising token price.

Shiba Inu’s price at the beginning of 2021 was very low ($0.00000001) until Elon Musk tweeted about Shiba Inu (in March 2021), and its price rose 300% in the hours following Musk’s tweet. Since then, “Shiba Inu has grown as one of the biggest shitcoins in the cryptocurrency space.”

On May 12, 2021, Ethereum co-creator Vitalik Buterin donated 50 trillion SHIB tokens (worth around $1.2 billion as of May 12) to an India Covid-19 Relief Fund. However, since it spurred a crash in the price, the tokens eventually amounted to $400 million (an estimate from July 2021). Thus, the crypto relief had “lost” more than half the estimated theoretical value before any had even been spent.

On the same day, Buterin also donated millions of dollars via ETH (Ether) to other causes, like GiveWell (transaction on Etherscan), Methuselah Foundation (transaction), and MIRI- Machine Intelligence Research Institute (transaction).

On May 16, 2021, Vitalik Buterin claimed he burned 90% of his SHIB holding, totaling around $6.8 billion (at the time of the transaction). He sent a stash of over 410 trillion tokens to a dead blockchain address in a single transaction. He effectively removed almost half of all SHIB from circulation forever. He marked the remaining 10% in his wallet for charitable causes.

In one of the burning transaction hashes, Buterin explained his plan forward:

“The remaining 10% will be sent to a (not yet decided) charity with similar values to cryptorelief (preventing large-scale loss of life) but with a more long-term orientation.”

On May 17, 2021, Vitalik Buterin sent 46,000,000,000,000 (46 trillion) SHIB, worth around $755,807,582 as of May 17, to wallet 1. This moved the tokens from his 'cold wallet' to his 'public (hot) wallet,' which Buterin then used to transfer the tokens to FLI's wallet.

On May 19, 2021, FLI started to send the Shiba Inu tokens from its wallet to FTX, indicating the start of the liquidation process. A total of 44.4 trillion SHIB tokens was gradually transferred to FTX until June 4, 2021.1 [Those blockchain transactions are visible, but we have no way of knowing when and at what price the SHIB was converted to dollars].
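To put the dollar estimates in this section on a common scale, each one can be divided by its token count to get an implied price per SHIB. This is a sketch using only the approximate figures quoted above. The labels are my own, and the results are point-in-time estimates, not the prices at which anything was actually sold.

```python
# (token count, estimated USD value) as quoted in this section.
# "around" and "over" figures are treated as exact for illustration only.
quoted = {
    "May 12 (India Covid-19 relief)": (50e12, 1.2e9),
    "May 16 (burn)": (410e12, 6.8e9),
    "May 17 (transfer toward FLI)": (46e12, 755_807_582),
}

# Implied price per token = estimated USD value / number of tokens.
implied_price = {
    label: usd / tokens for label, (tokens, usd) in quoted.items()
}

for label, price in implied_price.items():
    print(f"{label}: ${price:.8f} per SHIB")
```

The gap between the May 12 figure and the later ones reflects the price crash described above.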

Act 2: The Reporting

June 3, 2021

FLI announces a $25 million grant program and expresses gratitude to Vitalik Buterin, the Shiba Inu community, and Alameda Research.

On June 3, 2021, the Future of Life Institute launched Vitalik Buterin Fellowships centered on AI Existential Safety (“The Vitalik Buterin PhD/Postdoctoral Fellowship in AI Existential Safety”). “The Future of Life Institute is delighted to announce a $25M multi-year grant program aimed at tipping the balance toward flourishing, away from extinction,” read the statement. “This is made possible by the generosity of cryptocurrency pioneer Vitalik Buterin and the Shiba Inu community.”

The closing remark added “the amazing team at Alameda Research” to the acknowledgments. Alameda Research was a sister company to FTX. This hedge fund was founded by Sam Bankman-Fried and was central to FTX’s finances.

“To conclude, we wish to once again express our profound gratitude to all who've made this possible, from Vitalik Buterin and the Shiba Inu Community to the amazing team at Alameda Research.”

The announcement specified two types of grants:

“We will be running a series of grants competitions of two types: Shiba Inu Grants and Vitalik Buterin Fellowships.

Shiba Inu grants support projects, specifically research, education, or other beneficial activities in the program areas.

Buterin Fellowships bolster the talent pipeline through which much-needed talent flows into our program area, tentatively including funding for high school summer programs, college summer internships, graduate fellowships, and postdoctoral fellowships.”

FLI mentioned the “Shiba Inu Grants” again only in the same month, in its June 2021 newsletter, and not at all since then. Did they realize that being associated with a dog-themed memecoin was not such a good idea?

Two weeks earlier

For comparison purposes, notice what else happened in mid-May 2021.

Eliezer Yudkowsky’s Machine Intelligence Research Institute (MIRI) announced two major donations: On May 12, 2021, Vitalik Buterin donated $4.3 million in ETH (Ether). On May 13, 2021, an anonymous donor donated $15.6 million. MIRI noted that the same donor "previously donated $1.01M in ETH to MIRI in 2017."

Most importantly, MIRI immediately reported the converted amount:

"Their amazingly generous new donation comes in the form of 3001 MKR [Maker], governance tokens used in MakerDAO, a stablecoin project on the Ethereum blockchain. MIRI liquidated the donated MKR for $15,592,829 after receiving it.

In other amazing news, the inventor and co-founder of Ethereum, Vitalik Buterin, yesterday gave us a surprise donation of 1,050 ETH, worth $4,378,159.”

This made MIRI’s list of donations look like this:

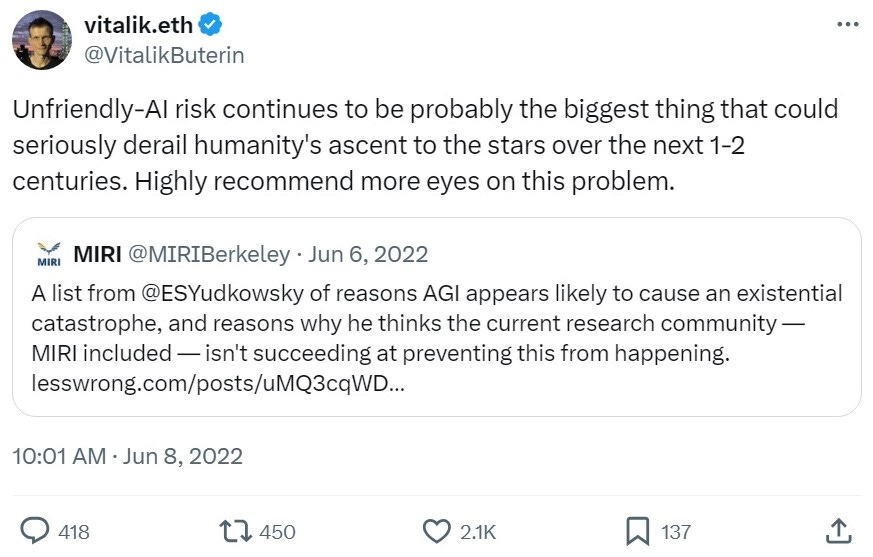

Vitalik’s donations to Eliezer Yudkowsky’s MIRI should not come as a surprise.

Vitalik Buterin’s interview: The SHIB fortune donated was not 10%, but double

Vitalik Buterin appeared on the Lex Fridman Podcast on June 3, 2021, the same day FLI announced his “AI Existential Safety” grants.

The first segment of the show was about his Shiba Inu donations.

“I actually donated 20 percent and dumped 80 percent,” admitted Buterin nonchalantly. “The Covid India group got one batch, and there’s another group that got another batch, and I don’t want to say who they are because I think they want to announce themselves, at some point.”

“I was actually talking to some of these charities,” Buterin added. “And I was impressed by just how much money they managed to get out of selling some of these coins.”

July 20, 2022

Vitalik Buterin thanks Shiba Inu for making FLI’s “AI Existential Safety” fellowship fund possible.

In February 2022, the “Super Bowl” was nicknamed “Crypto Bowl.” The reason was the numerous crypto ads: Crypto.com’s commercial had Matt Damon implying that anyone not buying into crypto was missing out, and that “fortune favors the brave.” FTX had Larry David in a commercial that equated crypto to great inventions like the wheel or the lightbulb. eToro’s ad had people flying “to the moon.”

Crypto hype had reached new heights.

In July 2022, FLI’s Max Tegmark celebrated the “first class of Vitalik Buterin Fellows in AI Existential Safety.”

Vitalik Buterin, in reply, thanked the Shiba Inu community: “Big thanks to the Shiba Inu community, whose cryptocurrency made these fellowships possible!”

The tweet was shared and celebrated across the Shiba Inu community (also known as the “Shib Army”), with Shytoshi Kusama (the lead developer and co-founder of the Shiba Inu project alongside Ryoshi) replying to Vitalik Buterin, “Quite welcome fren!” (a Shiba Inu term for a friend).

Other replies celebrated it with “Shiba Inu to the moon!” and “Woof.”

In the Shiba Discord server, Shytoshi Kusama detailed how it was carried out:

“We changed the world with the first burn to his wallet and subsequent donation,” he wrote. “It's inspiring to be part of such a powerful movement and community and now brand.”

Being associated with crypto was fine, until it wasn’t.

November 2022

The collapse of Sam Bankman-Fried’s FTX and Alameda Research due to fraud allegations.

The disgraced FTX founder, Sam Bankman-Fried (SBF), was one of the largest Effective Altruism donors. SBF was on the Centre for Effective Altruism board when Mac Aulay advised him that cryptocurrency is “the quickest way to raise money for the movement.”

With FTX, Sam was attempting to fulfill what William MacAskill taught him about Effective Altruism: “Earn to give.” It turned out that this concept was susceptible to the “ends justify the means” mentality.

In November 2022, his crypto exchange FTX collapsed alongside Alameda Research.

In November 2023, SBF was convicted of seven fraud charges (stealing $10 billion from customers and investors). Billions of dollars were funneled to his trading firm, Alameda Research, which spent them on Bahamian real estate (the total local property portfolio was estimated at $300M),2 startup investments, and political donations.

Bankman-Fried suggested that “Alameda was incompetent.” However, according to its managers’ admission at trial, they helped Bankman-Fried steal, defraud, and cover it all up. It’s not incompetence, it’s criminal activity.

“The sheer scale of Bankman-Fried’s fraud calls for severe punishment,” the Federal prosecutors wrote on March 15, 2024. “The amount of loss — at least $10 billion — makes this one of the largest financial frauds of all time.”

Eventually, on March 28, 2024, SBF was sentenced to 25 years in prison.

In FTX’s early days, the philanthropist Luke Ding loaned Alameda $6 million, and Jaan Tallinn loaned $110 million worth of Ether. This funding “gave the nascent crypto trading firm set up by Sam Bankman-Fried and cofounder Tara Mac Aulay, along with a handful of other Effective Altruists, a firm financial footing.” In March 2018, Tallinn recalled (most of) the loan. According to Semafor, “that origin story suggests that Bankman-Fried is a creation of the Effective Altruism movement and not, as has often been written, the other way around.”

Public perception of the Effective Altruism movement has declined due to Bankman-Fried’s fraudulent behavior. “In January and February of 2023,” observed the Centre for Effective Altruism, “the community and the outside world applied increased scrutiny to EA community issues.”

January 23, 2023

FLI’s 2021 EU Transparency Register entry lacks the amount liquidated from the Shiba Inu donation since the “audit is in process.” The yearly budget presents the Musk Foundation’s donation as the most prominent one.

According to the European Commission, the Transparency Register is “a database that lists organizations that try to influence the law-making and policy implementation process of the EU institutions.” Since the Future of Life Institute is trying to influence AI regulation, it sends annual reports to this publicly available database.

In January 2023, FLI’s 2021 report to the EU’s Transparency Register (covering Jan 2021-Dec 2021) showed that the biggest donation in 2021 was from the Musk Foundation. Specifically, €3,531,696 from the Musk Foundation out of €4,086,165 was nearly 90% of FLI’s funding (86.4% to be exact).
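The percentage above follows directly from the two euro amounts in the register. A minimal sketch of the calculation, using only the figures quoted in this post (the variable names are mine):

```python
# Amounts from FLI's 2021 EU Transparency Register entry, in euros.
musk_foundation_eur = 3_531_696
total_reported_eur = 4_086_165

# Share of the reported 2021 funding attributed to the Musk Foundation.
musk_share = musk_foundation_eur / total_reported_eur

print(f"{musk_share:.1%}")  # prints "86.4%"
```

Note that the denominator excludes the Buterin donation, which was missing from the register at that point.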

The “other financial info” section provided the bigger picture, in which there was an additional donation during 2021.

According to FLI’s report, “one 2021 donation is missing on this registry” because

“An independent valuation of the value was ongoing by the submission deadline (20 January 2023). We will add this donation in a partial update as soon as this has been completed.”

“When the aforementioned valuation has been completed, the budget will be revised to reflect the additional donation.”

March 22, 2023

FLI’s “Pause Giant AI Experiments” Open Letter. Media outlets rely on the EU report and mention Elon Musk as the leading donor.

When the “Pause Giant AI Experiments” Open Letter came out on March 22, 2023, news outlets covered the Future of Life Institute (which initiated the letter) as primarily funded by the Musk Foundation.

Why? Well, in March 2023, the reporters could only rely on the latest publicly available data, which was the report from January 2023 with the “one missing donation.”

In this intense news cycle, there were two exceptional moments (both on March 31, 2023).

In “AI experts disown Musk-backed campaign citing their research,” Reuters indicated it received a correction request from FLI that the Musk Foundation was “a major, not primary donor to FLI.” This correction request also affected The Guardian’s reporting.3

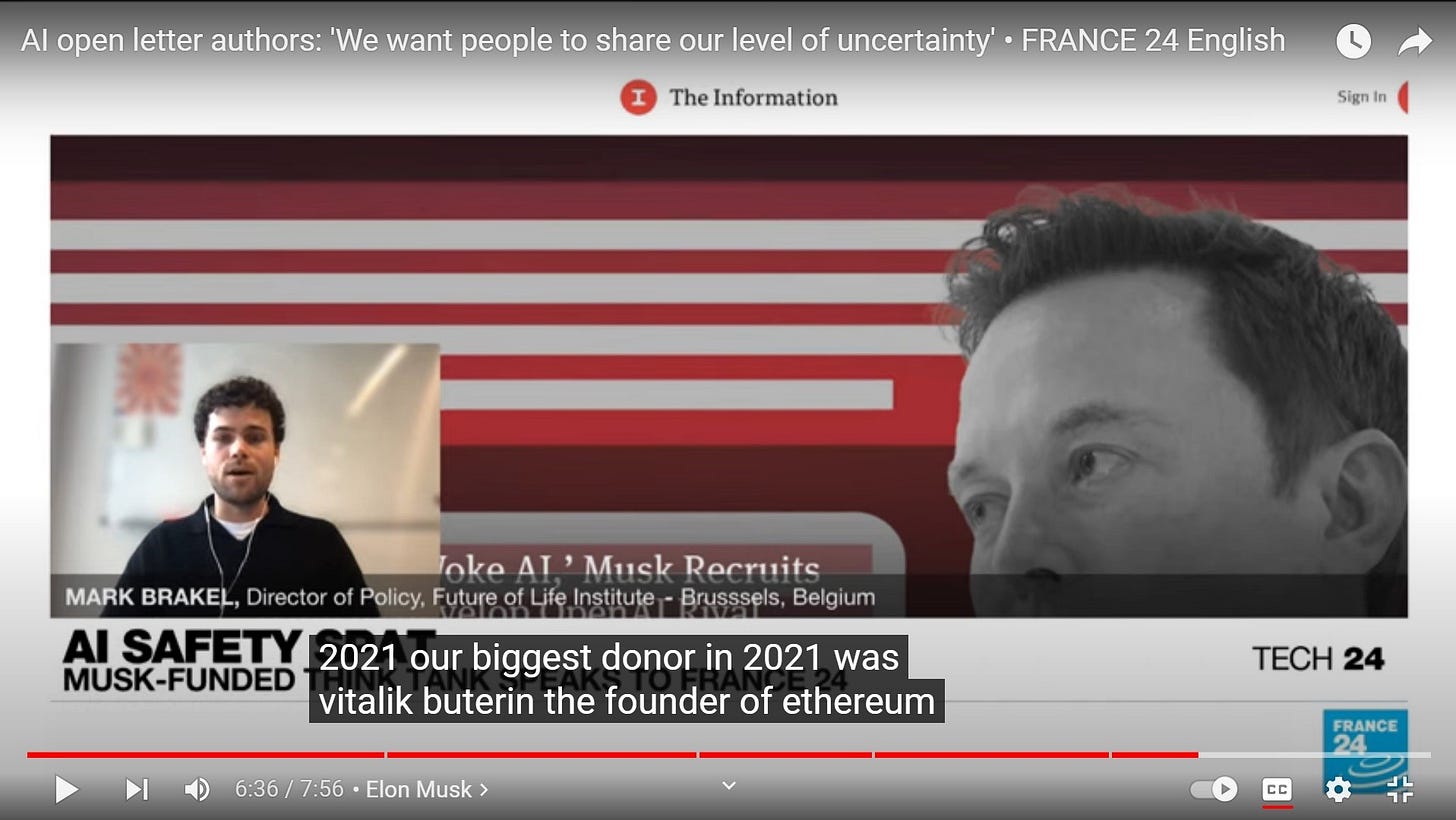

The correction did not identify the primary donor, only that it was not Elon Musk. The donor was named, though, in an interview with FRANCE 24 English.

Not liking the “Musk-funded think tank” framing, Mark Brakel, Director of Policy at the Future of Life Institute, refuted Musk’s dominance:

“Elon Musk wasn’t our biggest donor in 2021. Our biggest donor in 2021 was Vitalik Buterin, the founder of Ethereum. And he dwarfs the amount that Elon Musk has or his foundation has donated to FLI.”4

In the FRANCE 24 article, the reporter described:

“Brakel also said that Vitalik Buterin, founder of the Ethereum cryptocurrency, was the Future of Life Institute's biggest donor in 2021 and continues to be so, a fact not yet reflected on the European Union's Transparency Register, where Elon Musk appears as the organisation's top donor.”

May 15, 2023

The promised “partial update” about 2021 is available online. The “minor cryptocurrency” was valued at €603,156,352 at the date of the donation. “The vast majority of these received funds have been donated to other non-profit organizations.”

Two years after the Shiba Inu donation, the updated report about 2021 arrived (the promised “partial update”). It showed that Vitalik Buterin became FLI’s largest funder by donating €603,156,352.

FLI claimed it became “a much smaller” amount when the price of the “minor cryptocurrency” dropped:

“The donation from Vitalik Buterin was provided in a minor cryptocurrency, and due to illiquidity and price drops, the actual liquidated amount that FLI ultimately ended up with was much smaller than the nominal end-of-year value listed above … FLI converted this asset to dollars at a significantly lower average price than it held on the donation date.”

Interestingly, the funds were not described as going to fellowships. Instead: “The vast majority of these received funds have been donated to other non-profit organizations.”

€603M was their “best estimate of the dollar value at the time of donation, converted to Euros using the exchange rate of December 31, 2021.”

Regarding the total budget of €607,219,940, the institute noted that the “annual audit is in process.”

First mention of Buterin’s “large donation” on FLI’s website – May 2023

FLI’s website did not help figure out the actual dollar amount liquidated from the Shiba Inu donation. In March 2023, the “Funding” section on FLI’s website didn’t include the cryptocurrency donation.

So, for the journalists intensely covering FLI - there was no mention of Vitalik Buterin.

According to the “Wayback Machine,” it stayed that way until May 17, 2023, when FLI altered the URL from “Funding” to “Finances” and added:

“In 2021, computer programmer Vitalik Buterin provided FLI with a large and unconditional donation that in part serves as an endowment and helps to guarantee our independence from any one donor.”

So, in May 2023, FLI officially admitted on its website that Vitalik Buterin is its “largest donor by far,” but without specifying the magnitude of the amount.

June 5, 2023

FLI’s Transparency Register for 2022 is available online. It states the 2022 budget as €4,094,000. It does not include the “external regranting” (e.g., Vitalik’s $25M).

FLI’s next annual update was due on January 20, 2024.

However, on June 5, 2023, FLI filed a new report on the financial year 2022, overwriting the “old” filing with this new one.

So, 21 days after the May 15 update, FLI’s Transparency Register entry showed a different budget: €4,094,000.

Vitalik Buterin appeared in the “Complementary information” section:

“Our budget deficit was covered entirely by our endowment, which has been provided by Vitalik Buterin in 2021. This funding has helped FLI maintain its independence.”

This time, there was no mention of any “vast majority” funds going to “other non-profit organizations.” FLI linked to the PhD/Postdoc grants, “We are also using his donated funds to support a multi-year grant program.”

In any case, FLI stated that the €4M budget “does not include our external regranting.” Thus, all other millions used for “external regranting” are excluded.

Act 3: The Money Trail

This section includes:

Grants to other organizations

Funding new organizations

AI regulation

Grants to Other Organizations

Interestingly, on FLI’s website, under “Archives: Grant Programs,” the archive jumps from 2018 to updates about 2022. There’s no “2021 Grants” page.

Besides the Vitalik Buterin PhD/Postdoctoral “AI Existential Safety” fellowships, there are other “2022 Grants” that amount to $3,733,685. The ≈$3.7M was donated to: the Foresight Institute, University of Cambridge’s Centre for the Study of Existential Risk (CSER), UC Berkeley’s AI Policy Hub, USC’s Hollywood, Health & Society (HH&S), and Wise Ancestors.

The “2023 Grants” amount to $8,588,000. The ≈$8.6M was donated to: AI Impacts (based at the Machine Intelligence Research Institute [MIRI] in Berkeley), AI Objectives Institute, Alignment Research Center (ARC), Berkeley Existential Risk Initiative (BERI), the Center for AI Safety (CAIS), Centre for Long-Term Resilience (Alpenglow Group Limited), Collective Intelligence Project, FAR AI inc., Foresight Institute, Ought Inc., Oxford China Policy Lab (OCPL), Redwood Research Group Inc., The Future Society (TFS), the University of Chicago’s Existential Risk Laboratory (XLab), and UC Berkeley’s AI Policy Hub.

In 2023, there were also “nuclear war” grants, which amounted to $4,057,534.

Funding New Organizations

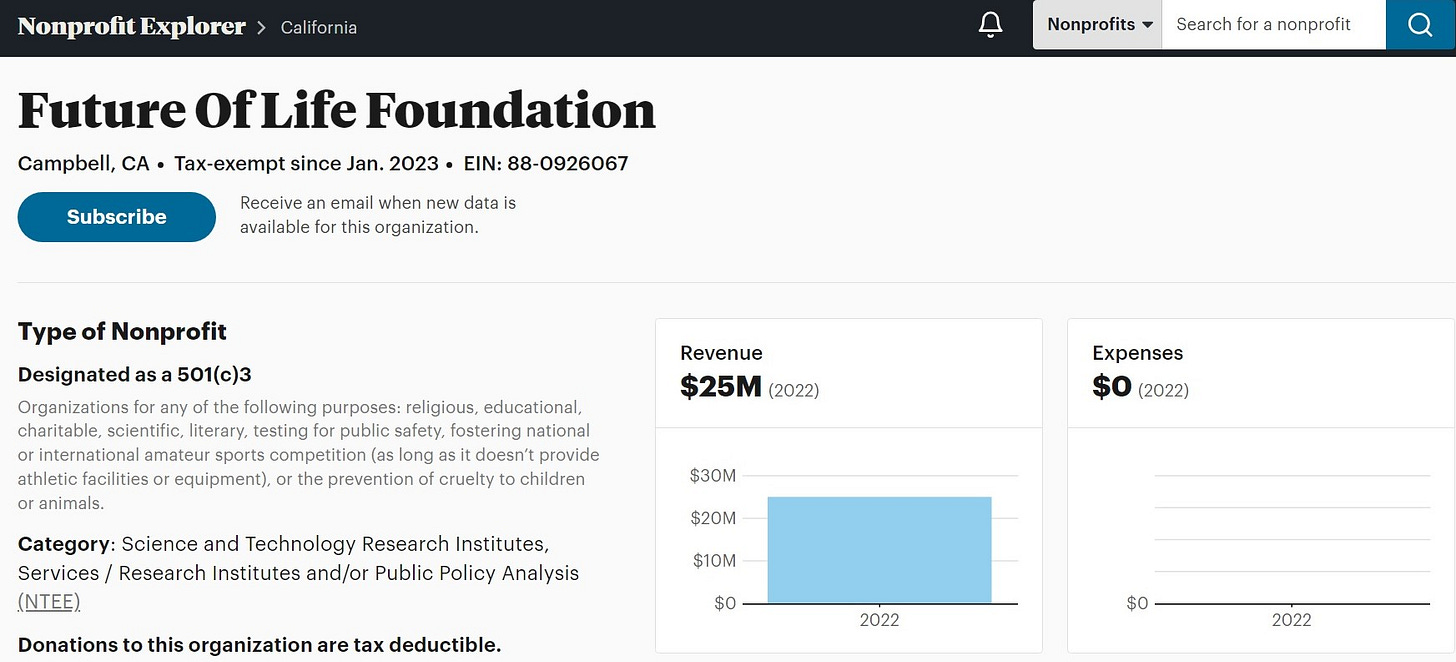

1. FLI’s affiliated non-profit: Future of Life Foundation (FLF)

According to FLF.org, the Future of Life Foundation is “a new nonprofit organization affiliated with the Future of Life Institute.” The two organizations share the same address in Campbell, CA, and the same management: Anthony Aguirre, Jaan Tallinn, Max Tegmark, and Meia Chita-Tegmark.5

In FLF’s 990 form, under “Related Tax-Exempt Organization,” it is stated that Future of Life Institute's primary activity is to “Support Future of Life Foundation.”

On FLI’s website, FLF’s focus is described as “incubating new organizations and major projects aligned to our mission.”6 The specific goal? “We aim to help start 3 to 5 new organizations per year.”

FLF was formed in 2022 with a revenue of $25M.

FLF, as a different non-profit, isn’t involved in lobbying, only grantmaking, and it doesn’t need to report to the “Transparency Register.” Thus, FLF’s tens of millions of dollars are kept out of FLI's reports to the EU.

FLF’s description says, “During its recent formation in 2022, the organization received funds to establish an operating budget for future charitable, scientific, and educational programming but had no expenditures during the tax year.” “The large cash balance at tax year end supports research and operations beginning in 2023.”

According to 80,000 Hours (an “Effective Ventures” project, mainly funded by Open Philanthropy), the Future of Life Foundation has 3 job openings: Researcher (General), Researcher (Specializing in AI Safety), and Founder Search and Recruitment Lead.

“Our primary plan for doing so is to work to identify gaps in the landscape of efforts to fulfill this mission, and to then determine what new organizations should exist to address these challenges. Once identified, we will recruit, fund, and provide significant support to founders who demonstrate that they can bring these organizations to life.”

“Our tentative plan is to help create an average of 3-5 new organizations per year over the next 5 years, providing each with significant operational support and, if recruited founders, further develop our research into a compelling proposal, substantial runway.” “As a new organization, we’re unsure just how narrow or expansive we’ll find our scope of activities to be.”

Among the Activities: “Analyze the likely impact of proposed organizations.”

Among the Likely Qualifications: “Passion for helping to steer transformative technologies away from existential risk and toward long-term flourishing.”

Then, FLI launched a new non-profit called CARMA.

2. The Center for AI Risk Management & Alignment (CARMA)

The Future of Life Institute’s new non-profit CARMA.org is currently hiring for 3 positions: AI Risk Management Researcher, Geostrategic Dynamics Researcher, and U.S. Legal & Policy Researcher.

CARMA’s mission & purpose:

CARMA’s mission is to markedly mitigate the risks to humanity and to the biosphere from unaligned transformative artificial intelligence, via tightly-integrated technical, strategy, and policy research competencies.

By growing technical safety, beneficent alignment, and coordinative governance, and by enabling others to better do so, we aim to help manage the environmental and existential risks from transformative AI through lowering their likelihood.

The “Head of CARMA” is Richard Mallah. He is Future of Life Institute’s “Principal AI Safety Strategist” and works in FLI’s “core team.”

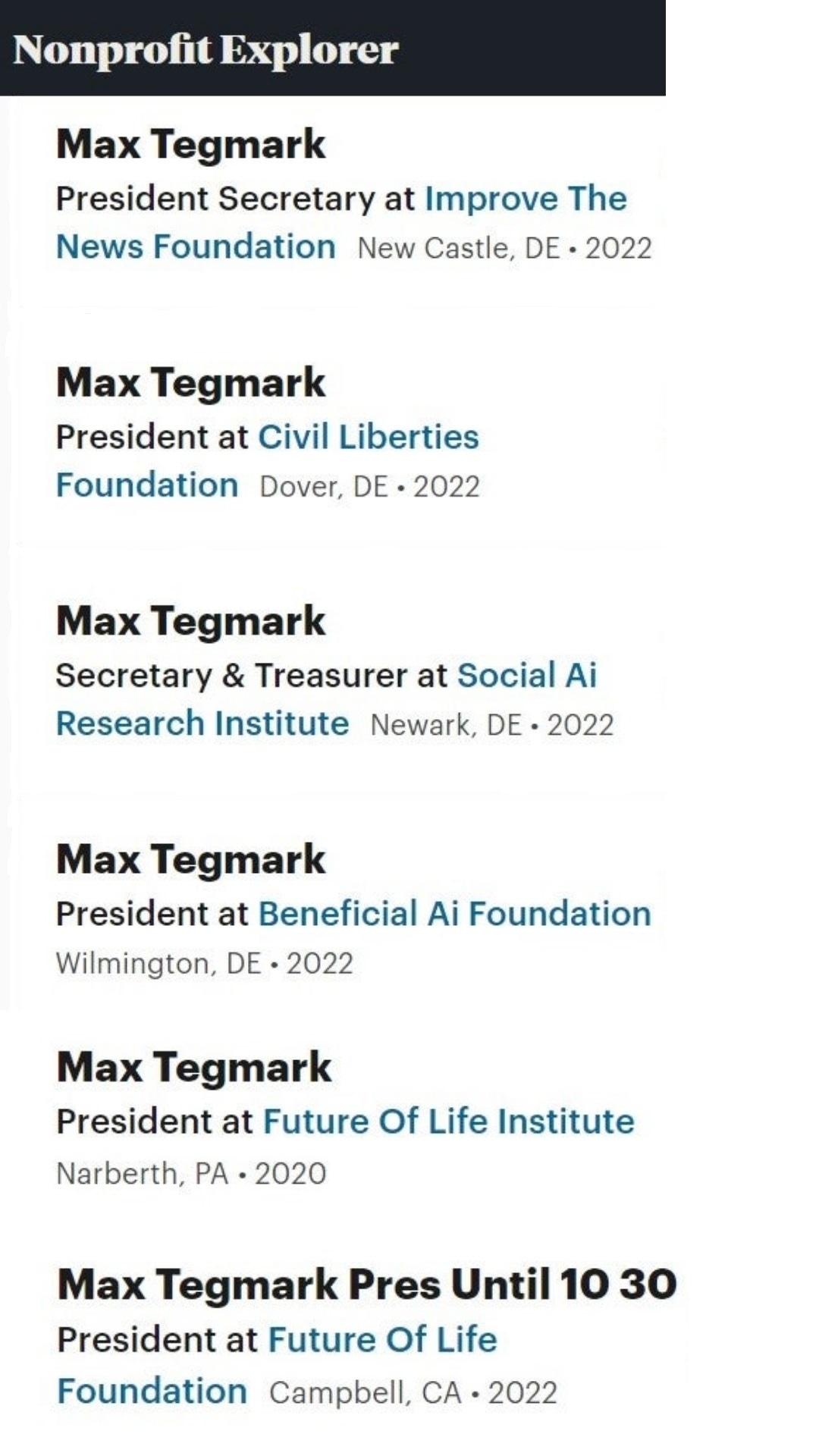

3. Tegmark’s additional non-profit organizations

Max Tegmark’s wife, Meia Chita-Tegmark, is both FLI and FLF Board Treasurer.

In ProPublica, under the name Max Tegmark, there are other organizations.

Alongside FLI and FLF, Max Tegmark and Mihaela Chita-Tegmark manage 4 additional non-profits:

- Spot the patterns?

Beneficial AI Foundation. Delaware. In 2022: Expenses $821.

Social AI Research Institute. Delaware. In 2022: Expenses $821.

Civil Liberties Foundation. Delaware. In 2022: Expenses $821.

“Beneficial AI Foundation” doesn’t have any designated website. What can be found is that Max Tegmark’s postdoctoral fellow, Peter S. Park (Vitalik Buterin Postdoctoral Fellow in AI Existential Safety), thanked the “Beneficial AI Foundation” in two of his papers in 2023: “He acknowledges research funding from the Beneficial AI Foundation.”

Improve the News Foundation. Delaware.

In 2020: Loan $6,506

In 2021: Revenue $3,250,200 (1 contribution from FLI)

In 2022: Revenue $2,950,000 (1 contribution from FLI)

So, since Buterin’s donation, Tegmark’s Improve the News Foundation has received $6,200,200 from Tegmark’s FLI.
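The total is simply the sum of the two yearly contributions listed above. A small sketch, with the years as dictionary keys of my own choosing:

```python
# Contributions from FLI to the Improve the News Foundation, as listed above (USD).
contributions_from_fli = {
    2021: 3_250_200,
    2022: 2_950_000,
}

total_from_fli = sum(contributions_from_fli.values())

print(f"${total_from_fli:,}")  # prints "$6,200,200"
```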

In 2023, “Improve the News” was rebranded as “Verity.” You can find it in Verity (improvethenews.org), Lite.Verity.News, Medium, Twitter (89.3K Followers), Facebook (627K Followers), and it also has a podcast.

One of its uses is for FLI’s agenda. For example, Tegmark shared an article from Verity in favor of FLI’s new open letter about deepfakes. Then, he shared a link to an Orwell Parody account (an independent satire site, "a free news publication created by the Improve the News Foundation”). It was against the use of AI in the U.S. military.

AI Regulation

AI Panic Campaign

In a Techdirt article, “2023: The Year of AI Panic,” I summarized how the extreme ideology of “human extinction from AI” became one of the most prominent trends. We had open letters about the risk of human extinction from AI (initiated by FLI and CAIS) and “AI forums” calling for sweeping regulatory interventions (e.g., AI Safety Summit). Gradually, then suddenly, the AI panic was everywhere.

In February 2024, FLI’s director of operations, Ben Eisenpress, warned about the “5 easy ways artificial intelligence could kill off the human race and make mankind extinct.”

FLI’s Eisenpress claimed the Terminator scenarios need to be taken seriously: “Rogue AI, when AI escapes human control and causes widespread harm, is a real risk.” Referencing Nick Bostrom’s “paperclips thought experiment,” Eisenpress said, “Simply giving AI an open-ended goal like ‘increase sales’ is enough to set us on this path.”

Harvard’s Steven Pinker protested in November 2023 that he is not interested in paying techies “to fret about AI turning us into paperclips.” Don’t worry, Pinker, your donations aren’t funding it. It’s Vitalik Buterin’s donation that enables FLI to promote these scenarios all over the place.

In “The AI Panic Campaign – part 2,” I explained that “framing AI in extreme terms is intended to motivate policymakers to adopt stringent rules.”

“After using the media to inflate the perceived danger of AI, AI Safety organizations demand sweeping regulatory interventions in AI usage, deployment, training (cap training run size), and hardware (GPU tracking).”

The President and co-founder of FLI, Max Tegmark, frequently discusses the end of humanity. “I believe the artificial intelligence that we’re trying to build will probably annihilate all of humanity pretty soon.” In this context, his organization has recommended a set of policy recommendations. This is where the plot thickens.

FLI’s AI Policy

“I worry a lot about the influence on regulation, and how much influence these people have with respect to lobbying government and regulators and people who really don't understand the technologies very well.”

[Melanie Mitchell, AI researcher at the Santa Fe Institute, quoted in Politico’s article]

Lobbying highlights

2023 was a banner year: FLI participated in the UK AI Safety Summit, its co-founder Jaan Tallinn was appointed to the UN High-level Advisory Body on AI, and FLI President Max Tegmark addressed the U.S. Congress at the “AI Insight Forum.”

According to the U.S. Senate Lobbying Disclosure LD-1 & LD-2 reports, shared by Politico, since 2020, FLI “has spent roughly $500,000 to lobby Washington on AI.”

FLI is currently recruiting a representative for the French AI Safety Summit.

EU AI Act: Pushing for regulation of general-purpose AI systems (GPAIS)

In April 2021, the European Commission's original draft of the Act did not mention GPAIS or foundation models. In August 2021, FLI provided feedback to the Commission that the draft did not address increasingly general AI systems “such as GPT-3.” In November 2021, the Council introduced an Article 52a dedicated to GPAIS. In March 2022, the JURI committee in the European Parliament essentially copied these same provisions into their position. In May 2022, the Council substantially modified the provisions for GPAIS. In June 2023, the Parliament introduced Article 28b, with obligations for the providers of foundation models.

FLI’s celebration

Risto Uuk is FLI’s EU Research Lead. He is “leading research efforts on EU AI Policy,” has done “work on the EU AI Act,” has “raised awareness of general-purpose AI,” and appears in the EU Transparency Register as an active lobbyist since August 2021.

As the member states endorsed the EU AI Act on February 2, 2024, FLI responded: “We are pleased that the AI Act has today been adopted by the EU’s Committee of Permanent Representatives.” The institute also mentioned “years of work and multi-stakeholder advocacy.”

March 13, 2024, marked the passing of the AI Act. Risto Uuk and FLI couldn’t hide their excitement:

Among Jaan Tallinn’s proposals: Ban training runs, surveil software, and make GPUs (above a certain capability) illegal

In November 2023, FLI’s Jaan Tallinn was interviewed in the “AI and You” podcast.

It was very revealing.

"I do think that, certainly, governments can make things illegal."

"Well, you can make hardware illegal.

You can also say that producing graphics cards above a certain capability level is now illegal. And, suddenly, you have much, much more runway as a civilization."

When he was asked, "Do you get into a territory of having to put surveillance on what code is running in a data center?" he replied: "Yeah. Regulating software is much, much harder than hardware." He later added, "If you let the Moore’s Law continue, then the surveillance has to be more and more pervasive."

"So, my focus for the foreseeable future will be on regulatory interventions and trying to educate lawmakers, and helping and perhaps hiring lobbyists to try to make the world safer."

In October 2023, Jaan Tallinn shared how he educates lawmakers in the “European high-level AI experts group at the European Commission” (that’s how he described the “UN High-level Advisory Body on AI”). In his example, he discussed something he previously called the “summon and tame” paradigm:

“Nasty secret of AI field is that AIs are not built, they are grown. The way you build a frontier model, ’build’ a frontier model is you take two pages of code, you put them in tens of thousands of graphics cards, and let them hum for months. And then you're going to open up the hood and see what creature brings out and what you can do with this creature.

I think the capacity to regulate things and kind of deal with various liability constraints, et cetera, they apply to what happens after. Once this creature has been kind of tamed, and that's what the fine-tuning and reinforcement learning from human feedback, et cetera, is doing. And then productized. Then how do you deal with these issues? This where we need the competence of other industries.

But how can avoid the system not escaping during training run? This is like a completely novel issue for this species. And we need some other approaches, like just banning those training runs.”

When FLI’s co-founder, one of the biggest x-risk billionaires, lays out his plan, you should listen. Then, keep in mind that FLI’s AI policy echoes his views. The following is the most recent example:

FLI’s grant program for “global governance mechanisms/institutions”

In 2024, FLI initiated a new grant program for designing global governance mechanisms or institutions “that can help stabilize a future with 0, 1, or more AGI projects.” With this project, FLI intends “to support several proposals. Accepted proposals will receive a one-time grant of $15,000.”

Request for Proposal: “We expect proposals to specify and justify whether global stability is achieved by banning the creation of all AGIs, enabling just one AGI system and using it to prevent the creation of more, or creating several systems to improve global stability.”

Among the questions: “Verification mechanisms to ensure no one is cheating on AGI capability development- How to prevent others from cheating? Penalties? Surveillance mechanisms? How much does this need to vary by jurisdiction?”

March 26, 2024

Politico’s exposé and Vitalik Buterin’s response

“The vast majority of this gift has been transferred to a donor-advised fund and other non-profit entities that primarily provide asset management services. This is a routine setup for non-profits managing large resources.”

[The Future of Life Institute in response to Politico]

Politico’s article caused quite a stir.[7] It led to a backlash against Vitalik Buterin. Some of it came in memes:

Vitalik’s response was: “Oops ¯\_(ツ)_/¯ ”

The Well-Oiled X-Risk Machine

Journalism’s role is to hold power to account

“Extinction panics are, in both the literal and the vernacular senses, reactionary, animated by the elite’s anxiety about maintaining its privilege in the midst of societal change.”

[Tyler Austin Harper, “The 100-Year Extinction Panic Is Back, Right on Schedule”]

- The RISE

“Over the last few years, a group of young, effective altruism-aligned, heavily white and male, somewhat cultish operatives… have gradually ascended in Democratic politics.”

[From Teddy Schleifer’s piece on Open Philanthropy’s Dustin Moskovitz, “DustinBucks”]

The growing “Epistemic Community of AI Safety” contemplated “takeover” scenarios in which out-of-control AI systems will exhibit power-seeking behaviors, while its members exhibited power-seeking behaviors themselves.

Leading Effective Altruists spread “x-risk” scenarios across mass media and, through lobbying efforts, into politicians’ talking points.

Even MIRI’s CEO, Malo Bourgon, couldn’t believe their success:

“Things that were too out there for San Francisco are coming out of the Senate Majority Leader’s mouth.”

It was years in the making. The EA movement created a network comprising hundreds of organizations that are led by a relatively small group of influential leaders and a handful of key organizations.[8] Specifically, the “AI Existential Risk” ecosystem (institutes, think tanks, etc.) was empowered by half a billion dollars from Effective Altruism.

With this funding, AI doomers sought power.

They indeed rose to power as media heroes and policy advocates.

But the more power and influence you have, the more scrutiny you will face.

- The FALL?

In January 2024, in an article entitled “Weighing the Prophecies of AI Doom,” I noted that behind the AI Safety organizations, “there’s a well-oiled ‘x-risk’ machine.” Therefore, “When the media is covering them, it has to mention it.” Especially since they would not mention it themselves.[9]

Politico’s exposé demonstrates that “the sunlight is the best disinfectant.”

That’s also why WIRED’s “The Death of Effective Altruism” by Leif Wenar is a MUST-READ for everyone.

Hopefully, more news outlets will speak truth to power, and political backlash will follow.

In spite of Effective Altruists’ considerable influence (in the industry, academia, media, and policymaking), we can expect the pendulum to swing in the opposite direction.

Hopefully, with a reckoning about how we let them become so influential in the first place.

Endnotes

“With the philosophers too, good-for-others kept merging into good-for-me. SBF bought his team extravagant condos in the Bahamas; the EAs bought their crew a medieval English manor as well as a Czech castle. The EAs flew private and ate $500-a-head dinners.”

Source: “The Death of Effective Altruism” by Leif Wenar, WIRED, March 27, 2024.

FLF added an executive, Josh Jacobson, as CEO. Anthony Aguirre replaced Max Tegmark as the President of FLF on October 30, 2022.

Source: FLI website - Frequently Asked Questions (FAQ)

It became a known fact: Wikipedia – Shiba Inu (cryptocurrency)

See “Appendix B: EA Organizations” in “Effective Altruism and the strategic ambiguity of ‘doing good’” by Mollie Gleiberman.

“Many E.A.s now disavow the label: I interviewed people who had attended E.A. conferences, lived in E.A. group houses … but would not cop to being E.A.s themselves. (One person attempted this dodge while wearing an effective-altruism T-shirt).”

[The New Yorker, March 2024]

