What’s Wrong with AI Media Coverage & How to Fix It

In July 2023, Steve Rose from The Guardian shared this simple truth:

“So far, ‘AI worst case scenarios’ has had 5 x as many readers as ‘AI best case scenarios.’”

Similarly, Ian Hogarth, author of the column “We must slow down the race to God-like AI,” shared that it was “the most read story” in the Financial Times (FT.com).

The Guardian’s headline declared that “Everyone on Earth could fall over dead in the same second.” The FT OpEd stated that “God-like AI” “could usher in the obsolescence or destruction of the human race.”

It’s not surprising that those articles were successful. After all, “If it bleeds, it leads”:

We need to consider the structural headwinds buffeting journalism - the collapse of advertising revenue, shrinking editorial budgets, smaller newsrooms, and the demand for SEO traffic, said Paris Martineau, a tech reporter at The Information.

That helps explain the broader sense of chasing content for web traffic. “The more you look at it, especially from a bird’s eye view, the more it [high levels of low-quality coverage] is a symptom of the state of the modern publishing and news system that we currently live in,” Martineau said. In a perfect world, all reporters would have the time and resources to write ethically-framed, non-science fiction-like stories on AI. But they do not. “It is systemic.”1

The media thrives on fear-based content. It plays a crucial role in the self-reinforcing cycle of AI doomerism, with articles such as “Five ways AI might destroy the world.”

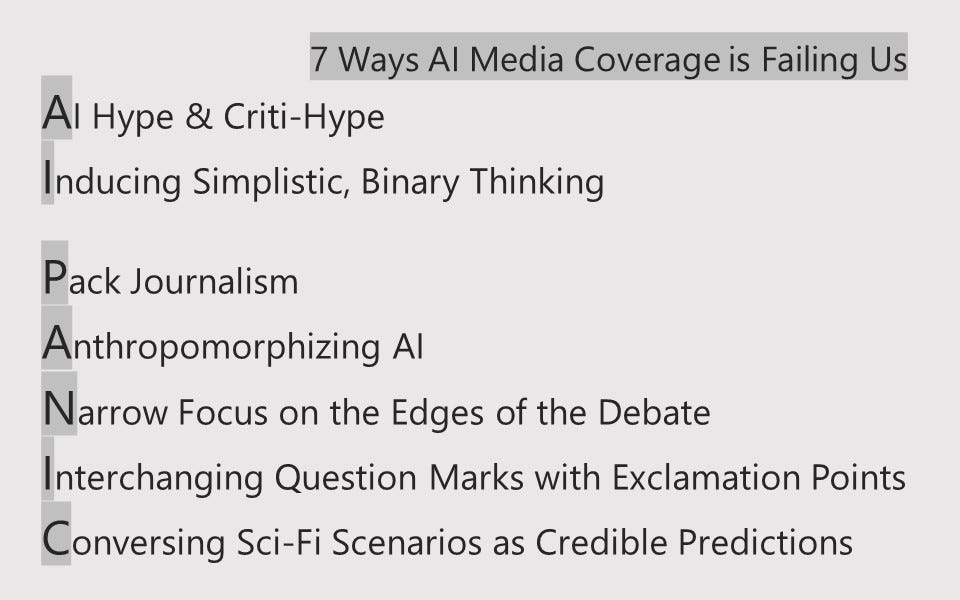

This is why I’ve outlined the main flaws of AI media coverage in an “AI PANIC” acronym.

7 Ways AI Media Coverage is Failing Us

AI Hype & Criti-Hype

AI Hype: Overconfident techies bragging about their AI systems (AI Boosterism).

AI Criti-Hype: Overconfident doomsayers accusing those AI systems of atrocities (AI Doomerism).

Both overpromise the technology’s capabilities.

Inducing Simplistic, Binary Thinking

Coverage is either simplistically optimistic or simplistically pessimistic.

When companies’ founders are referred to as “charismatic leaders,” AI ethics experts as “critics/skeptics,” and doomsayers (without expertise in AI) as “AI experts” - it distorts how the public perceives, understands, and participates in these discussions.

Pack Journalism

Copycat behavior: News outlets report the same story from the same perspective.

It leads to media storms.

In the current media storm, AI Doomers’ fearmongering overshadows the real consequences of AI. It’s not a productive conversation to have, yet the press runs with it.

Anthropomorphizing AI

Attributing human characteristics to AI misleads people.

It begins with words like “intelligence” and moves to “consciousness” and “sentience,” as if the machine has experiences, emotions, opinions, or motivations. This isn’t a human being.

Narrow Focus on the Edges of the Debate

The selection of topics for attention and the framing of these topics are powerful agenda-setting roles. This is why it’s unfortunate that the loudest shouters lead the AI story’s framing.

Interchanging Question Marks with Exclamation Points

Sensational, deterministic headlines prevail over nuanced discussions. “AGI will destroy us”/“save us” make for good headlines, not good journalism.

Conversing Sci-Fi Scenarios as Credible Predictions

“AI will get out of control and kill everyone.” This scenario doesn’t need any proof or factual explanation. We saw it in Hollywood movies! So, that must be true (right?).

The above flaws can help explain the prevalence of “X-risk” media coverage at this moment.2

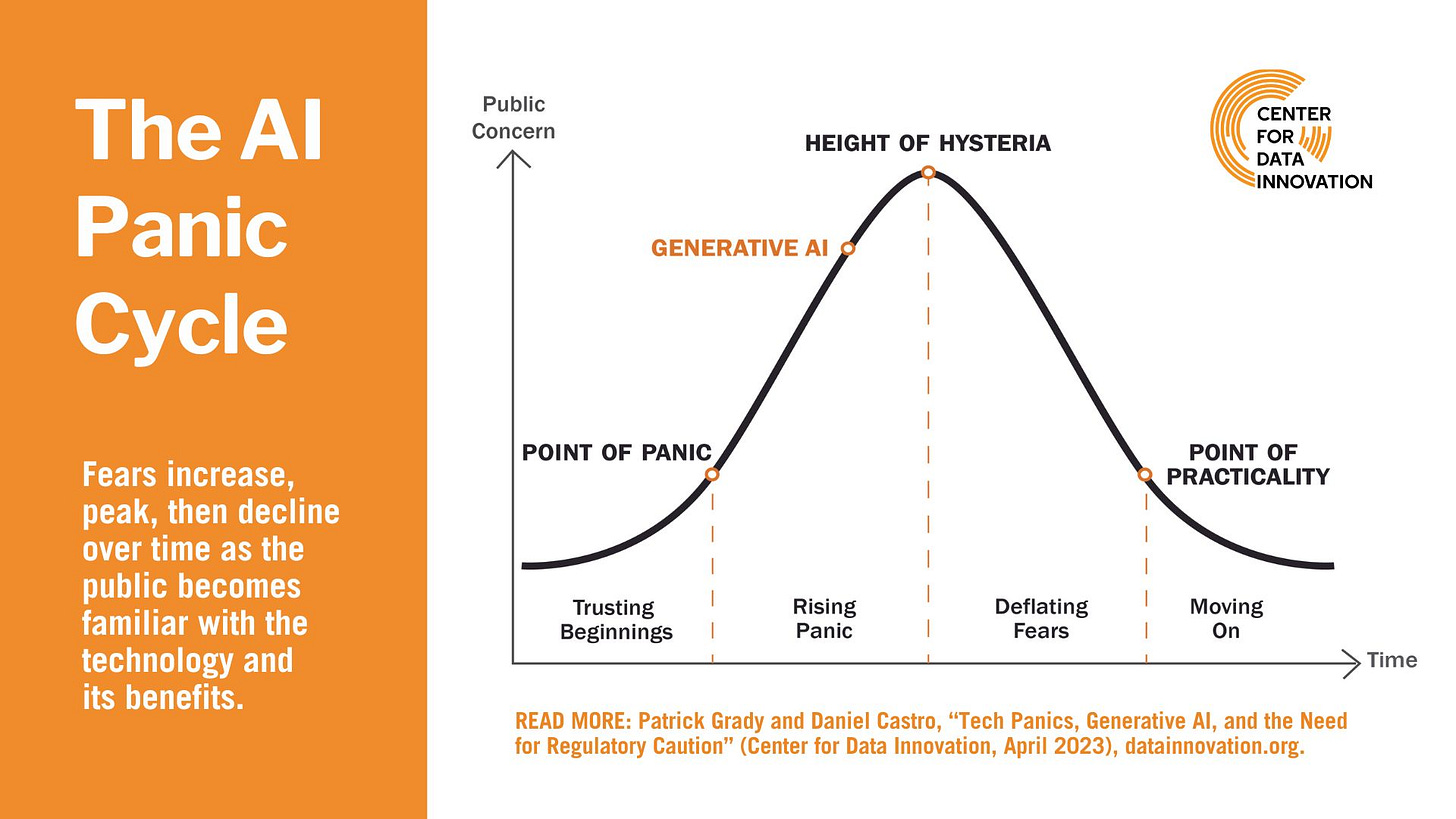

However, we can expect that the doomsaying will not stay this dominant.

The Path Forward

Panic cycles, as their name implies, are circular. At some point, the hysteria calms down (as demonstrated in the “AI Panic Cycle”).3

Below are some suggestions for ways to reach that point.

Media:

● The media needs to stop spreading unrealistic expectations (both good and bad). The focus should be on how AI systems actually work (and don’t work). Alongside discussing what AI is, we also need to discuss what it isn’t.

● Media attention should not be paid to the fringes of the debate. The focus should return to the actual challenges and the guardrails they require.

● There are plenty of AI researchers who would love to inform the public in a balanced way. Instead of reaching out to Eliezer Yudkowsky for yet another “We’re all gonna die!” quote, journalists should reach out to other voices. Doomers had a great run as media heroes. It’s time to highlight more diverse voices that can offer different perspectives.

Audience:

● Whenever people make sweeping predictions with absolute certainty in a state of uncertainty, it is important to raise questions about what motivates such extreme forecasts.

● We need to keep reminding ourselves that the promoters of hype and criti-hype have much to gain from the impression that AI is far more powerful than it actually is. Rather than getting caught up in those hype cycles, we should be more skeptical and take a more nuanced look at how AI affects our daily lives.

● We need to look at the complex reality and see humans at the helm, not machines. It’s humans making the decisions: designing, training, and applying these systems. Many social forces are at play here: researchers, policymakers, industry leaders, journalists, and users all shape this technology further.

1. Jem Bartholomew and Dhrumil Mehta, “How the Media is Covering ChatGPT,” Columbia Journalism Review, May 26, 2023, https://www.cjr.org/tow_center/media-coverage-chatgpt.php.

2. Nirit Weiss-Blatt, “Your Guide to the Top 10 AI Media Frames,” AI Panic Newsletter, September 10, 2023, https://www.aipanic.news/p/your-guide-to-the-top-10-ai-media.

3. Patrick Grady and Daniel Castro, “Tech Panics, Generative AI, and the Need for Regulatory Caution,” Center for Data Innovation, May 1, 2023, https://datainnovation.org/2023/05/tech-panics-generative-ai-and-regulatory-caution/.
