When Effective Altruism Takes a Dark Turn

Prologue

There’s not enough public knowledge about Effective Altruism’s controversial beliefs and how they manifest in extreme experiences. So, I decided to inform you about one such story: “Leverage Research.”

It is the tip of the iceberg, as some other concerning groups and events are excluded from this post.1

Contents

Background

Effective Altruism, Rationality, and AI Doomerism

How “The EA/Rationality Community Creates Crazy Stuff”

The Story of Leverage Research

Initial Introduction of Leverage Research

Leverage’s Alleged Abusive Psychological Experiments

- Leverage’s “Debugging” aimed to end the members’ “irrationalities”

“The Only Organization with a Plan” to Save the World

Ecosystem: Paradigm Academy and Reserve

- Paradigm Academy was the for-profit arm of the non-profit Leverage

- Reserve: The crypto startup for raising money

Leverage – The PLAN

From “Leverage 1.0” to “Leverage 2.0”

Effective Altruists Discuss “Leverage is a Cult”

Former Members Who are Active in the AI X-Risk Ecosystem

Epilogue

Background

Effective Altruism, Rationality, and AI Doomerism

First, let’s clarify the two relevant ideologies, Rationality and Effective Altruism.

Rationality: An ideology that emerged from the LessWrong community blogging website, founded by Eliezer Yudkowsky in 2009. Rationalists focus on “improving human reasoning and decision making,” and many members believe that advanced AI is “a very big deal for the future of humanity.”

Among the influential figures are Eliezer Yudkowsky, Nick Bostrom, and Jaan Tallinn, and among the organizations are the Machine Intelligence Research Institute (MIRI) and LessWrong.

Effective Altruism: A movement that could be seen as the twin sibling of the Rationality movement. Whereas Rationalists aim to maximize their rationality, Effective Altruists strive to maximize their “positive impact” on the world.

Among the influential figures are William MacAskill, Toby Ord, Sam Bankman-Fried, and Dustin Moskovitz, and among the organizations are the Centre for Effective Altruism (CEA) and Open Philanthropy.2

The third pillar is AI Doomerism. According to the Effective Altruism movement, the most pressing problem in the world is preventing an apocalypse where an Artificial General Intelligence (AGI) exterminates humanity.

A thorough investigation of the EA movement’s public-facing discourse versus its inward-facing discourse found that it used “Bait-and-Switch” tactics to attract new members, who were led from global poverty to AI doomerism. The guidance was to promote the public-facing causes (less controversial ones like “giving to the poor”) and keep quiet about the “core EA” causes (existential risk/AI safety). Influential Effective Altruists explicitly wrote that this was the best way to grow the movement.

At least $1.6 billion was poured into expanding the existential risk (x-risk) community (new organizations, grants, etc.), especially by Dustin Moskovitz (Open Philanthropy), Vitalik Buterin (his Shiba Inu donation to the Future of Life Institute), Jaan Tallinn (Survival and Flourishing Fund), and Sam Bankman-Fried (FTX Future Fund).

The “human extinction from AI” idea gained traction.

In early 2024, in the LessWrong Forum, there was a debate about “Stop talking about p(doom)” (probability of doom). It came after a wave of influential figures shared their “AI doom” predictions.

Paul Christiano, MIRI’s former Research Associate and currently NIST’s Head of AI Safety, said in that discussion that the “existential risk” term is not “very helpful in broader discourse” and “In most cases, it seems better to talk directly about human extinction, AI takeover, or whatever other concrete negative outcome is on the table.” (Yes, he wrote “concrete”).

And indeed, the members of this community speculate about such apocalyptic scenarios… non-stop.

Up to the point where it hurts the community members’ mental health.

Considering the above discourse, the following LessWrong post is quite eye-opening.

How “The EA/Rationality Community Creates Crazy Stuff”

“How do you end up with groups of people who do take pretty crazy beliefs extremely seriously?” asked Oliver Habryka, who runs Lightcone Infrastructure/LessWrong, and manages the LTFF (Long-Term Future Fund).

It was mid-2023, and Habryka tried to understand “what is going on when people in the extended EA/Rationality/X-Risk social network turn crazy in scary ways.”

His central thesis was: “People want to fit in.”3

Habryka’s opening examples were FTX and Leverage Research.

While FTX’s story is well known, Leverage Research’s story is less so.

The Story of Leverage Research

Please keep in mind, before we dive into the detailed story:

It’s all based on publicly available data (e.g., Zoe Curzi’s post on Medium, discussions on the Effective Altruism Forum, LessWrong Forum, etc.). Some of it was gathered using the Internet Archive’s Wayback Machine.

As you’ll soon see, when things get too extreme, the broader EA community distances itself from it. As it should.

However, the underlying causes of why it occurs in the first place remain.

Initial Introduction of Leverage Research

In January 2012, Luke Muehlhauser introduced Leverage Research to the Rationalists of the LessWrong Forum. He was then the Executive Director of the Machine Intelligence Research Institute in Berkeley, California (MIRI), and is now a senior program officer of AI governance and policy at Open Philanthropy. The post was written by Leverage Research founder Geoff Anders: “I founded Leverage at the beginning of 2011. At that time, we had six members. Now, we have a team of more than twenty. Over half of our people come from the LessWrong/Singularity Institute community.”

Leverage Research’s connection to Effective Altruism and Rationality communities was dominant from the start:

“Leverage ran the first two EA Summits in 2013 and 2014 before handing that over to the Centre for Effective Altruism (CEA) to run the event as EA Global from 2015 onwards.

Leverage was also important in encouraging Effective Altruism to become a movement and ran one of the earliest EA movement-building projects known as The High Impact Network (THINK).

On the Rationality community side, Leverage Research hosted a LessWrong Mega meetup in Brooklyn in February 2012, invited the Center for Applied Rationality (CFAR) to give a version of their workshop at the EA Summit 2013, and provided volunteer instructors for CFAR’s workshops for more than a year.”

This alignment with Effective Altruism/Rationality was evident in Geoff Anders’ initial introduction post. “One of our projects is existential risk reduction. We have conducted a study of the efficacy of methods for persuading people to take the risks of artificial general intelligence (AGI) seriously,” described Anders. “We have begun a detailed analysis of AGI catastrophe scenarios.”

“A second project is intelligence amplification,” he added. “We plan to start testing novel techniques soon.”

Leverage’s Alleged Abusive Psychological Experiments

Leverage Research ran “a psychology and human behavior research program.” An old version of its website (Wayback Machine, June 2013) indicates that the program involved “the simultaneous execution of a number of difficult projects pertaining to different facets of the human mind.”

It was understood among members that they were signing up to be guinea pigs for experiments in introspection, altering one's belief structure, and experimental group dynamics. According to various allegations, it led to dissociation and fragmentation, which they have found difficult to reverse.

In 2021, Zoe Curzi published a detailed post entitled “My Experience with Leverage Research.” “I was part of Leverage/Paradigm from 2017-2019,” she wrote, and experienced “narrative warfare, gaslighting, and reality distortion.” Each day included hours of “destabilizing mental/emotional work.” She went through many months of “near constant terror at being mentally invaded.” Her psychological distress and PTSD were due to Leverage’s “debugging sessions,” which aimed to “jailbreak” the members’ minds and (through confrontations) to “yield rational thoughts.”

“Since leaving Leverage/Paradigm in 2019, I experienced a cluster of psychological, somatic, and social effects” that are typically associated with cults. She shared a cluster of almost every symptom “on the list of Post-Cult After-Effects.”

Leverage’s “Debugging” aimed to end the members’ “irrationalities”

The goal of Geoff’s psychological experiments sounded positive: mental or cognitive practices aimed at deliberate self-improvement. (Who would reject some self-improvement?) But, in practice, in a “debugging session”:

“You’d be led through a series of questions or attentional instructions with goals like working through introspective blocks, processing traumatic memories, discovering the roots of internal conflict, “back-chaining” through your impulses to the deeper motivations at play, figuring out the roots of particular powerlessness-inducing beliefs, mapping out the structure of your beliefs, or explicating irrationalities.”

“There was a hierarchical, asymmetric structure of vulnerability; underlings debugged those lower than them on the totem pole, never their superiors, and superiors did debugging with other superiors.” There was a pressure to “debug” other people. “I was repeatedly threatened with defunding if I didn’t debug people.”

You were also expected to become a “self-debugger.” “I sat in many meetings in which my progress as a ‘self-debugger’ was analyzed or diagnosed, pictographs of my mental structure put on a whiteboard.” Leverage’s members were pressured to mold their minds “in the ‘right’ direction.” The implicit goal was to eventually have no more “irrationalities” left.

In 2022, Leverage Research published a “Public Report on Inquiry Findings,” which summarizes that “approximately three to five of the around 43 members of Leverage 1.0 in question may have had very negative experiences overall, particularly towards the end of the project (around 2017-2019).”

Leverage’s inquiry report did not dispute Zoe Curzi’s recollections, as most of their interviewees “believed she was trying to honestly convey her own experience and that others had had experiences that were in some ways akin to what Zoe described.” Leverage’s own report did note that “many individuals felt some form of pressure to engage in training” and “instances of power imbalances […] likely only made this problem worse.”

Normally, such psychological research is conducted in a regulated manner under the oversight of an Institutional Review Board (IRB) and often under a strict ethical framework to prevent harm to participants. However, Leverage Research has confirmed to Protos' investigation that the research was not conducted with an IRB.

“The Only Organization with a Plan” to Save the World

A post in the LessWrong Forum, “Common knowledge about Leverage Research 1.0,” claimed that the stated purpose of Leverage was to discover more theories of human behavior and civilization by “theorizing” while building power and then literally taking over U.S. and/or global governance (the vibe was "take over the world"). The narrative within the group was that they were the only organization with a plan that could possibly work and the only real shot at saving the world; that there could be no possibility of success at one's goal of saving the world outside the organization. Many in the group felt that Geoff Anders was among the best and most powerful “theorists” in the world.

Leverage members “lived and worked in the same Leverage-run building, an apartment complex near Lake Merritt.” In 2018, Geoff Anders wrote on the Effective Altruism Forum: “On residence, new hires typically live at our main building for a few months to give them a place to land and then move out. Currently, less than 1/3 of the total staff live on-site.” Further complicating things, Geoff Anders had romantic relationships with multiple members: he dated three women employed by the Leverage ecosystem.4

Ecosystem: Paradigm Academy and Reserve

Paradigm Academy was the for-profit arm of the non-profit Leverage

Paradigm Academy was Leverage’s sister company. It was co-founded by Nevin Freeman (also co-founder of Reserve). While Leverage was a non-profit, Paradigm was its for-profit arm.

Geoff Anders described Paradigm Academy as “a startup that trains EAs” (Effective Altruists). He elaborated in the Effective Altruism Forum: “I run both Leverage and Paradigm. Leverage is a non-profit and focuses on research. Paradigm is a for-profit and focuses on training and project incubation. The people in both organizations closely coordinate […] I think this means we’re similar to MIRI/CFAR. They started with a single organization, which led to the creation of a new organization. Over time, their organizations came to be under distinct leadership, while still closely coordinating.”

Leverage Chief Operating Officer (COO) Larissa Hesketh-Rowe once described (in the Effective Altruism Forum) that “Leverage's mission was essentially conducting research. Paradigm's focus was much more on training. This means that there is some natural overlap and historically the two organisations have worked closely together. Paradigm uses Leverage’s research content in their training, and in return, they provide practical support to Leverage.”

Paradigm Academy described itself as “an education company based in Oakland, CA. We do research & development and offer workshops from our offices on Lake Merritt.”

Paradigm Academy's LinkedIn page states, “Paradigm aims to equip ambitious, altruistic people with tools to face the world's greatest challenges.” Under “Clear Thinking,” it says, “Joining Paradigm Academy is like putting your mind under a microscope – you get to see the way your thoughts work and make changes that impact your plans and actions.”

Paradigm Academy’s Head of Training, Miya Perry, gave a talk at EA Global 2016 on “How to Change Your Mind.” One of the session’s attendees described, “We learned about changing our behaviors by digging deep into our System 1 and System 2 beliefs.”

In her revelations on Leverage, Zoe Curzi pointed out that Leverage and Paradigm, “while legally separate entities, operated as an enmeshed social/cultural unit.”

The profit model: According to an old version of Paradigm Academy’s website (Wayback Machine, August 2018), “Paradigm Academy is a for-profit, investment-funded company with a venture studio model. It periodically spins off ambitious companies, and the profit from those companies is what earns a return for our investors.”

Reserve: The Crypto Startup for Raising Money

Daniel Colson, Nevin Freeman, and Miguel Morel co-founded a crypto startup, Reserve. Freeman was also a co-founder of Paradigm Academy, and Morel was there as “Entrepreneur in Residence” while Colson worked on “Talent Discovery.”

An older version of Morel’s LinkedIn profile (Wayback Machine, November 2021) listed the investors of their crypto startup: “Coinbase, Peter Thiel, Sam Altman, Digital Currency Group, Blocktower Capital, GSR.io, Rocket Fuel, NEO Global Capital, Fenbushi, PreAngel, CryptoLotus, Arrington XRP Capital, and others.”

A post in the Effective Altruism Forum on Leverage clarified that “Reserve (a cryptocurrency) was founded by ecosystem members, with a goal of raising money for Leverage/Paradigm.”

According to Zoe Curzi, ex-Leverage/Paradigm member, “the least harmful associated organization was Reserve (a crypto startup which emerged as a funding strategy for the ecosystem) […] mostly it seemed like a regular company, albeit with a weird financial agreement with Geoff.” When Geoff Anders, a shareholder in Paradigm, invested some of his time into helping Reserve, he received tokens for that.

In 2021, a former Paradigm Academy member wrote on the LessWrong Forum, “Reserve was founded with the goal of funding existential risk work. This included funding the Leverage ecosystem (since many of the members thought of themselves as working on solutions that would help with X-Risk) but would have also included other people and orgs.”

In 2022, a former Paradigm Academy COO (Cathleen Kilgallen) shared, “I think Reserve is poised to feed a large amount of money into EA/x-risk causes (through the tokens allocated to investors and other individuals in the EA & x-risk communities).”

Another Reserve co-founder, Tyler Alterman, shared that the Effective Altruism movement has led to his depression. “I felt like I’d drunk the kool-aid of some pervasive cult, one that had twisted a beautiful human desire into an internal coercion […] I quit my role at the earn-to-give startup I’d co-founded, Reserve, mostly by failing to show up. A controversial organization called Leverage Research, which was full of similarly hardcore EAs, rented me a relatively low-cost room. (Edit: It's much more accurate to say this instead: I was receiving a salary from Paradigm, a Leverage affiliate that incubated my company, Reserve, and part of that salary went to renting a room.)

I participated in a few of their experimental therapies […] the culture behind them made my problem worse: their aim was to help people remove psychological blocks to working on the world’s biggest problems.”

Leverage – The PLAN

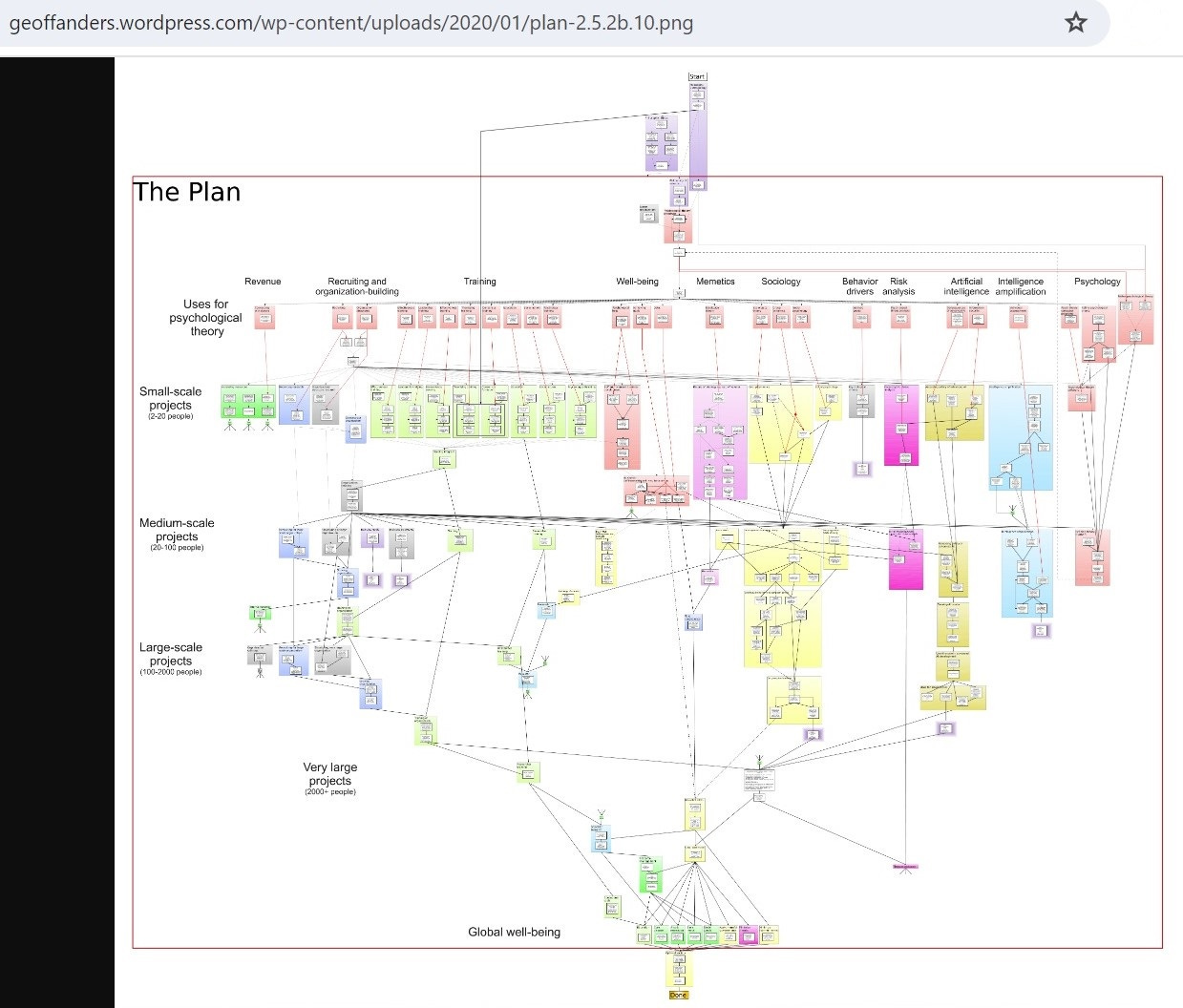

Geoff Anders launched his research group with an ambitious plan to save the world. The problem? His “Theory of Change” plan is a Pepe Silvia-looking plan for world domination.

It is available to download as a PDF and has many details. Here are the most relevant ones:

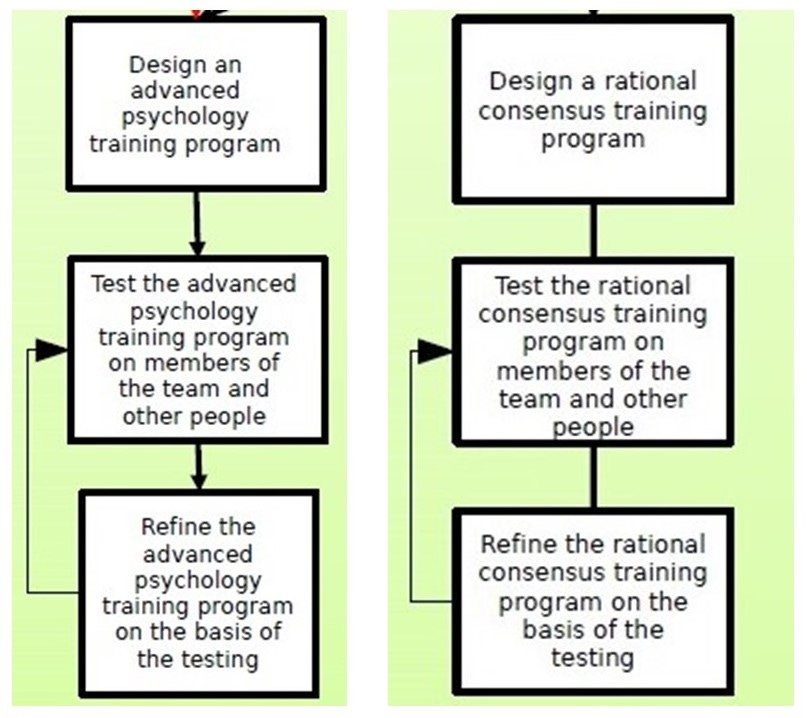

1. Planning the psychological experiments

Leverage studied the mind through introspection. One of the goals was “improving rationality.” Its psychological toolset included: 1. Belief reporting. 2. Charting – organizing the beliefs into charts that display belief dynamics. 3. Connection theory (CT) – producing “psychological changes.” Designing “interventions that the theory predicts will yield change in a person’s beliefs and actions.”

2. 100s of projects aimed at shaping the world

Geoff Anders’ proposed projects included “forming political organizations, winning elections,” and “averting catastrophes.” The goal was to “achieve global coordination by sufficiently benevolent and powerful people” and to design “optimal societies.” “Every step is meant to be beneficial and entirely non-violent.”

According to Curzi’s recollections, 1. People genuinely thought, “We were going to take over the US government.” 2. “The main mechanism through which we’d save the world was that Geoff would come up with” a theory of every domain of reality.

3. Having “enough power” to “stably guide the world”

If everything goes as planned, the members of Leverage will have “enough power to be able to stably guide the world” and prevent catastrophes. (Feel safer?)

4. Organization splitting

“When useful, split the organization into multiple independent organizations with the same goal.” It's exactly what Geoff Anders did with Leverage Research and Paradigm Academy. It’s also what Eliezer Yudkowsky did with MIRI (Machine Intelligence Research Institute) and CFAR (Center for Applied Rationality).

5. Internal funding

“Have members of the organization help financially support the organization.” Indeed, its members launched “Reserve” to help fund Leverage Research and Paradigm Academy.

From “Leverage 1.0” to “Leverage 2.0”

In June 2019, the Leverage ecosystem was disbanded. The various projects and organizations involved split to operate independently.

To clean up the mess, “some of the key things” Leverage “ended up removing were:

content on Connection Theory (CT).

a long-term plan document.

a version of our website that was very focused on ‘world-saving.’”

The group rebranded to “Leverage 2.0” and shifted its focus.

Now, it is working on other projects, like bottlenecks in science and technology and the history of science (e.g., the history of electricity).

Effective Altruists Discuss “Leverage is a Cult”

Many of the “Characteristics Associated with Cults” (also referred to as “high-demand groups” or “high-control groups”) seem to fit “Leverage 1.0”:

Mind-altering practices (the “Debugging”); dictating how members should live, and shame and guilt; elitism (“The Plan”); socializing within the group (the apartment complex); loaded language (e.g., EA Glossary and Geoff Anders’ theories); radical beliefs (the only group with a plan to save the world).

There were several discussions in the Effective Altruism Forum and LessWrong Forum regarding Leverage’s cult-like behavior (especially between 2017 and 2019).

“The secrecy + ‘charting/debugging’ + ‘the only organization with a plan that could possibly work, and the only real shot at saving the world’ is (if true) adequate to label the organization a cult (in the colloquial sense). This are all ideas/systems/technologies that are consistently and systematically used by manipulative organizations to break a person's ability to think straight. Any two of these might be okay if used extremely carefully (psychiatry uses secrecy + debugging), but having all three brings it solidly into cult territory.”

Another forum member compared the “Belief Reporting” and debugging to the “auditing” in Scientology, “except instead of using an e-meter they rely on nerds being pathologically honest.”

“I wonder if some early red flags with Leverage (living together with your superiors who also did belief reporting sessions with you, believing Geoff's theories were the word of God, etc.) were explicitly laughed off as ‘Oh, haha, we know we're not a cult, so we can chuckle about our resemblances to cults’.”

“It's been helpful for me to think of three signs of a cult:

Transformational experiences that can only be gotten through The Way.

Leader has Ring of Power that gives access to The Way.5

Clear In-Group vs. Out-Group dynamics to achieve The Way.

Leverage 1.0 seems to have all three:

Transformational experiences through charting and bodywork.

Geoff as Powerful Theorist.

In-group with Leverage as ‘only powerful group.’

I've never met Geoff, but I have heard a few negative stories about emotional ~trauma from some folks there.”

The word “cult” refers to “a well-defined set of practices used to break people's ability to think rationally. Leverage does not deny using these practices. To the contrary, it appears flagrantly indifferent to the abuse potential.”

“I have spoken with 4 former interns/staff who pointed out that Leverage Research (and its affiliated organizations) resembles a cult according to the criteria listed here.”

It linked to an article entitled “How to Identify a Dangerous Cult.” Among the criteria:

Narcissistic personality: Dangerous cult leaders usually hold grandiose notions of their place in the world. Claims of special powers: “If a leader claims he’s smarter, holier, and more pure than everyone else, think twice about signing up.”

Isolation: Separating group members from family and friends forces them to rely on fellow cultists for all emotional needs. This is why many cults are based around communal living.

Ability to read others: Cult leaders can size you up, realize your weaknesses, and get to your buttons. Confession: Dangerous cults are notorious for making members confess to past sins, often publicly, and then using those confessions against them.

Language control: Special jargon, new words to describe complicated abstractions. This makes conversations with outsiders tedious.6

After the testimonies about Leverage’s alleged abusive psychological experiments became public, CEA was criticized for not disclosing that its “Pareto Fellowship” was run by Leverage. “Yep, I think CEA has in the past straightforwardly misrepresented […] and sometimes even lied in order to not mention Leverage's history with Effective Altruism,” wrote Oliver Habryka. “I think this was bad and continues to be bad.”

Oliver Habryka’s exploration into “How do you end up with groups of people who do take pretty crazy beliefs extremely seriously” also included the assertion that “The people who feel most insecure tend to be driven to the most extreme actions, which is borne out in a bunch of cult situations.” In the end, he added: “There are also a ton of other factors that I haven’t talked about (willingness to do crazy mental experiments, contrarianism causing active distaste for certain forms of common sense, some people using a bunch of drugs, high price of Bay Area housing, messed up gender-ratio and some associated dynamics, and many more things).”

John Wentworth, an independent AI alignment researcher who is partly funded by LTFF, replied to Habryka that his default model is that “some people are very predisposed to craziness spirals.” For example, “’AI is likely to kill us all’ is definitely a thing in response to which one can fall into a spiral-of-craziness, so we naturally end up ‘attracting’ a bunch of people who are behaviorally well-described as ‘looking for something to go crazy about.’” Wentworth added that “other people will respond to basically-the-same stimuli by just… choosing to not go crazy.” “On that model,” he concluded, “insofar as EAs and rationalists sometimes turn crazy, it’s mostly a selection effect. ‘AI is likely to kill us all’ is a kind of metaphorical flypaper for people predisposed to craziness spirals.”

Former Members Who are Active in the AI X-Risk Ecosystem

Aside from the leading figures mentioned thus far, another Reserve employee was Jeffrey Ladish. He was a Security Researcher at Paradigm Academy and the Chief Information Security Officer at Reserve. He moved on to Anthropic, then Palisade Research and the Center for Humane Technology, and he sits on the Center for AI Policy (CAIP) board.

In April 2024, CAIP released a draft bill, a “framework for regulating advanced AI systems.” This “Model Legislation” suggested establishing a strict licensing regime, clamping down on open-source models, and imposing civil and criminal liability on developers. This proposal was described as “the most authoritarian piece of tech legislation.”

Another interesting case study is Daniel Colson.

In August 2016, Daniel Colson was a speaker at the Effective Altruism Global conference. He described himself as “A sociology researcher at Leverage Research and co-director of EA Build, a CEA project to create highly effective EA student groups at every college.”

Colson’s workshop, “How to Save the World While Still at School: EA Student Chapters,” was “particularly aimed at students and those interested in EA movement building.”

According to a document by the Centre for Effective Altruism, “EA Build” was their project for “working on building a community” and “working on student and local groups.”

Daniel Colson’s LinkedIn indicates he was part of the sociological research group (NOT the more controversial psychological research group).

According to his recollection, Daniel Colson became interested in AI in 2014, thanks to Nick Bostrom’s book “Superintelligence.”

Nine years later, his organization, the AI Policy Institute (AIPI), said in a press release that “the threat to humankind is alarming.” “While much of the public discussion has been oriented around AI’s potential to take away jobs, AIPI will be focused on centering the importance of policies designed to prevent catastrophic outcomes and mitigate the risk of extinction.”

Daniel Colson founded AIPI because, in his words, public polling can “shift the narrative in favor of decisive government action.” “The AI space lacks an organization to both gauge and shape public opinion on the issue – as well as to recommend legislation on the matter – and AIPI will fill that role.”

Colson’s agenda: “Making it illegal to build computing clusters above a certain processing power.” In March 2024, Nik Samoylov, the Campaign for AI Safety founder and advisor to AIPI, shared Colson’s “Policy Scorecards.” This table showcased the desired goal: Preventing or Delaying Superintelligent AI.

A Politico article on AIPI’s launch mentioned one of Daniel Colson’s advisors, who is unmentioned on AIPI’s website. Progressive pollster Sean McElwee, an expert in using polling to shape public opinion—best known for his relationships with the Biden White House and Sam Bankman-Fried—advises Colson behind the scenes.

The Politico reporter also mentioned Daniel Colson’s ties to the Effective Altruism organization “Rethink Priorities” and that Colson “distances himself from the movement.” Why? Because Effective Altruists’ vision for containing AI is too focused on technological fixes while ignoring the potential for government regulation, said Colson. “That motivated the launch of AIPI.” Regarding his funding, Colson only said it came “from a handful of individual donors in the tech and finance worlds” but declined to name them.

Colson called his work “public polling” when the more accurate description is “push polling.” Daniel Jeffries, CEO of Kentaurus AI, explained on Twitter that “Push polling” is when people deliberately ask a polling question to get the answer they want. Basically, you ask leading or loaded questions designed to sway the respondents’ views in a specific desired direction, while concealing important information that would change their answers. Lauren Wagner, an advisor to the Data & Trust Alliance and a term member at the Council on Foreign Relations, also pointed out that AIPI’s polls are not valid. A social scientist at NYU specializing in tech policy evaluated AIPI’s survey questions and said: “The wording is wild... also, polling on low-salience issues is notoriously uninformative. ‘What do you think about this topic that we’ve introduced to you in the question’ doesn't really provide useful information, and often leads to significant swings in polling over time.”

Nonetheless, AIPI’s ill-written polls became very influential. They are frequently quoted in Politico, Axios, TIME magazine, and The Hill. Accordingly, they are being used by politicians to justify their bills. The latest example is Senator Scott Wiener (CA), who pushed the controversial SB-1047 bill with AIPI’s biased results.

Hopefully, from now on, people will look at AIPI’s efforts to “shift the narrative” with a critical eye.

Epilogue

What has been done thus far:

The Good: The EA community’s discourse around its darkest artifacts is lively and takes place in public forums.

The fact that the movement distanced itself from some of its “high-control groups” (Leverage, Zizians, Vassarites) is also encouraging.

These are essential steps toward improvement.

The Bad: The community still generates “craziness spirals,” in part thanks to its “AI existential risk” obsession.

Its discourse does not fully address the underlying causes that fuel such sub-groups.

The Ugly: There are also allegations of sexual harassment and abuse that are not in the scope of this piece.7

What should be done moving forward?

Whistleblowers: We need more testimonies and more accountability from this movement’s leaders.

Scrutiny: The effects of this movement’s beliefs and practices across the AI industry and academia need to be examined more closely. The damages are real.

Endnotes

1. For example, the Zizians/Jack LaSota or Vassarites/Michael Vassar. It’s quite a rabbit hole.

2. The definitions are taken from The TESCREAL bundle of ideologies (Table 1).

3. In the Bloomberg article on how the EA obsession with killer rogue AI “helps bury bad behavior,” Larissa Hesketh-Rowe (Leverage 2.0 team), the former CEO of the Centre for Effective Altruism, said that when high-status people in the community said “AI risk” was a vital research area, others deferred. “No one thinks it explicitly, but you’ll be drawn to agree with the people who, if you agree with them, you’ll be in the cool kids group,” she says. “If you didn’t get it, you weren’t smart enough, or you weren’t good enough.” “Hesketh-Rowe, who left her job [as CEO of CEA] in 2019, has since become disillusioned with EA and believes the community is engaged in a kind of herd mentality.”

4. “Our Executive Director had three long-term, consensual relationships with women employed by Leverage Research or affiliated organizations during their history,” admitted Leverage’s COO.

5. What mistakes has the AI safety movement made? “Hero worship”: “Some are too willing to adopt the views of a small group of elites who lead the movement (like Yudkowsky, Christiano and Bostrom).”

6. What mistakes has the AI safety movement made? Using too much jargony and sci-fi language: “Esoteric phrases like ‘p(doom),’ ‘x-risk’ or ‘HPMOR’ can be off-putting to outsiders and a barrier to newcomers, and give culty vibes.” This insular behavior was not by mistake but on purpose: In order to “avoid too many dumb people… too many stupid or irrational newbies flooding into AI safety.”

7. After Zoe Curzi’s testimony about Leverage, Jessica Taylor, a former CFAR (Center for Applied Rationality) employee, posted about her traumatic experience: “My Experience at and around MIRI and CFAR.” “Most of what was considered bad about the events at Leverage Research also happened around MIRI/CFAR, around the same time period (2017-2019),” she wrote. “Zoe begins by listing a number of trauma symptoms she experienced. I have, personally, experienced most of those on the list of cult after-effects in 2017, even before I had a psychotic break.”

A Bloomberg article, “The Real-Life Consequences of Silicon Valley’s AI Obsession” (March 2023), detailed her and other former members’ experiences with the practice of “jailbreaking” their minds. There were also allegations of sexual harassment and abuse. “Women who reported sexual abuse, either to the police or community mediators, say they were branded as trouble and ostracized while the men were protected.” A similar article was published in TIME magazine (February 2023): “Effective Altruism Promises to Do Good Better. These Women Say It Has a Toxic Culture of Sexual Harassment and Abuse.” “This story is based on interviews with more than 30 current and former effective altruists and people who live among them.”

Note: For clarity, “Rationalism” has been replaced with “Rationality.”
