The “AI Existential Risk” Industrial Complex

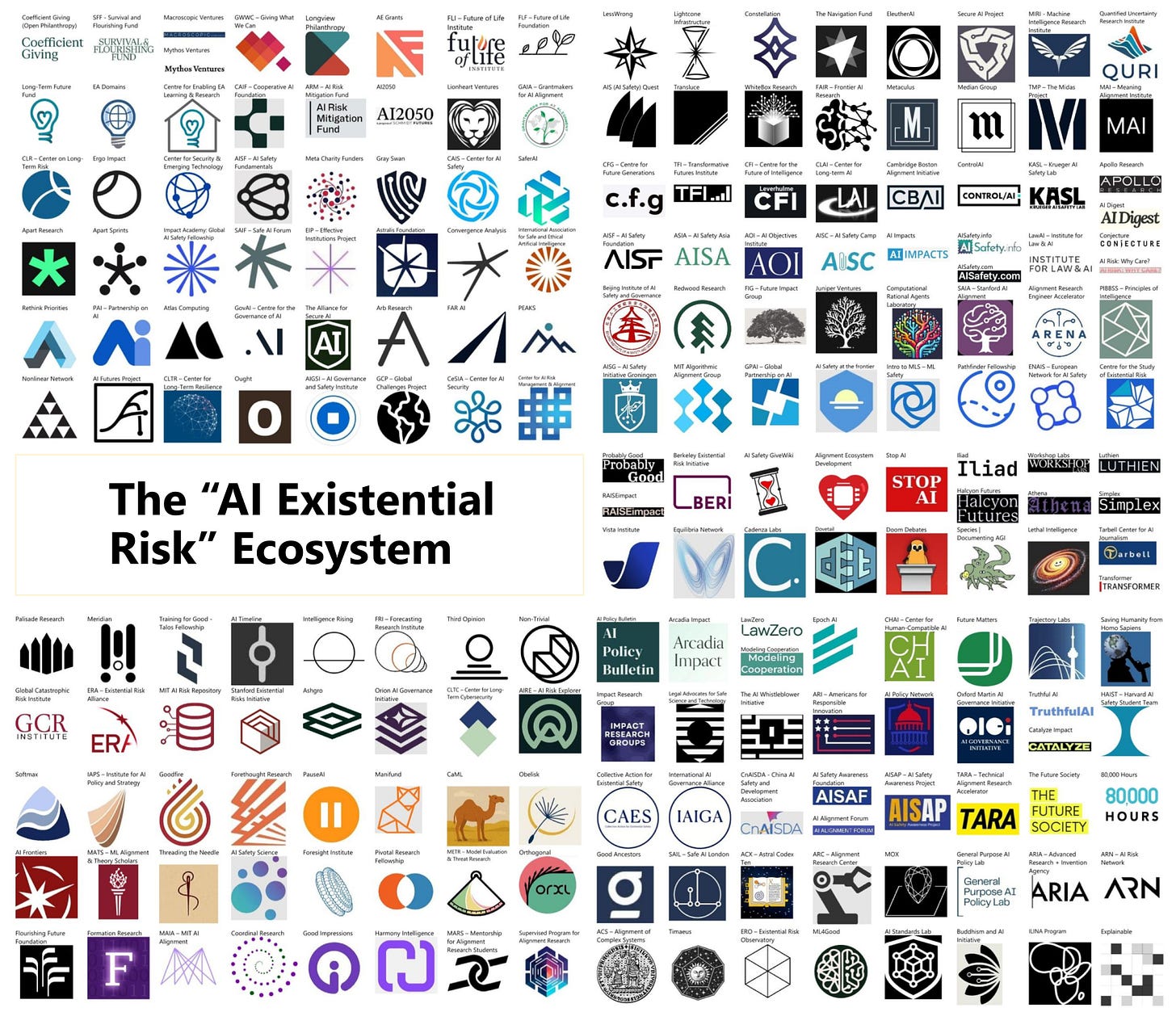

This is your guide to the growing “AI Existential Risk” ecosystem.

The “AI Existential Risk” Ideology

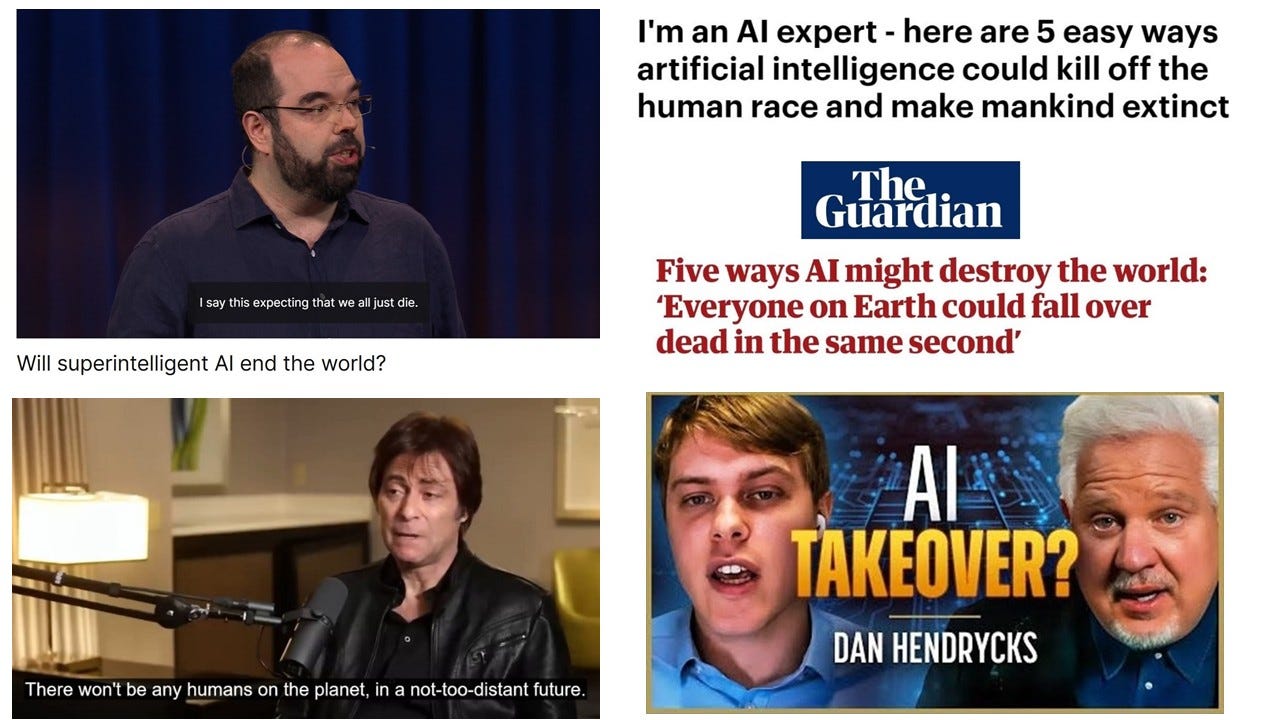

ChatGPT’s launch in 2022 sparked a wave of AI doomerism. The leading voices in the “AI will kill us all” camp have confidently predicted that the END of humanity is near. “We believe humans will be wiped out by Godlike AI.” Though “with enough money and effort, we might be able to save humanity from the impending AI apocalypse.” They talked about an “AI takeover” during their media and policymaking takeover. Since then, we have been in an unprecedented AI panic.

The “AI Existential Risk” Ecosystem’s Funding

Their “AI Existential Risk” ideology had the financial backing of over a billion dollars from a few Effective Altruism billionaires, namely Dustin Moskovitz, Jaan Tallinn, Vitalik Buterin, and Sam Bankman-Fried (yes, the convicted felon).

Moskovitz’s Open Philanthropy (rebranded as Coefficient Giving) is by far the largest donor1 to most of the “AI Existential Risk” organizations.

The “AI Existential Risk” Lobbying

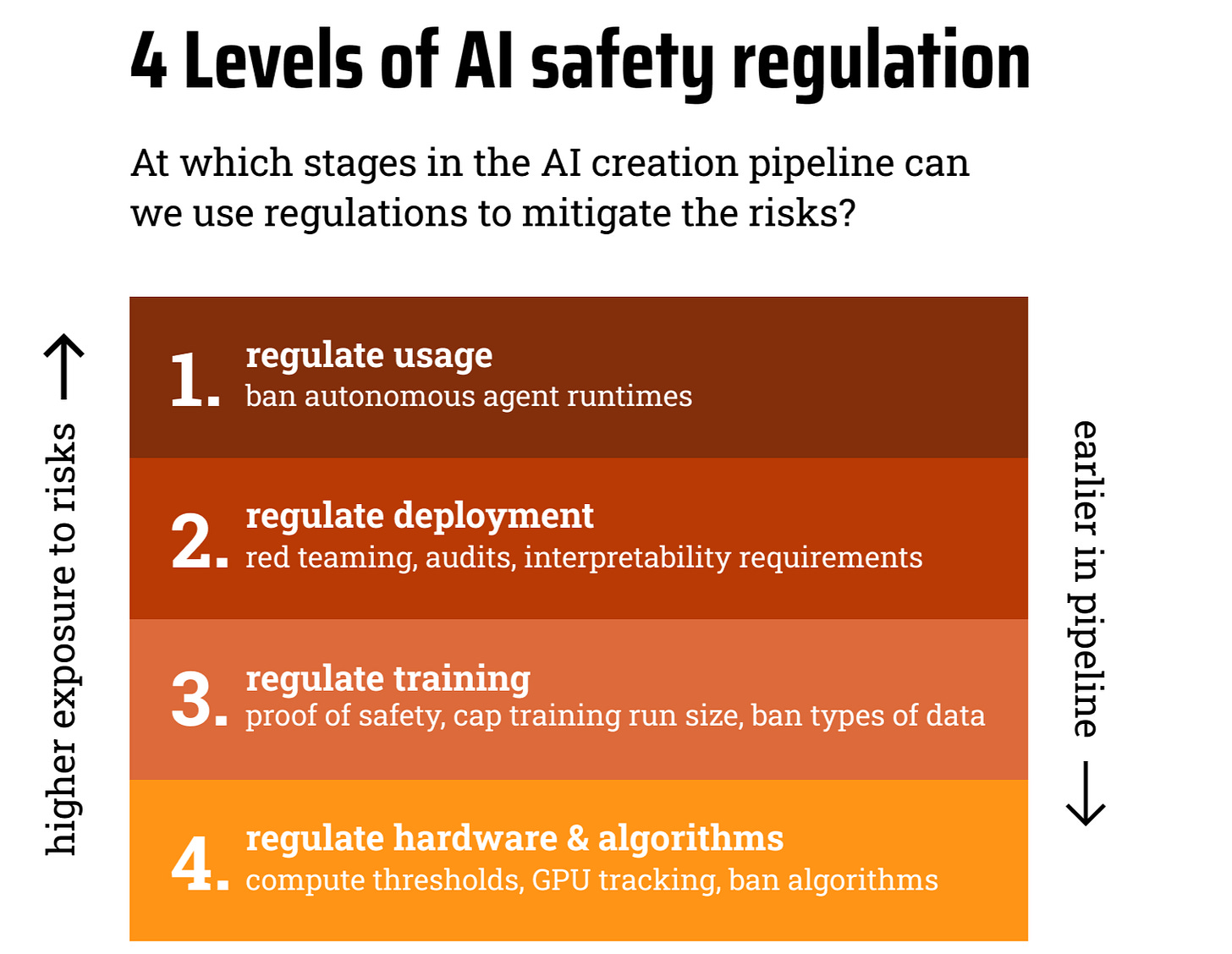

Nowadays, the “AI Existential Risk” ecosystem encompasses hundreds of organizations. Many advocate extreme authoritarian measures to stop or pause AI. These measures include “requiring registration and verifying location of hardware,” “a strict licensing regime, clamp down on open-source models, and impose civil and criminal liability on developers.”

Recently, Berkeley’s “Machine Intelligence Research Institute” (MIRI) proposed to:

· restrict machine learning and artificial intelligence research

· prohibit all training runs above 10^24 FLOP (many such models already exist)

· monitor chip clusters above 16 H100s (Yudkowsky & Soares’ “magic number” was 8).

MIRI acknowledges that all its surveillance mechanisms “enable authoritarianism.”

ControlAI even proposed a 20-year pause, because the “default” is human extinction by Godlike AI, and “two decades provide the minimum time frame to construct our defenses.”

Staying informed about this growing “AI existential risk” ecosystem is important. So, the information below aims to familiarize you with the various players involved.

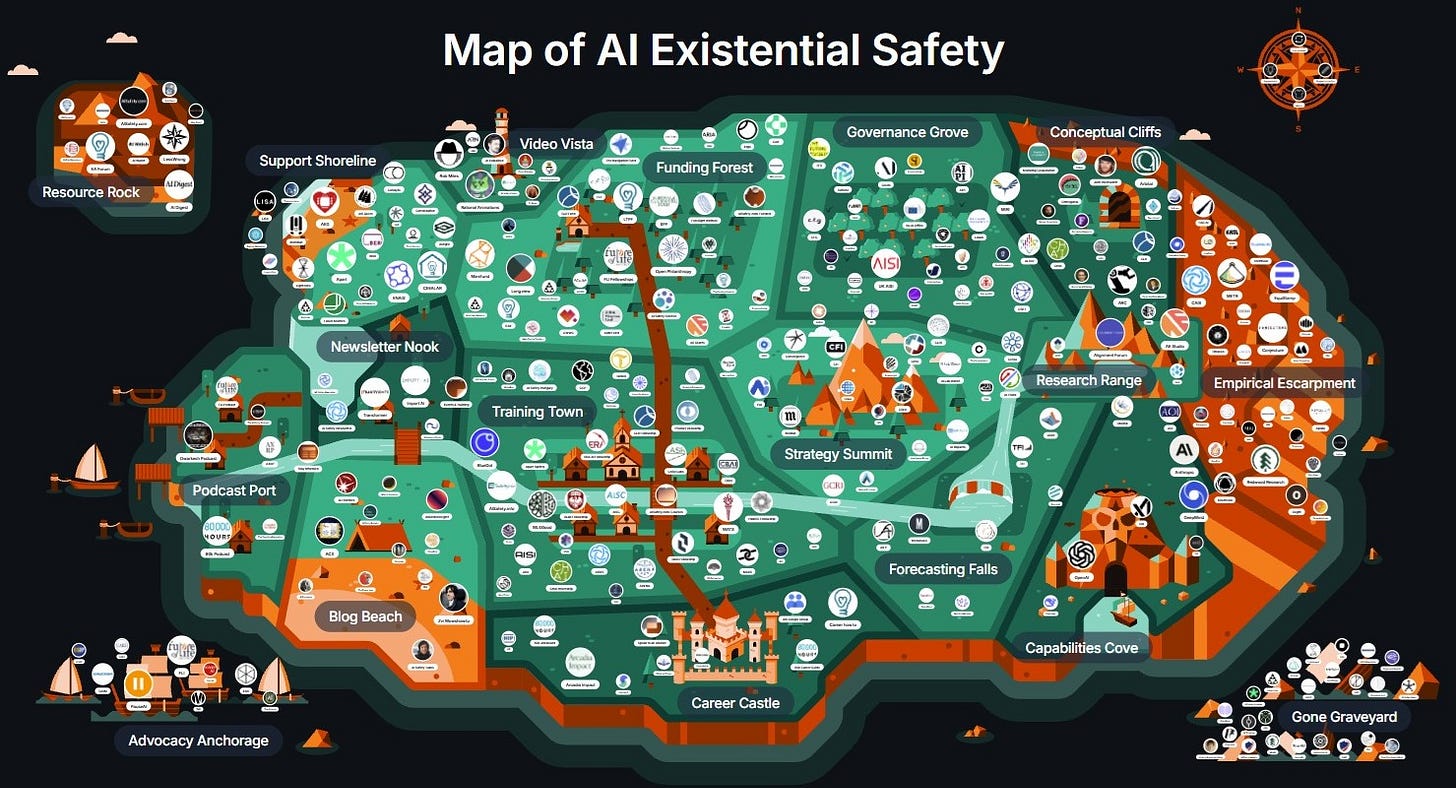

The “AI Existential Risk” Map

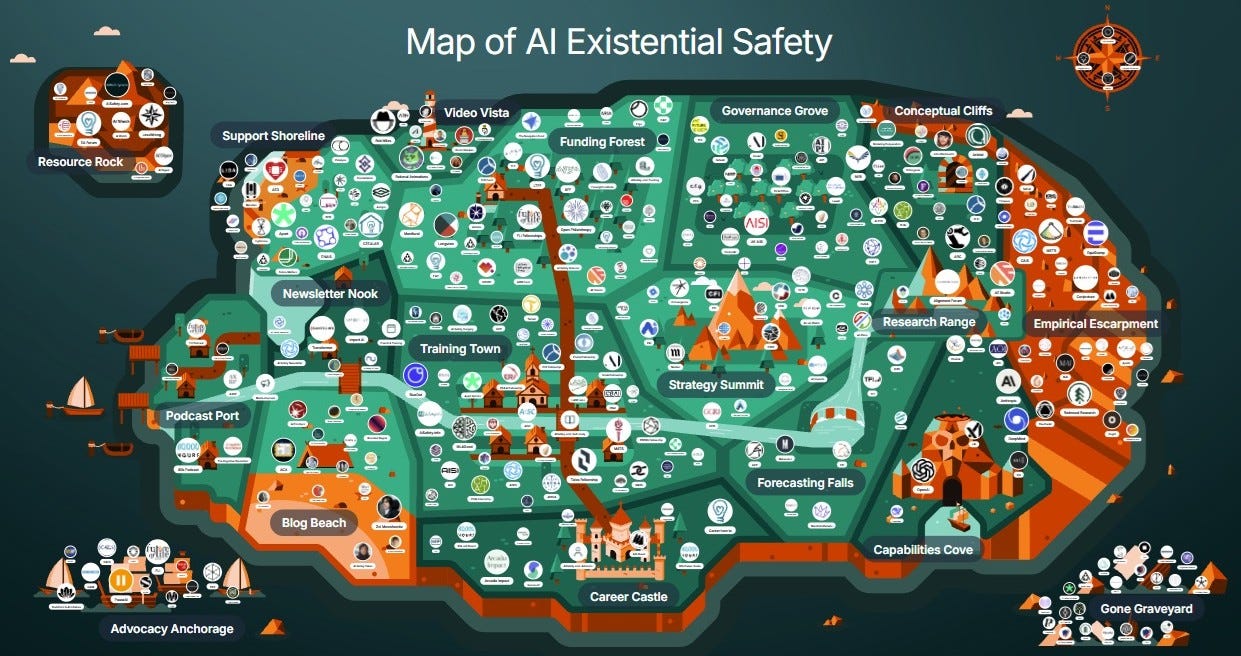

The Map of AI Existential Safety was created by effective altruists so that the epistemic community of AI safety students/researchers/lobbyists could become more acquainted with all potential funding, resources, organizations, and projects.

It is a visual overview of the key organizations, programs, and projects operating in the “AI existential risk” ecosystem.

Since there are so many, the accompanying spreadsheet, with more than 300 rows, really comes in handy.

To make sense of this ecosystem’s expansion, the map offers the following categories: Funding; Governance; Conceptual Research; Capabilities Research; Training and Education; Research Support; Career Support; Resources; Media; Public Outreach/Advocacy; and Blogs/Newsletters.

The criteria for inclusion in the map: “An entry must have reducing AI existential risk as a goal; have produced at least one output to that end; must additionally still be active and expected to produce more outputs.”

I first published this map’s details in the “Ultimate Guide to ‘AI Existential Risk’ Ecosystem” on December 5, 2023. Afterward, I updated it in “Panic-as-a-Business is Expanding” on April 15, 2024.

Since then, 50 new entries have been added to this map.

August 2025 update: Since January, 62 new entries have been added to this map.

November 2025 update: Since August, 36 new entries have been added to this map.

December 2025 update: Since November, 15 new entries have been added to this map.

February 2026 update: Since December 2025, 12 new entries have been added to this map.

Please note:

Each item includes a link and a description (some have endnotes with additional data). The descriptions are from the map’s website.

Not all entries represent the ideology summarized above. There are different levels of AI safety work. This map encompasses all types.

The Key Takeaway from the “AI Existential Risk” Map

One conclusion can be drawn from reviewing the hundreds of links: It’s an inflated ecosystem.

There’s a great deal of redundancy:

· The same names/acronyms/logos (with only minor changes)

· The same extreme talking points

· The same group of people, just with different titles

· The same funding source (primarily Coefficient Giving, formerly Open Philanthropy).

This is clearly not a grassroots, bottom-up movement. It is a well-orchestrated top-down movement.

The media and politicians need to open their eyes to this reality.

Funding

Open Philanthropy [rebranded as Coefficient Giving]

The largest funder in the existential risk space.

SFF – Survival and Flourishing Fund2

The second largest funder in AI safety.

Making grants addressing global catastrophic risks, promoting longtermism, and otherwise increasing the likelihood that future generations will flourish.

Community of donors who have pledged to donate a significant portion of their income.

FLI – Future of Life Institute – Grantmaking Work

Fellowships include PhD and postdoctoral fellowships in technical AI safety, and a PhD fellowship in US-China AI governance.

Grant programs include “Request for proposals on religious projects tackling the challenges posed by the AGI race,” “call for proposed designs for global institutions governing AI,” and policy advocacy groups like Encode and the Center for Humane Technology.

Longview Philanthropy [formerly Effective Giving UK]

Devises and executes bespoke giving strategies for major donors.

Regranting and impact certificates platform for AI safety and other cause areas.

CLR – Center on Long-Term Risk – Fund

Financial support for projects focused on reducing current and future s-risks.

GiveWiki [formerly Impact Markets]

Crowd-sourced charity evaluator, helping people find or promote the best AI safety projects.

EAIF – Effective Altruism Infrastructure Fund

Aiming to increase the impact of effective altruism projects by increasing their access to talent, capital, and knowledge.

Philanthropic initiative supporting researchers working on key opportunities and hard problems that are critical to get right for society to benefit from AI. Proposals by invite only.

SHfHS – Saving Humanity from Homo Sapiens

Small organization with a long history of funding existential risk reduction.

Grant program run by Nonlinear, providing funding to those raising awareness about AI risks or advocating for a pause in AI development.

Funder network for AI existential risk reduction. Applications are shared with donors, who then reach out if they are interested.

GAIA – Grantmakers for AI Alignment

Joinable donor circle for people earning to give or allocating funds towards reducing AI existential risk.

Macroscopic Ventures [formerly Polaris Ventures and the Center for Emerging Risk Research]

Swiss nonprofit making grants and investments focused on reducing long-term suffering.

CAIF – Cooperative AI Foundation

Charity foundation backed by a large philanthropic commitment supporting research into improving cooperative intelligence of advanced AI.

Offers grants to high-impact organizations and projects that are taking bold action and making significant changes.

Network of donors funding charitable projects that work one level removed from direct impact.

VC firm investing in ethical founders developing transformative technologies that have the potential to impact humanity on a meaningful scale.

Aiming to reduce catastrophic risks from advanced AI through grants towards technical research, policy, and training programs for new researchers.

Empowering innovators and scientists to increase human agency by creating the next generation of responsible AI. Providing support, resources, and open-source software.

Helping major donors find, fund, and scale the most promising solutions to the world’s most pressing problems.

Funding projects in 1) automating research and forecasting, 2) security technologies, 3) neurotech, and 4) safe multipolar human AI scenarios.

Aiming to empower founders building a radically better world with safe AI systems by investing in ambitious teams with defensible strategies that can scale to post-AGI.

FLF – Future of Life Foundation3

Accelerator aiming to steer transformative technology towards benefiting life and away from extreme large-scale risks.

Funder housing the science-focused philanthropic efforts of Eric and Wendy Schmidt, which moves large amounts of funding towards AI safety via its AI institute.

ARIA – Advanced Research + Invention Agency

UK government R&D funding agency built to unlock scientific and technological breakthroughs that benefit everyone.

Comprehensive and up-to-date directory of sources of financial support for AI safety projects, ranging from grant programs to venture capitalists.

Regularly-updated guide on how to donate most effectively with the funding and time you have available.

New AI safety funding initiative with $25M annual giving, aiming to unite funders, domain experts, and entrepreneurs to launch and scale ambitious initiatives.

13-week program run by Fifty Years helping scientists and engineers build startups tackling world-scale problems.

VC firm investing in startups helping make AI safe, secure, and beneficial for humanity. Focused on working with exceptional founders at the earliest stages.

AISTOF – AI Safety Tactical Opportunities Fund

Pooled multi-donor fund structured to be fast and rapidly capture emerging opportunities, including in governance, technical alignment, and evaluations. Managed by JueYan Zhang.

Governance

FLI – Future of Life Institute4

Outreach, policy advocacy, grantmaking, and event organization for existential risk reduction.

GovAI – Centre for the Governance of AI

AI governance research group at Oxford, producing research tailored towards decision-makers and running career development programs.

CLR – Center on Long-Term Risk [formerly the EA Foundation]

Research, grants, and community-building around AI safety, focused on conflict scenarios as well as technical and philosophical aspects of cooperation.

CSET – Center for Security and Emerging Technology5

Georgetown University think tank providing decision-makers with data-driven analysis on the security implications of emerging technologies.

Channeling public concern into effective regulation by engaging with policymakers, media, and the public to ensure AI is developed responsibly and transparently.

CLTR – Center for Long-Term Resilience

Think tank aiming to transform global resilience to extreme risks by improving relevant governance, processes, and decision-making.

CFG – Centre for Future Generations7 [formerly ICFG – International Center for Future Generations]

Brussels think tank focused on helping governments anticipate and responsibly govern the societal impacts of rapid technological change.

Play-money prediction markets on many topics, including AI safety.

TFI – Transformative Futures Institute

Exploring the use of underutilized foresight methods and tools in order to better anticipate societal-scale risks from AI.

Forecasting platform for many topics, including AI.

FRI – Forecasting Research Institute

Advancing the science of forecasting for the public good by working with policymakers and nonprofits to design practical forecasting tools, and test them in large experiments.

QURI – Quantified Uncertainty Research Institute

Advancing forecasting and epistemics to improve the long-term future of humanity. Writing research and software.

Answering decision-relevant questions about the future of AI, including through research, a wiki, and expert surveys. Run by MIRI.

Research institute investigating key trends and questions that will shape the trajectory and governance of AI.

Research and implementation group identifying pressing opportunities to make the world better.

Building a foundational series of sociotechnical reports on key AI scenarios and governance recommendations, and conducting AI awareness efforts to inform the general public.

Defining, designing, and deploying projects that address institutional barriers in AI governance.

GCRI – Global Catastrophic Risk Institute

Small think tank developing solutions for reducing existential risk by leveraging both scholarship and the demands of real-world decision-making.

CSER – Centre for the Study of Existential Risk

Interdisciplinary research centre at the University of Cambridge doing diverse existential risk research.

CFI – Centre for the Future of Intelligence

Interdisciplinary research centre at the University of Cambridge exploring the nature, ethics, and impact of AI.

GPI – Global Priorities Institute

University of Oxford research center conducting foundational research to inform the decision-making of individuals and institutions seeking to do as much good as possible.

Research nonprofit working on models of past and future progress in AI, intelligence enhancement, and sociology related to existential risks.

Nonpartisan research organization developing policy and conducting advocacy to mitigate catastrophic risks from AI.

Provides strategy consulting services to clients trying to advance AI safety through policy, politics, coalitions, or social movements.

Convening academic, civil society, industry, and media organizations to create solutions so that AI advances positive outcomes for people and society.

IAPS – Institute for AI Policy and Strategy

Research and field-building organization focusing on policy and standards, compute governance, and international governance and China.

LawAI – Institute for Law & AI [formerly, LPP – Legal Priorities Project]

Think tank researching and advising on the legal challenges posed by AI.

AIGS Canada – AI Governance & Safety Canada

Nonpartisan not-for-profit and community of people across Canada, working to ensure that advanced AI is safe and beneficial for all.

CARMA – Center for AI Risk Management & Alignment11

Conducting interdisciplinary research supporting global AI risk management. Also produces policy and technical research.

CLAI – Center for Long-term AI

Interdisciplinary research organization based in China exploring contemporary and long-term impacts of AI on society and ecology.

Platform for connecting junior researchers and seasoned civil servants from Southeast Asia with senior AI safety researchers from developed countries.

CeSIA – Center for AI Security

French AI safety nonprofit dedicated to education, research, and advocacy.

Promoting informed policymaking to navigate emerging challenges from AI through research, knowledge-sharing, and skill building.

A series of proposals developed by ControlAI intended for action by policymakers in order for humanity to survive artificial superintelligence.

AIGSI – AI Governance and Safety Institute

Aiming to improve institutional response to existential risk from future AI systems by conducting research and outreach, and developing educational materials.

IASEAI – International Association for Safe and Ethical Artificial Intelligence

Nonprofit aiming to ensure that AI systems are guaranteed to operate safely and ethically; and to shape policy, promote research, and build understanding and community around this goal.

Beijing AISI – Beijing Institute of AI Safety and Governance

R&D institution dedicated to developing AI safety and governance frameworks to provide a safe foundation for AI innovation and applications.

Nonprofit fighting to keep humanity in control of AI by developing policy and conducting public outreach.

Geneva-based think tank working to foster international cooperation in mitigating catastrophic risks from AI.

Small research nonprofit focused on how to navigate the transition to a world with superintelligent AI systems.

Effective Institutions Project

Advisory and research organization focused on improving the way institutions make decisions on critical global challenges.

CAES – Collective Action for Existential Safety

Nonprofit aiming to catalyze collective effort toward reducing existential risk, including through an extensive action list for individuals, organizations, and nations.

IAIGA – International AI Governance Alliance

Nonprofit dedicated to establishing an independent global organization capable of effectively mitigating extinction risks from AI and fairly distributing its economic benefits to all. IAIGA is an initiative of Collective Action for Existential Safety (CAES).

Small research group forecasting the future of AI. Created “AI 2027,” a detailed forecast scenario projecting the development of artificial superintelligence.

Interdisciplinary group aiming to identify and understand trends in computing that create opportunities for (or pose risks to) our ability to sustain economic growth.

ARI – Americans for Responsible Innovation

Bipartisan nonprofit seeking to address a broad range of policy issues raised by AI, including current harms, national security concerns, and emerging risks.

CnAISDA – China AI Safety & Development Association

China’s self-described counterpart to the AI safety institutes of other countries. Its primary function is to represent China in international AI conversations.

Australian lobbying organization focused on AI safety policy and other AI-related issues, including cybersecurity and biosecurity. Also runs Australians for AI Safety.

Research nonprofit developing solutions to public policy challenges to help make communities throughout the world safer and more secure, healthier, and more prosperous.

Nonprofit developing and advocating for AI safety principles to be put into practice in US state and federal legislatures.

AIPN advocates for targeted policies that address the unique challenges posed by rapidly advancing AI systems. AIPN engages with members of Congress, congressional staff, federal officials, and media to promote informed, proactive policymaking that prepares America for transformative AI capabilities.

A collaborative effort to bring together Buddhist communities, technologists, and contemplative researchers worldwide to help shape the future of artificial intelligence.

Politically and geographically neutral nonprofit converting insights from the existing literature into ready-made text for AI safety standards.

AIWI – The AI Whistleblower Initiative

Helps AI insiders raise concerns about potential risks and misbehavior in AI development by providing whistleblowing services, expert guidance, and secure communication tools.

Oxford Martin AIGI – AI Governance Initiative

Research center housed in the Martin School of the University of Oxford researching AI governance from both technical and policy perspectives.

GPAI – General Purpose AI Policy Lab

Producing research that helps French institutional actors address the security and international coordination requirements posed by the development of general-purpose AI.

CLAIR – Center for Law & AI Risk

Supporting research at the intersection of law and AI safety, aiming to build a field of legal scholars working to understand how law can reduce catastrophic risks from advanced AI.

Pushing academic and policy discourse forward through research and engagement on horizon scanning, decision making under deep uncertainty, and citizens' assemblies.

Conceptual Research

MIRI – Machine Intelligence Research Institute

The original AI safety technical research organization, co-founded by Eliezer Yudkowsky. Now focusing on policy and public outreach.

ARC – Alignment Research Center

Research organization doing theoretical research focusing on the Eliciting Latent Knowledge (ELK) problem.

A strategy for accelerating alignment research by using human-in-the-loop systems which empower human agency rather than outsource it.

Formal alignment organization led by Tamsin Leake, focused on agent foundations.

ALTER – Association for Long-Term Existence and Resilience

Israeli research and advocacy nonprofit working to investigate, demonstrate, and foster useful ways to safeguard and improve the future of humanity.

Independent researcher working on selection theorems, abstraction, and agency.

CHAI – Center for Human-Compatible AI

Developing the conceptual and technical wherewithal to reorient the general thrust of AI research towards provably beneficial systems. Led by Stuart Russell at UC Berkeley.

Independent researchers trying to find reward functions which reliably instill certain values in agents.

ACS – Alignment of Complex Systems

Studying questions about multi-agent systems composed of humans and advanced AI. Based at Charles University, Prague.

Assistant professor at MIT working on agent alignment.

Author of two books on AI safety, and Professor at the University of Louisville with a background in cybersecurity.

MIT Algorithmic Alignment Group

Working towards better conceptual understanding, algorithmic techniques, and policies to make AI safer and more socially beneficial.

Large team taking a 'Neglected Approaches' approach to alignment, tackling the problem from multiple, often overlooked angles in both technical and policy domains.

Argentine nonprofit conducting both theoretical and empirical research to advance frontier AI safety as a sociotechnical challenge.

Team of researchers pursuing an exploratory, neuroscience-informed approach to engineering AGI.

Nonprofit aiming to reduce lock-in risks by researching fundamental lock-in dynamics and power concentration.

Emmett Shear’s research organization dedicated to developing a theory of “organic alignment” to foster adaptive, non-hierarchical cooperation between humans and digital agents.

CORAL – Computational Rational Agents Laboratory

Research group studying agent foundations to give us the mathematical tools to align the objectives of an AI with human values.

Steve Byrnes’s Brain-Like AGI Safety

Brain-inspired framework using insights from neuroscience and model-based reinforcement learning to guide the design of aligned AGI systems.

Independent LLM agent safety research group launched in 2024, focused on chain-of-thought monitorability.

R&D nonprofit prototyping AI-powered tools to generate formal specifications. Aiming to empower engineers to verify code easily and develop software that’s built for trust.

Developing tools that accelerate the rate at which human researchers can make progress on alignment, and building automated research systems that can assist in alignment work today.

Mechanistic interpretability research lab aiming to decode neural networks in order to make AI systems more understandable, editable, and safer.

Canadian nonprofit advancing research and developing technical solutions for safe-by-design AI systems based on Scientist AI, a research direction led by Yoshua Bengio.

Developing AI Control in order to increase the probability that effective AI Control systems will be deployed to mitigate catastrophic risks from frontier AI systems when they are developed.

Small team of researchers and engineers aiming to bring the best of physics and computational neuroscience together in order to understand and control AGI.

Nonprofit led by Owain Evans, researching situational awareness, deception, and hidden reasoning in language models.

CLTC – Center for Long-Term Cybersecurity

UC Berkeley research center bridging academic research and practical policy needs in order to anticipate and address emerging cybersecurity challenges.

Group working on foundational mathematics research that gives us an understanding of the nature of AI agents.

Using category theory and agent-based simulations to predict where multi-agent AI systems break down and identify control levers to prevent collective AI behavior from being harmful.

Applied mathematics research nonprofit dedicated to advancing foundational alignment research.

Capabilities Research

Google DeepMind

AI capabilities lab with a strong safety team.

San Francisco-based capabilities lab led by Sam Altman. Created ChatGPT.

Research lab focusing on LLM alignment, particularly interpretability. Featuring Chris Olah, Jack Clark, and Dario Amodei.

San Francisco-based nonprofit conducting safety research, building the field of AI safety researchers, and advocating for safety standards.

Alignment startup born out of EleutherAI, building LLMs and Cognitive Emulation systems.

Nonprofit researching interpretability and alignment.

Open-source research lab focused on interpretability and alignment. Operates primarily through a public Discord server, where research is discussed and projects are coordinated.

Product-driven research lab developing mechanisms for delegating high-quality reasoning to ML systems. Built Elicit, an AI assistant for researchers and academics.

An Oxford-based startup working on safe off-distribution generalization, featuring Stuart Armstrong.

FAR AI19 [Fund for Alignment Research]

Ensuring AI systems are trustworthy and beneficial to society by incubating and accelerating research agendas too resource-intensive for academia but not yet ready for commercialization.

Aiming to detect deception by designing AI model evaluations and conducting interpretability research to better understand frontier models. Also provides policymakers with technical guidance.

Nonprofit researching applications of singular learning theory to AI safety.

AI safety research community based in a small town in Vermont, USA.

Conducting long-term future research on improving cooperation in competition for the development of transformative AI.

Nonprofit research lab building AI tools to defend and enhance human agency – by researching and experimenting with novel AI capabilities.

METR – Model Evaluation & Threat Research20

Evaluating whether cutting-edge AI systems could pose catastrophic risks to society.

ARG – NYU Alignment Research Group

Group of researchers at New York University doing empirical work with language models aiming to address longer-term concerns about the impacts of deploying highly-capable AI systems.

CBL – University of Cambridge Computational and Biological Learning Lab

Research group using engineering approaches to understand the brain and to develop artificial learning systems.

MAI – Meaning Alignment Institute

Research organization applying expertise in meaning and human values to AI alignment and post-AGI futures.

French nonprofit working to incentivize responsible AI practices through policy recommendations, research, and risk assessment tools.

AI safety research group at the University of Cambridge led by David Krueger.

SSI – Safe Superintelligence Inc.

Research lab founded by Ilya Sutskever comprised of a small team of engineers and researchers working towards building a safe superintelligence.

For-profit company developing tools that automatically assess the risks of AI models and developing its own AI models aiming to provide best-in-class safety and security.

Nonprofit research lab building open source, scalable, AI-driven tools to understand and analyze AI systems and steer them in the public interest.

New public benefit corporation working on mitigating gradual disempowerment and the intelligence curse by creating personalized models.

New research nonprofit in Cambridge focused on leading projects with the shortest path to impact for AI safety. Initial work is on chain-of-thought health and monitoring.

Investigating cyber offensive AI capabilities and the controllability of frontier AI models in order to “better understand the risk of losing control to AI systems forever” and to advise policymakers and the public on AI risks.

Researching, benchmarking, and developing lie detectors for LLMs, including using cluster normalization techniques.

For-profit creating defensive cybersecurity products that mitigate AI x cyber risk. Also supports the Australian government with AI safety evals and policymaking.

CaML – Compassion in Machine Learning

Working on research to make transformative AI more compassionate towards all sentient beings and open-minded towards non-humans (animals and digital minds) through new data generation methods.

Founded by Max Tegmark, supports a range of technical AI safety research – including mechanistic interpretability, red-teaming, and guaranteed safe AI.

One of its projects is QAISI (Quantitative AI Safety Initiative).

Working on LM backdoors, real-world evals, and scalable oversight. Published a paper demonstrating cryptographic backdoors that evade detection even with full white-box access.

Training and Education

Runs the standard introductory courses, each three months long and split into two tracks: Alignment and Governance. Also runs shorter intro courses.

MATS – ML Alignment & Theory Scholars program21

Research program connecting talented scholars with top mentors in AI safety. Involves 10 weeks of onsite mentored research in Berkeley, and, if selected, 4 months of extended research.

3-month online research program with mentorship.

ERA – Existential Risk Alliance – Fellowship [spin-off of CERI – Cambridge Existential Risk Initiative]

In-person paid 8-week summer AI safety research fellowship at the University of Cambridge.

PIBBSS – Principles of Intelligence – Fellowship

3-month interdisciplinary program connecting researchers from diverse fields with AI safety mentors, in order to help them transition their careers to AI safety.

Runs AI safety events and training programs in London.

Comprehensive online introductory course on ML safety. Run by CAIS.

GCP – Global Challenges Project

Intensive 3-day workshops for students to explore the foundational arguments around risks from advanced AI (and biosecurity).

HAIST – Harvard AI Safety Student Team

Group of Harvard students conducting AI safety research and running fellowships, workshops, and reading groups.

Student group conducting AI safety research and running workshops and reading groups.

CBAI – Cambridge Boston Alignment Initiative

Boston organization for helping students get into AI safety via upskilling programs and fellowships. Supports HAIST and MAIA.

CLR – Center on Long-Term Risk – Summer Research Fellowship

2–3-month summer research fellowship in London working on reducing long-term future suffering.

HA – Human-aligned AI – Summer School22

4-day program for teaching alignment research methodology.

CHAI – Center for Human-Compatible AI – Internship

Research internship at UC Berkeley for people interested in research in human-compatible AI.

ARENA – Alignment Research Engineer Accelerator

4–5 week ML engineering upskilling program in London, focusing on alignment. Aims to provide individuals with the skills, community, and confidence to contribute directly to technical AI safety.

SERI – Stanford Existential Risk Initiative – Fellowship

10-week funded summer research fellowship for undergrad and grad students (primarily at Stanford).

AISI – AI Safety Initiative at Georgia Tech

Georgia Tech community hosting research projects and a fellowship.

Student group and research community under SERI. Accelerating students into careers in AI safety, building the community at Stanford, and conducting research.

French collective promoting mission-driven research to tackle global issues. Organizes conferences, hackathons, ML4Good bootcamps, and university reading groups and research projects.

WAISI – Wisconsin AI Safety Initiative

Wisconsin student group dedicated to reducing AI risk through alignment and governance.

AISG – AI Safety Initiative Groningen

Student group in Groningen, Netherlands.

10-day intensive, in-person bootcamps upskilling participants in technical AI safety research.

SPAR – Supervised Program for Alignment Research

Virtual, part-time research program offering early-career individuals and professionals the chance to engage in AI safety research for 3 months.

Monthly hackathons around the world for people getting into AI safety.

Supports students and professionals in contributing to the safe development of AI.

Annual 9-week program designed to enable promising researchers to produce impactful research and accelerate their careers in AI safety (or biosecurity).

Filipino nonprofit aiming to develop more AI interpretability and safety researchers, particularly in Southeast Asia.

1-year program catalyzing collaboration among young scientists, engineers, and innovators working to advance technologies for the benefit of life.

CAISH – Cambridge AI Safety Hub

Network of students and professionals in Cambridge conducting research, running educational and research programs, and creating a vibrant community of people with shared interests.

LASR – London AI Safety Research – Labs

12-week research program aiming to assist individuals in transitioning to full-time careers in AI safety.

XLab – UChicago Existential Risk Laboratory – Fellowship

10-week summer research fellowship giving undergraduate and graduate students the opportunity to produce high impact research on various emerging threats, including AI.

Impact Academy: Global AI Safety Fellowship

Fully-funded research program connecting exceptional STEM researchers with full-time placement opportunities at AI safety labs and organizations.

Training for Good – Talos Fellowship

7-month program enabling ambitious graduates to launch EU policy careers reducing risks from AI. Fellows participate in one of two tasks: training or placement.

Training for Good – Tarbell Center for AI Journalism23

Nonprofit supporting journalism that helps society navigate the emergence of increasingly advanced AI. Runs fellowships, grants, and residencies. Fellowship: A one-year program for early-career journalists interested in covering artificial intelligence. Fellows secure a 9-month placement at a major newsroom.

MARS – Mentorship for Alignment Research Students

Research program run by CAISH, connecting aspiring researchers with experienced mentors to conduct AI safety (technical or policy) research for 2–3 months.

Runs various projects aimed at education, skill development, and creating pathways into impactful careers.

FIG – Future Impact Group – Fellowship

Remote, part-time research opportunities in AI safety, policy, and philosophy. Also provides ongoing support, including coworking sessions, issue troubleshooting, and career guidance.

8-week mentored research program for London students, where teams explore a research question tackling global challenges – including AI governance and technical AI safety.

Workshop letting decision-makers experience the tensions and risks that can emerge in the highly competitive environment of AI development through an educational roleplay game.

Aiming to empower bright high schoolers to start solving the world's most pressing problems through various research programs.

Orion AI Governance Initiative

London-based talent development scheme designed to equip outstanding students with the knowledge and skills to shape the future of AI governance.

Fellowship run by Kairos for students organizing technical AI safety or AI policy university groups – providing mentorship, funding, and other resources.

Comprehensive, up-to-date directory of AI safety curricula and reading lists for self-led learning at all levels.

Athena Mentorship Program for Women

10-week remote mentorship program for women looking to strengthen their research skills and network in technical AI alignment research.

Annual program providing students and early-career professionals in AI, computer science, and related disciplines with a firm grounding in the emerging field of cooperative AI.

3-month program from the Centre for the Governance of AI designed to help researchers transition to working on AI governance full-time.

Fully-funded program from the Institute for AI Policy and Strategy for professionals seeking to strengthen practical policy skills for managing the challenges of advanced AI.

African-led research program dedicated to building talent, generating research, and shaping policy to advance AI safety.

TARA – Technical Alignment Research Accelerator

14-week part-time program building technical AI safety research skills through the ARENA curriculum. Consists of weekly in-person learning sessions followed by a 3-week project.

Research Support

Maintains LessWrong, the Alignment Forum, and Lighthaven (an event space in Berkeley).

“Means-neutral” AI safety organization, doing miscellaneous stuff, including offering bounties on small-to-large AI safety projects and running a funder network.

CEEALAR – Centre for Enabling EA Learning & Research24 [formerly “EA Hotel”]

Free or subsidized accommodation and catering in Blackpool, UK, for people working on/transitioning to working on global catastrophic risks.

AED – Alignment Ecosystem Development

Building and maintaining key online resources for the AI safety community. Volunteers welcome.

Non-profit AI safety research lab hosting open-to-all research sprints, publishing papers, and incubating talented researchers to make AI safe and beneficial for humanity.

Consultancy for forecasting, machine learning, and policy. Doing original research, evidence reviews, and large-scale data pipelines.

BERI – Berkeley Existential Risk Initiative

Providing free operations support for university research groups working on existential risk.

SERI – Stanford Existential Risks Initiative

Collaboration between faculty and students. Runs research fellowships, an annual conference, speaker events, discussion groups, and a frosh-year class.

Providing consultancy and hands-on support to help high-impact organizations upgrade their operations.

GPAI – Global Partnership on AI

International initiative with 44 member countries working to implement human-centric, safe, secure, and trustworthy AI embodied in the principles of the OECD Recommendation on AI.

ENAIS – European Network for AI Safety

Connecting researchers and policymakers for safe AI in Europe.

Berkeley research center growing and supporting the AI safety ecosystem.

LISA – London Initiative for Safe AI

Coworking space hosting organizations (including BlueDot Impact, Apollo, Leap Labs), acceleration programs (including MATS, ARENA), and independent researchers.

Working to ensure the benefits of data and AI are justly and equitably distributed, and enhance individual and social wellbeing.

Incubating early-stage AI safety research organizations. The program involves co-founder matching, mentorship, and seed funding, culminating in an in-person building phase.

Fostering responsible governance of AI to reduce catastrophic risks through shared understanding and collaboration among key global actors.

Providing a platform that allows companies and individuals to evaluate the capabilities of AI models and therefore know how much they can trust them.

Providing fiscal sponsorship to AI safety projects.

Helping concerned individuals working at the frontier of AI get expert opinions on their questions, anonymously and securely.

Nonprofit running programs to give altruists the tools they need to improve their mental health, so they can have a greater impact on the world.

AI safety coworking and events space in downtown Toronto, Canada, providing a physical space, hosting informative events, and maintaining a collaborative community.

Coworking space and field-building nonprofit based in Cambridge, UK. Houses projects like CAISH and the ERA:AI Fellowship, as well as visiting AI safety researchers.

FFF – Flourishing Future Foundation

Provides engineering teams, compute, and infrastructure to researchers advancing neglected approaches to alignment.

Marketing firm helping socially impactful projects grow, including through consulting and running full-scale campaigns.

Incubator and coworking space in San Francisco aimed at various groups, including those working on AI safety.

Program designed to help AI safety organizations strengthen their management and leadership practices in order to amplify their effectiveness.

Free accommodation and board in Blackpool, England, for individuals doing work related to pushing for a pause to AI development. Located next to CEEALAR.

Coworking space in Zurich, Switzerland, for people working on AI safety or effective altruism. Regularly hosts events – ranging from relaxed gatherings to lightning talks and discussions.

SASH – Singapore AI Safety Hub

Coworking, events, and community space for people working on AI safety. Runs regular talks, hackathons, and networking events.

Career Support

Helps people navigate the AI safety space with a welcoming human touch, offering personalized guidance and fostering collaborative study and project groups.

Field-building organization with an extensive resources list.

Article with motivation and advice for pursuing a career in AI safety.

Helping professionals transition to high-impact work by performing market research on impactful jobs and providing career mentoring, opportunity matching, and professional training.

Curated list of job postings tackling pressing problems, including AI safety.

Effective Thesis – Academic Opportunities

Lists thesis topic ideas in AI safety and coaches people working on them.

Nonlinear – Coaching for AI Safety Entrepreneurs

Free coaching for people running an AI safety startup or considering starting an AI safety org (technical, governance, meta, for-profit, or non-profit).

HIP – High Impact Professionals

Supporting working professionals to maximize their positive impact through their talent directory and Impact Accelerator Program.

Applied research lab helping existential safety advocates to systematically optimize their lives and work.

Directory of advisors offering free guidance calls to help you discover how best to contribute to AI safety, tailored to your skills and interests.

Helps those who want to have a meaningful impact with their careers brainstorm career paths, evaluate options, and plan next steps.

Identifies leaders from business, policy, and academia, and helps them take on new ambitious projects in AI safety.

Working to bridge the gap between frontier AI models and the level of cybersecurity they need by connecting professionals to high-leverage opportunities in AI security.

Resources

How to pursue a career in technical AI alignment

A guide for people who are considering direct work on technical AI alignment.

Interactive FAQ; Single-Point-Of-Access into AI safety. Part of AISafety.info.

Comprehensive database of available training programs and events.

Repository of possible research projects and testable hypotheses. Run by Apart Research.

Database of AI safety research agendas, people, organizations, and products.

Ranked and scored contributable compendium of alignment plans and their problems.

Interactive walkthrough of core AI x-risk arguments and transcripts of conversations with AI researchers. Project of Arkose (2022).

Database of grants in effective altruism.

Website tracking donations to AI safety. Full site planned for launch in July 2026.

Interactive explainers on AI capabilities and their effects. (By Sage Future).

Helps you learn and memorize the main organizations, projects, and programs currently operating in the AI safety space.

Cartoon map showing various organizations, projects, and policies in the AI governance space.

A guide to AI safety for those new to the space, in the form of a comprehensive FAQ and chatbot (Stampy).

The hub for key resources for the AI safety community, including directories of courses, jobs, upcoming events, and training programs, etc.

Collects actions for frontier AI labs to avert extreme risks from AI, then evaluates particular labs accordingly.

Living document aiming to present a coherent worldview explaining the race to AGI and extinction risks and what to do about them – in a way that is accessible to non-technical readers.

Helping ensure domains in cause areas like AI safety are pointed towards high-impact projects.

Directory of local and online AI safety communities.

AISafety.com – Events & Training

Comprehensive database of available training programs and AI safety events.

Visual overview of the major events in AI over the last decade, from cultural trends to technical advancements.

AI safety chatbot that excels at addressing hard questions and counterarguments about existential risk. A project by AIGSI – AI Governance and Safety Institute and AISGF – AI Safety and Governance Fund.

Comprehensive living database of over 1600 AI risks, categorized by their cause and risk domain.

The biggest real-time online community of people interested in AI safety, with channels ranging from general topics to specific fields to local groups.

Online platform monitoring the emergence of large-scale AI risks, featuring curated information on evaluations, incidents, and policies.

Accessible, comic-illustrated series describing the history of AI capabilities, the alignment problem, and potential solutions.

Spanish-language website featuring AI safety educational tools, including a daily‑updated monitor of AI safety research papers.

Media

YouTuber covering the latest developments in AI.

Interviews on pursuing a career tackling pressing problems, including AI safety.

Interviews with AI safety researchers, explainers, fictional stories of concrete threat models, and paper walk-throughs.

Animated videos on effective altruism, rationality, the future of humanity, and AI safety.

Comprehensive index of AI safety video content.

Occasional newsletter from CAIS, focused on applied AI safety and ML.

Weekly developments in AI (incl. governance) written by Jack Clark, co-founder of Anthropic.

ERO – Existential Risk Observatory

Reducing existential risks by informing the public debate.

AXRP – AI X-risk Research Podcast

Interviews with technical AI safety researchers about their research.

FLI – Future of Life Institute – Podcast

Interviews with existential risk researchers.

AI safety explainers in video form.

Newsletter published every few weeks discussing developments in AI and AI safety. No technical background required. Run by CAIS.

YouTube channel explaining (mostly) AI safety concepts through entertaining stickman videos.

ARN – The AI Risk Network [For Humanity podcast]

YouTube channel run by John Sherman, a Peabody and Emmy Award-winning former investigative journalist, aiming to make AI risk a kitchen table conversation.

Biweekly podcast where host Nathan Labenz interviews AI innovators and thinkers, diving into the transformative impact AI will likely have in the near future.

Well-researched interviews with influential intellectuals going in-depth on AI, technology, and their broader societal implications. Hosted by Dwarkesh Patel.

Podcast from the Center for Strategic & International Studies (CSIS) discussing AI regulation, innovation, national security, and geopolitics.

Channel hosted by Liron Shapira featuring in-depth debates, explainers, and live Q&A sessions, focused on AI existential risk and other implications of superintelligence.

Computer science PhD and AI research scientist talking about how AI will likely affect all of us and society as a whole, plus interviews with experts.

Channel produced by 80,000 Hours presenting thoroughly researched, cinematic stories about what’s happening in AI and where the trends are taking us.

The AI safety space is changing rapidly. This directory of key information sources can help you keep up to date with the latest developments.

YouTube channel (by Michael from the AI Risk Network’s “Warning Shots”) raising awareness about the lethal dangers of AGI through explainer videos and podcasts.

Channel run by Drew Spartz educating a general audience about AI risk through high-effort mini-documentaries.

Public Outreach/Advocacy

Campaign group aiming to convince governments to pause AI development – through public outreach, engaging with decision-makers and organizing protests.

Calling on policymakers to implement a global moratorium on large AI training runs until alignment is solved.

Watchdog nonprofit monitoring tech companies, countering corporate propaganda, raising awareness about corner-cutting, and advocating for the responsible development of AI.

AISAF – AI Safety Awareness Foundation

Volunteer organization dedicated to raising awareness about modern AI, highlighting its benefits and risks, and letting the public know how they can help – mainly through workshops.

AISAP – AI Safety Awareness Project

Volunteer organization dedicated to raising awareness about modern AI, highlighting its benefits and risks, and letting the public know how they can help – mainly through workshops.

New communications nonprofit focused on changing the narrative air that policymakers in DC breathe with respect to AI safety.

Non-violent civil resistance organization working to permanently ban the development of smarter-than-human AI to prevent human extinction, mass job loss, and other problems.

Uses legal advocacy in Europe to address risks from frontier technologies, working to foster responsible development and implementation practices.

LASST – Legal Advocates for Safe Science and Technology

Researches ways to use legal advocacy to make science and technology safer, informs legal professionals about how to help, and advocates in the courts and policy-setting institutions.

Empowering organizations, researchers, and creators to translate the future of AI into stories the world understands.

Conducting educational initiatives, research support, and public awareness campaigns. Project of Geoffrey Hinton. Includes “The Hinton Lectures 2025” and the “CJF Hinton Award” for excellence in AI safety reporting.

Nonprofit founded by David Krueger aiming to inform and organize the public around societal-scale risks and harms of AI.

New advocacy org demanding transparency, accountability, and real safeguards to protect humanity from the risks of unchecked AI.

*Currently without a known team. It has the same EIN as Arkose (93-2600745).

New organization, currently promoted by Joe Allen (from Steve Bannon’s War Room) and Jeremy Ornstein (previously an activist against fossil fuels), running Town Halls across the U.S. (March 2026: Florida, Georgia, Tennessee, North Carolina, Virginia).

Blogs/Newsletters

Online forum dedicated to improving human reasoning, containing a lot of AI safety content. Also has a podcast featuring text-to-speech narrations of top posts.

EA – Effective Altruism – Forum

Forum on doing good as effectively as possible, including AI safety. Also has a podcast featuring text-to-speech narrations of top posts.

Central discussion hub for AI safety. Most AI safety research is published here.

Blog about transformative AI, futurism, research, ethics, philanthropy, etc., by Holden Karnofsky.

Blog covering many topics. Includes book summaries and commentary on AI safety.

Wiki on AI alignment theory, mostly written by Eliezer Yudkowsky.

Generative.ink, the blog of janus the GPT cyborg.

Blog by a research scientist at Google DeepMind working on AGI safety.

Bounded Regret – Jacob Steinhardt's Blog

UC Berkeley statistics prof blog on ML safety.

Safety research from DeepMind (hybrid academic/commercial lab).

Blog on AI safety work by a PhD mathematician and AI safety researcher.

Daniel Paleka – AI safety takes

Newsletter on AI safety news, delivered about every two months.

Index of Vox articles, podcasts, etc., around finding the best ways to do good.

Newsletter from Concordia AI, a Beijing-based social enterprise, giving updates on AI safety developments in China.

Blog by Zvi Mowshowitz30 on various topics, including AI.

Blog by Eric Drexler on AI prospects and their surprising implications for technology, economics, environmental concerns, and military affairs.

Weekly briefing and occasional analyses of what matters in AI and AI policy. Written by Shakeel Hashim, ex-news editor at The Economist.

Top forecaster Peter Wildeford forecasts the future and discusses AI, national security, innovation, emerging technology, and the power – real and metaphorical – that shapes the world.

Publication by Garrison Lovely on the intersection of capitalism, geopolitics, and AI. Posts about once or twice a month.

Blog from ex-OpenAI (now independent) AI policy researcher Miles Brundage on the rapid evolution of AI and the urgent need for thoughtful governance.

Publishes policy-relevant perspectives on frontier AI governance, including research summaries, opinions, interviews, and explainers.

Platform from the Center for AI Safety (CAIS) posting articles written by experts from a wide range of fields discussing the impacts of AI.

Deep coverage of technology, China, and US policy, featuring original analysis alongside interviews with thinkers and policymakers. Written by Jordan Schneider.

Building Capacity [formerly, the Field Building blog]

Blog about building the fields of AI safety and Effective Altruism, discussing big-picture strategy and sharing personal experience from working in the space.

Approximately fortnightly blog by Anton Leicht about charting a course through the politics of rapid AI progress.

Can We Secure AI With Formal Methods?

Current-events newsletter for keeping up to date with FMxAI (formal methods and AI), geared toward safety, with a mix of shallow technical reviews of papers and movement updates.

Johannes Gasteiger, an alignment researcher at Anthropic, selects and summarizes the most interesting AI safety papers each month.

Blog by Helen Toner (director of CSET and former OpenAI board member) offering analysis on navigating the transition to advanced AI systems.

Final Note

I recently published on Techdirt a recap of 2024: “AI Panic Flooded the Zone, Leading to a Backlash.” It explains how the AI doomers went too far and why it backfired. The two cautionary tales were the EU AI Act and California’s SB-1047.

However, considering this ecosystem’s massive funding, it is expected that the efforts to stop/pause AI will continue in 2025. The fight for the future of AI development is far from over.

Endnotes

This new visualization of Open Philanthropy’s funding shows that the existential risk ecosystem (“Potential Risks from Advanced AI” + “Global Catastrophic Risks” + “Global Catastrophic Risks Capacity Building,” different names for funding Effective Altruism AI Safety organizations/groups) has received ~$780 million.

On November 18, 2025, Open Philanthropy rebranded to Coefficient Giving.

Jaan Tallinn’s SFF (The Survival and Flourishing Fund) donates “to organizations concerned with the long-term survival and flourishing of sentient life.” Between 2019 and 2023, SFF donated ~$76 million. An additional ~$41 million was donated in 2024, bringing the total to ~$117 million.

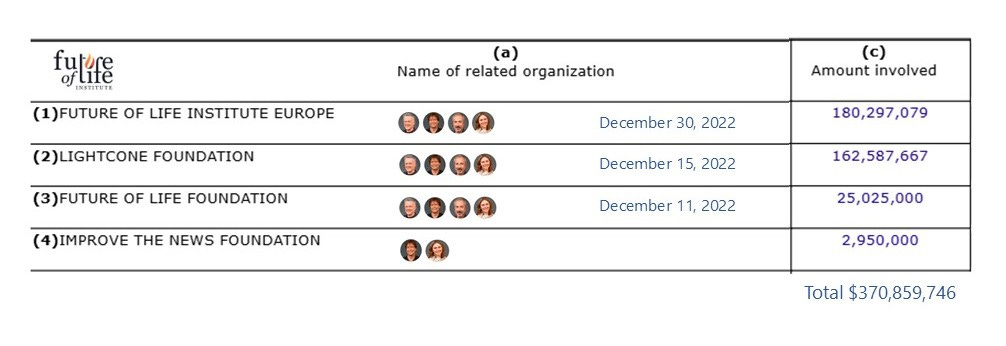

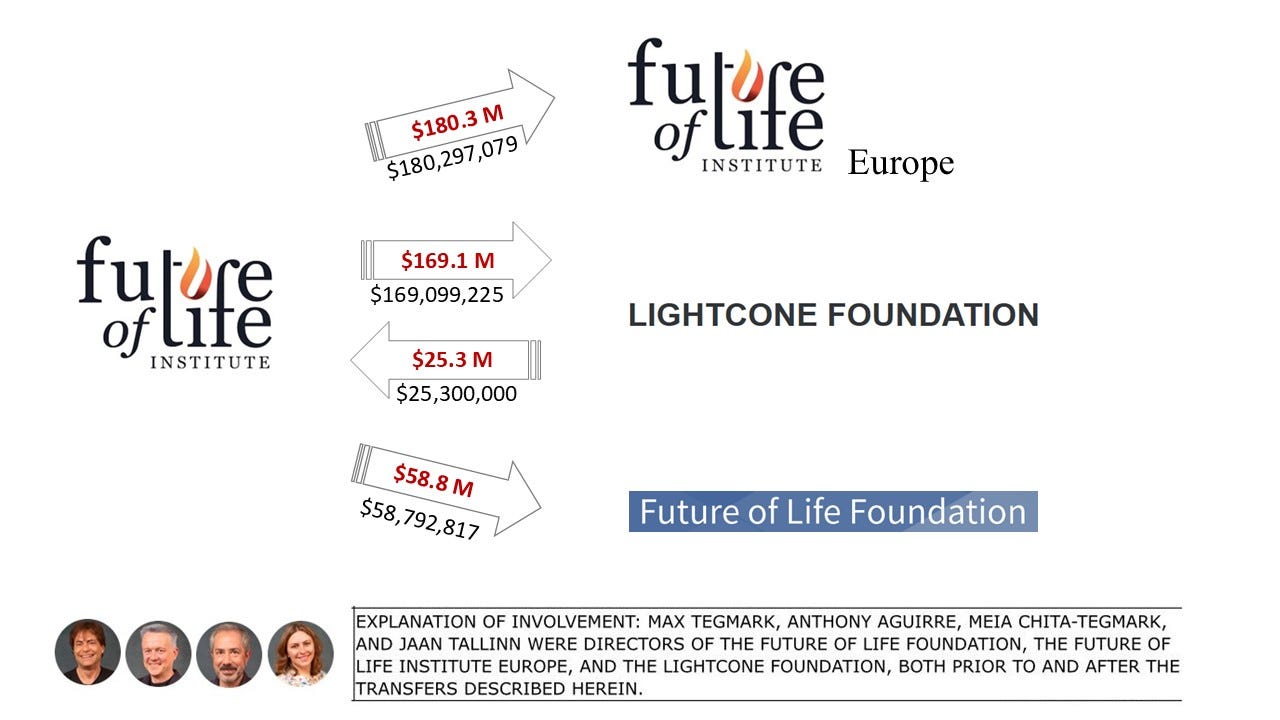

The Future of Life Institute (FLI), through the Future of Life Foundation (FLF), aims “to help start 3 to 5 new organizations per year.” The initial transfer from FLI to FLF in 2022 was $25,025,000. Since then, FLI has given FLF an additional $15,681,663 in 2023 and $18,086,154 in 2024. So, the total comes to $58,792,817.
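The total in this endnote can be re-derived from the three transfers it quotes. A minimal sketch follows; the variable names are illustrative and do not come from any filing.

```python
# FLI -> FLF transfers by year, in US dollars, as quoted in the endnote above.
flf_transfers = {
    2022: 25_025_000,
    2023: 15_681_663,
    2024: 18_086_154,
}

# Sum the yearly transfers to get the cumulative amount.
flf_total = sum(flf_transfers.values())
print(f"Total FLI -> FLF: ${flf_total:,}")
```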

In 2024, we found out that the Future of Life Institute (FLI) was no longer a $2.4-million organization but a $674-million organization. Max Tegmark’s organization managed to convert a cryptocurrency donation (Shiba Inu tokens) to $665 million (using FTX/Alameda Research).

In its advocacy, FLI proposed stringent regulation on models with a compute threshold of 10^25 FLOPs, explaining it “would apply to fewer than 10 current systems.”

Between December 11 and December 30, 2022, FLI transferred $368 million to three entities, all governed by the same four people: Max Tegmark, Meia Chita-Tegmark, Anthony Aguirre, and Jaan Tallinn.

1. Future of Life Institute Europe (FLIE) = $180.3 million.

2. Lightcone Foundation = $162.6 million.

3. Future of Life Foundation (FLF) = $25 million.

Lightcone Foundation: The initial transfer from FLI to Lightcone Foundation in 2022 was $162,587,667. Since then, there has been a two-way flow between them: FLI reported sending $6,511,558 to Lightcone (in 2023) and receiving $25,300,000 from Lightcone (in 2023 and 2024).

CSET, the Center for Security and Emerging Technology at Georgetown University, received more than $100 million from Open Philanthropy. Its director of foundational research grants is Helen Toner. Her background includes GiveWell, the Center for the Governance of AI, and Open Philanthropy, where she was a senior research analyst. In September 2021, she replaced Open Philanthropy’s Holden Karnofsky on OpenAI’s board of directors. Due to the attempted coup at OpenAI, she is no longer on its board.

Throughout 2024, the AI Policy Institute (AIPI), which aims to promote policies to “mitigate the risk of extinction,” published numerous polls to “shift the narrative in favor of decisive government action.” It was a successful campaign, as the polling results were frequently quoted in Politico, Axios, TIME magazine, and The Hill and were used by politicians to justify their bills. The mainstream media failed to criticize AIPI’s “push polling” even when the wording was designed to sway the respondents’ views in a specific desired direction. The media also didn’t provide context about its founder, Daniel Colson, and his ties to Leverage Research.

Hopefully, in 2025, we will have better and unbiased polling about AI.

The International Center for Future Generations (ICFG) proposed that “open-sourcing of advanced AI models trained on 10^25 FLOP or more should be prohibited.”

AI Impacts’ annual survey is very problematic, as I pointed out several times.

The Future Society got $627,000 from Jaan Tallinn’s SFF and $365,000 from Jaan Tallinn’s FLI.

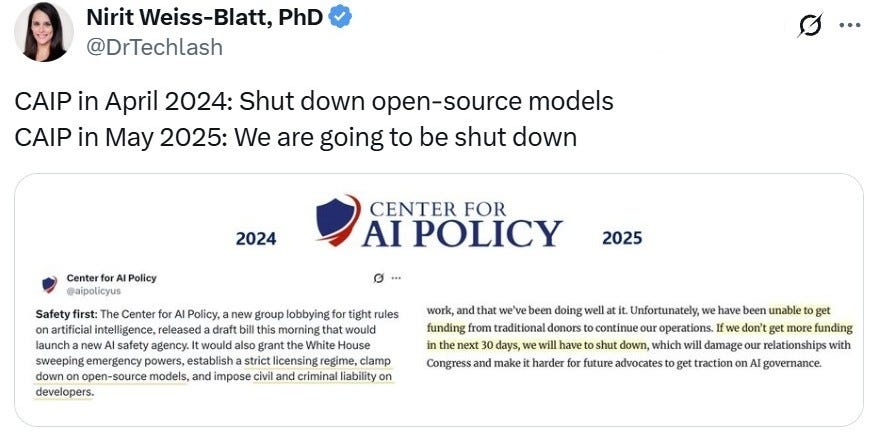

The Center for AI Policy (CAIP) released a draft bill on April 9, 2024, describing it as a “framework for regulating advanced AI systems.” This “Model Legislation” would “establish a strict licensing regime, clamp down on open-source models, and impose civil and criminal liability on developers.” Considering the board of directors of CAIP, it’s no surprise that its proposal was called “the most authoritarian piece of tech legislation”: David Krueger from the Centre for the Study of Existential Risks, MIRI’s Nate Soares and Thomas Larsen, and Palisade Research’s Jeffrey Ladish (who also leads the AI work at the Center for Humane Technology, and said, “We can prevent the release of a LLaMA 2! We need government action on this asap”). Together, they declared war on the open-source community.

Update: No Longer Active.

CARMA (Center for AI Risk Management & Alignment) is an affiliated organization of the Future of Life Institute. It aims to “help manage” the “existential risks from transformative AI through lowering their likelihood.” This new initiative was founded by FLI thanks to Vitalik Buterin’s Shiba Inu donation.

The Narrow Path proposal started with “AI poses extinction risks to human existence” (according to an accompanying report, The Compendium, “By default, God-like AI leads to extinction”). Instead of asking for a six-month AI pause, this proposal asked for a 20-year pause. Why? Because “two decades provide the minimum time frame to construct our defenses.”

RAND received over $42 million from Open Philanthropy (Coefficient Giving).

Roman Yampolskiy updated his prediction of human extinction from AI to 99.999999%. That’s a bit higher than Eliezer Yudkowsky’s probability of >95%.

Yann LeCun said it is “quickly becoming indistinguishable from an apocalyptic religion.”

Yoshua Bengio’s LawZero is funded by Coefficient Giving (formerly, Open Philanthropy), the Future of Life Institute (a donor-advised fund of Silicon Valley Community Foundation, SVCF), SVCF via Jaan Tallinn and the Survival and Flourishing DAF (a donor-advised fund of SVCF), Gates Foundation, and Schmidt Sciences.

Dan Hendrycks from the Center for AI Safety expressed an 80% probability of doom and claimed, “Evolutionary pressure will likely ingrain AIs with behaviors that promote self-preservation.” He warned that we are on “a pathway toward being supplanted as the Earth’s dominant species.”

Hendrycks also suggested “CERN for AI,” imagining “a big multinational lab that would soak up the bulk of the world’s graphics processing units [GPUs]. That would sideline the big for-profit labs by making it difficult for them to hoard computing resources.”

In that same Boston Globe profile, Hendrycks also said that only multinational regulation will do. And with China moving to place strict controls on artificial intelligence, he sees an opening. “Normally it would be, ‘Well, we want to win as opposed to China, because they would be so bad and irresponsible,’” he says. “But actually, we might be able to jointly agree to slow down.” He later speculated that AI regulation in the U.S. “might pave the way for some shared international standards that might make China willing to also abide by some of these standards” (because, of course, China will slow down as well… That’s how geopolitics work!).

In 2023, CAIS proposed to ban open-source models trained beyond 10^23 FLOPs. Llama 2 was trained with > 10^23 FLOPs and thus would have been banned.
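The comparison with the 10^23 threshold can be sanity-checked with a back-of-the-envelope estimate. The sketch below is not from CAIS or Meta: it assumes the common rule of thumb that training compute is roughly 6 × parameters × training tokens, and it assumes the publicly reported figures for the largest Llama 2 model (70 billion parameters, about 2 trillion tokens). The result is an order-of-magnitude estimate only.

```python
# Rough training-compute estimate: FLOPs ~= 6 * parameters * training tokens.
# The factor 6 is a widely used heuristic (an assumption here), not an exact count.
THRESHOLD_FLOPS = 1e23  # threshold quoted in the endnote above

# Assumed figures for the largest Llama 2 model, as publicly reported.
params = 70e9   # 70 billion parameters
tokens = 2e12   # about 2 trillion training tokens

estimated_flops = 6 * params * tokens
exceeds_threshold = estimated_flops > THRESHOLD_FLOPS

print(f"Estimated training compute: {estimated_flops:.1e} FLOPs")
print(f"Above the 1e23 threshold: {exceeds_threshold}")
```

Under these assumptions the estimate lands several times above the threshold, which is consistent with the endnote's claim.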

CAIS’s funding [Updated on June 9, 2025]: $12,485,288 from Dustin Moskovitz’s Open Philanthropy. $6,418,000 from Jaan Tallinn’s SFF (Survival and Flourishing Fund). $1,455,200 from SVCF (Silicon Valley Community Foundation). $22,000 from the Future of Life Institute and ~ $528,000 in Bitcoin donations. Out of the $6.5 million from SBF’s FTX Future Fund, CAIS eventually returned $5.2 million to FTX Debtors (Case 22-11068-JTD, June 2024). Thus, more than $22M from EA billionaires.

CAIS sponsored Senator Scott Wiener’s SB-1047, and the headline collage below illustrates the criticism of the bill as it would strangle innovation, AI R&D, and the open-source community in California and around the world.

The bill was eventually rejected by Gavin Newsom’s veto. The governor explained that there’s a need for evidence-based regulation.

Conjecture CEO Connor Leahy shared that he does “not expect us to make it out of this century alive; I’m not even sure we’ll get out of this decade!”

No, this is not an Apocalyptic Cult. Not at all.

Redwood Research got $25,420,000 from Open Philanthropy and $2,372,000 from Jaan Tallinn’s SFF.

FAR AI got $3,266,243 from Open Philanthropy and $4,079,000 from Jaan Tallinn’s SFF.

METR (Model Evaluation & Threat Research) got $1,515,000 from Open Philanthropy and $5,426,000 from Jaan Tallinn’s SFF.

MATS (ML Alignment & Theory Scholars) got $9,832,176 from Open Philanthropy and $442,000 from Jaan Tallinn’s SFF (through BERI – Berkeley Existential Risk Initiative).

Recruit new EAs: Missing from the “Training and Education” section are the high-school summer programs. A lesser-known fact is that effective altruists recruit new members at an early age and lead them into the “AI existential risk” realm.

Thanks to the research report “Effective Altruism and the strategic ambiguity of ‘doing good’” by Mollie Gleiberman (University of Antwerp, Belgium, 2023), I learned about their tactics:

“To give an example of how swiftly teenagers are recruited and rewarded for their participation in EA: one 17-year-old recounts how in the past year since they became involved in EA, they have gained some work experience at Bostrom’s FHI; an internship at EA organization Charity Entrepreneurship; attended the EA summer program called the European Summer Program on Rationality (ESPR); been awarded an Open Philanthropy Undergraduate Scholarship (which offers full funding for an undergraduate degree); been awarded an Atlas Fellowship ($50,000 to be used for education, plus all-expenses-paid summer program in the Bay Area); and received a grant of an undisclosed amount from CEA’s EA Infrastructure Fund to drop out of high-school early, move to Oxford, and independently study for their A-Levels at the central EA hub, Trajan House, which houses CEA, FHI, and GPI among other EA organizations.”

Tarbell’s main funders: Open Philanthropy (Coefficient Giving) = $999,000 + $816,000 + $2,888,000 = $4,703,000. Jaan Tallinn’s Survival and Flourishing Fund (SFF) = $520,000 + $583,000 + $200,000 = $1,303,000; adding $150,000 from the Future of Life Institute (FLI) brings that to $1,453,000.

December 2025 update from Semafor: Tarbell fellows have joined various newsrooms, including TIME magazine, NBC News, Los Angeles Times, Bloomberg, The Verge, The Guardian, MIT Technology Review, The Information, and Platformer.

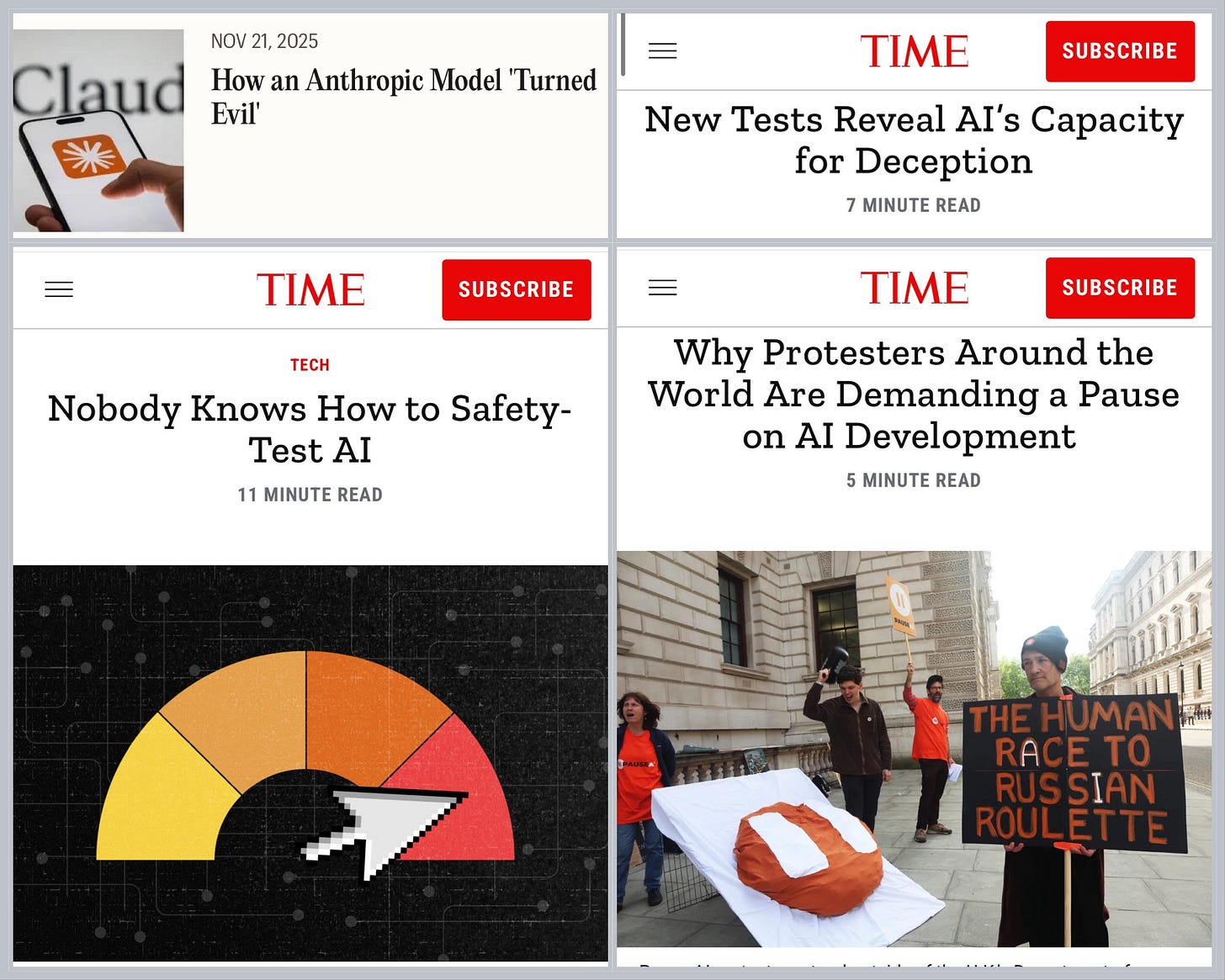

Examples of Tarbell fellows’ TIME magazine headlines:

The EA Hotel (CEEALAR) is the Athena Hotel in Blackpool, UK, which Greg Colbourn acquired in 2018 for effective altruists’ “learning and research.” He bought it for £100,000 after cashing in his cryptocurrency, Ethereum.

But why stop with hotels, if the EA movement can have mansions and castles?

The research report by Gleiberman also gave me valuable insight into effective altruism expenditures:

For effective altruists, retreats, workshops, and conferences are so important that they justify spending more than $50,000,000 on luxury properties.

Wytham Abbey (Oxford, England) = $22,805,823 for purchase and renovations.

Rose Garden Inn (Berkeley, CA) = $16,500,000 + $3,500,000 for renovations.

Chateau Hostacov (Czech Republic) = $3,500,000 + $1,000,000 for operational costs.

Lakeside Guest House (Oxford, England) = $1.8 million.

Bay Hill Mansion (Bodega Bay, north of San Francisco, CA) = $1.7 million.

Apparently, it’s “rational” to think about “saving humanity” from “rogue AI” while sitting in a $20 million mansion. Elite universities’ conference rooms are not enough (they are for the poor).

Effective Ventures UK listed the Wytham Abbey mansion for sale, but only because it was forced to return a $26.8 million donation to the victims of the crypto-criminal Sam Bankman-Fried.

EA Domains’ principles: “We aim to prevent hassle and cost for project-starters by holding relevant domains before domain squatters get to them.” It’s FREE for EA projects. Among the 300 domains: stronglongtermism.com, rationalitycommunity.com, and apocalypticism.org.

Rational Animations got $4,265,355 from Open Philanthropy. Its videos serve a specific purpose: “In my most recent communications with Open Phil, we discussed the fact that a YouTube video aimed at educating on a particular topic would be more effective if viewers had an easy way to fall into an ‘intellectual rabbit hole’ to learn more.”

Sam Bankman-Fried planned to add $400,000 but was convicted of seven fraud charges for stealing $10 billion from customers and investors in “one of the largest financial frauds of all time.”

“Species | Documenting AGI” is one of the worst propaganda channels on AI risk and has gathered more than 21 million views. For example, it described Anthropic’s blackmail study in the most misleading way, saying, “No, the researchers didn’t tell it to do this” and “they didn’t bias the AI in any way” (in a more recent video, the host also claimed it revealed the Shoggoth: “it was the mask slipping”). In fact, this fearmongering was debunked. Anthropic’s researchers iterated “hundreds of prompts to trigger blackmail in Claude.” “The details of the blackmail scenario were iterated upon until blackmail became the default behavior of LLMs.” You can read more about it in “AI Blackmail: Fact-Checking a Misleading Narrative.”

StopAI’s activists chained themselves to OpenAI’s gate in SF, blocked the entrance, and got arrested. Guido Reichstadter ended a 30-day hunger strike in front of Anthropic on October 3, 2025.

In December 2025, StopAI co-founder Sam Kirchner abandoned the group’s commitment to nonviolence and disappeared. His threats prompted OpenAI to lock down its San Francisco offices. You can read more about it in “Radicalized Anti-AI Activist Should Be A Wake-Up Call For Doomer Rhetoric.”

In a Telegraph article, Zvi Mowshowitz claimed:

“Competing AGIs [artificial general intelligence] might use Earth’s resources in ways incompatible with our survival. We could starve, boil or freeze.”

The news about FLI’s $665M donation sparked a backlash against its policy advocacy. In response, Mowshowitz wrote: “Yes, the regulations in question aim to include a hard compute limit, beyond which training runs are not legal. And they aim to involve monitoring of large data centers in order to enforce this. I continue to not see any viable alternatives to this regime.”

With even a basic understanding of media and the philosophy of technology, it’s clear that safety often evolves alongside technological progress. Yes, there are unpredictable and sometimes painful consequences, but that’s true of any major innovation. What’s truly absurd is the media hysteria claiming AI is taking over our lives and needs to be urgently regulated! By whom? A handful of millionaires. The irony is staggering. If you haven’t bought into far-left fear-mongering, this article is a real eye-opener.

I recommend putting the companies into a table instead of their own paragraphs.