THE RATIONALITY TRAP


Prologue

Jessica Taylor joined the Machine Intelligence Research Institute (MIRI) in August 2015.

Located in Berkeley, California, it was the epicenter of a small community that calls itself the rationalist community. Its leader is writer Eliezer Yudkowsky, the founder of the LessWrong forum and MIRI, who hired her as a research fellow. MIRI was preoccupied with the idea of “friendly” artificial intelligence (AI). Taylor had an MSc in computer science from Stanford and wanted to work on software agents “that can acquire human concepts.”

Two years later, she had a psychotic break. “I believed that I was intrinsically evil,” she would later write, “[and that I] had destroyed significant parts of the world with my demonic powers.”

After she shared the details of her experience on the community’s forum in 2021, other testimonies surfaced.

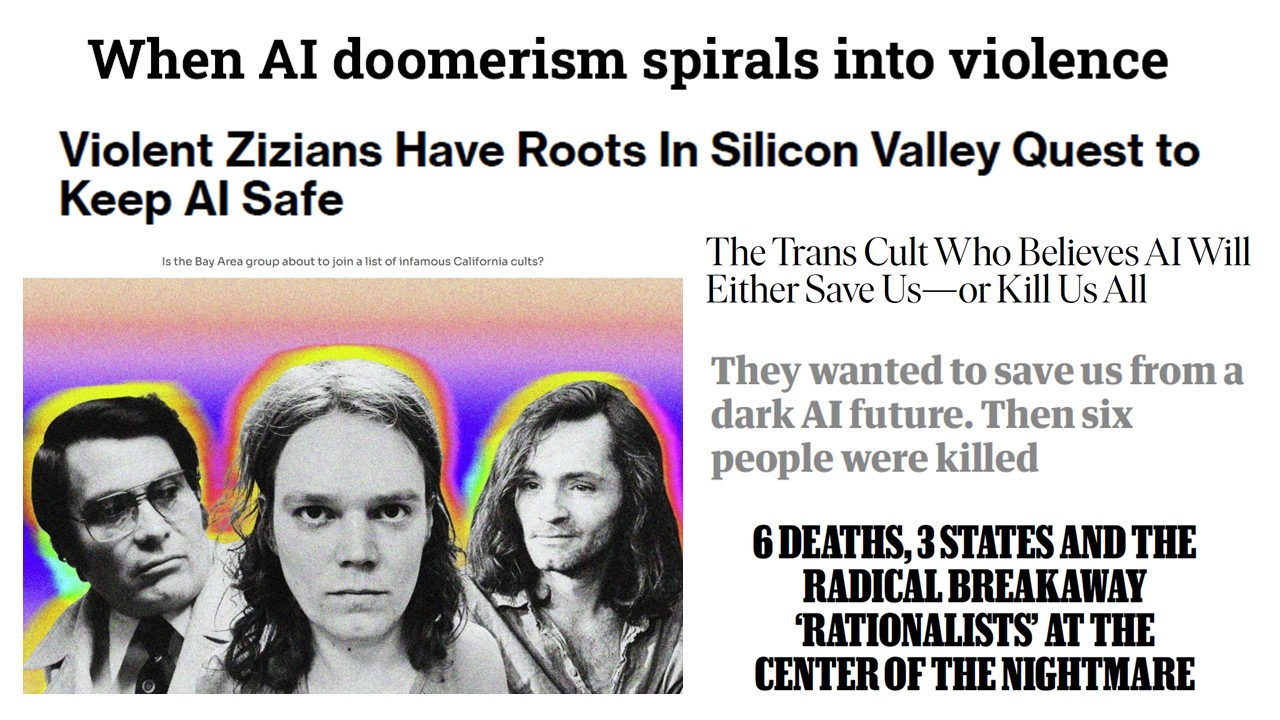

In 2023, Bloomberg gave the wider public a glimpse with “The Real-Life Consequences of Silicon Valley’s AI Obsession.”

“Taylor’s experience wasn’t an isolated incident. It encapsulates the cultural motifs of some rationalists, who often gathered around MIRI or CFAR employees, lived together, and obsessively pushed the edges of social norms, truth, and even conscious thought,” reported Bloomberg journalist Ellen Huet. “They referred to outsiders as normies and NPCs [non-player characters]. At house parties, they spent time ‘debugging’ each other, engaging in a confrontational style of interrogation that would supposedly yield more rational thoughts.” It didn't stop there. “Sometimes, to probe further, they experimented with psychedelics and tried ‘jailbreaking’ their minds, to crack open their consciousness and make them more influential, or ‘agentic.’” Taylor shared that several people in her sphere had similar psychotic episodes. “One died by suicide in 2018, and another in 2021.”

When Taylor joined the rationalist community, it had a seductive promise: we are the select few, who are so intelligent that we could save the world through our superpowered reasoning. They preached a new gospel from the heart of Silicon Valley: Superintelligent AI is coming, and we are the only ones clear-eyed and wise enough to stop the apocalypse before it’s too late. But reality proved very different: a trail of traumatic events, psychotic breakdowns, high-control groups, and, in the most extreme manifestation, a murder cult.

What Happened to the Rationalists?

A wave of new articles, by reporters and insiders, is asking that very question: What went wrong with the rationalist movement? It began with an optimistic promise: if we learn to think better, we can save the world. Today, the mindset is captured by the title of Yudkowsky’s new book: “If Anyone Builds It, Everyone Dies: Why Superhuman AI Would Kill Us All.”

The recent articles – including “The Rise of Silicon Valley’s Techno-Religion” by the New York Times tech reporter Cade Metz, “Why Are There So Many Rationalist Cults?” by the rationalist Ozy Brennan, and “Rationalist Cults of Silicon Valley” by Ian Leslie – discuss the groups that spun out of the rationalist movement and, in several notorious cases, veered into extremism.

The renewed scrutiny of rationalists started earlier this year, following the grim news about the Zizians, a group widely described in coverage as a “murder cult.” They were frequently depicted as an atypical offshoot of Berkeley’s rationality movement. However, as we’ll see in this piece, they aren’t a one-off. The rationality movement has repeatedly fostered radical factions. “As I’ve said previously, I would call myself a rat,” said the rationalist Nathan Young, “but rationalism creates adjacent cults like nothing I know, often trying to harm rationalists.”

In the early 2010s, the rationality community merged with a twin sibling movement, Effective Altruism (EA). Together, they centered on the risk of human extinction from AI, and their members came to believe, and fear, that we are on the verge of an AI apocalypse. In a setting dominated by such extreme beliefs, a leader can emerge with the ultimate plan to “save humanity,” recruit followers, and create, even unintentionally, a “high-demand” or “high-control” group: in other words, a cult. With the Bay Area rationalists, it happened more than once or twice.

The Center for Applied Rationality (CFAR) president and co-founder, and MIRI board member, Anna Salamon, told NBC News in 2025: “We didn’t know at the time, but in hindsight we were creating conditions for a cult.” It’s hard to square that with over a decade of internal debates flagging those very conditions.

The “Rationality Trap” combines various sources and testimonies and explains how this movement has created conditions that allow cults to flourish. The focus is on the specific factors people have raised, and the steady stream of practices and ideas that ended so badly. The red flags and warnings were everywhere. It should not surprise us that it has ended in blood. The clues were scattered along the way like breadcrumbs for anyone who wanted to see them.

This is a story about the authors of the mythos, the designers of the methods, the stewards of the world-ending stakes, and the funders who scaled it all up.

They bear responsibility for these conditions, even when they later disavow the worst outcomes.

And it all started with a guy who described himself as humanity’s only hope.

The Mythos

“I’m the only one who can make the effort”

Eliezer Yudkowsky, August 2000, personal website sysopmind

In an autobiographical essay, written exactly twenty-five years ago, at the age of 21, Eliezer Yudkowsky detailed his “plans for the future” and his work for the organization he founded (initially named the Singularity Institute for Artificial Intelligence, SIAI, then the Singularity Institute, and eventually MIRI).

Yudkowsky’s manifesto from August 2000 states:

“It would be absurd to claim to represent the difference between a solvable and unsolvable problem in the long run, but one genius can easily spell the difference between cracking the problem of intelligence in five years and cracking it in twenty-five - or to put it another way, the difference between a seed AI created immediately after the invention of nanotechnology, and a seed AI created by the last dozen survivors of the human race huddled in a survival station, or some military installation, after Earth’s surface has been reduced to a churning mass of goo.

That’s why I matter, and that’s why I think my efforts could spell the difference between life and death for most of humanity, or even the difference between a Singularity and a lifeless, sterilized planet. I don’t mean to say, of course, that the entire causal load should be attributed to me […] Nonetheless, I think that I can save the world, not just because I’m the one who happens to be making the effort, but because I’m the only one who can make the effort.”

In reality, Yudkowsky’s organization did not create the desired “friendly AI.” “Nearly all of said research,” according to WIRED’s Evan Ratliff, “was highly theoretical philosophizing about AI, rather than building or changing it.”

In an update Yudkowsky sent out on behalf of his Singularity Institute in April 2003, through the SL4 [Shock Level 4] mailing list, he wrote: “I think I may have to split my attention one more time, hopefully the last, and write something to attract the people we need. My current thought is a book on the underlying theory and specific human practice of rationality.” Yudkowsky explained, “I don’t think we’ll find the people we need by posting a job opening. Movements often start around books; we don’t have our book yet.”

“This collection serves as a long-form introduction to formative ideas behind LessWrong, the Machine Intelligence Research Institute, the Center for Applied Rationality, and substantial parts of the effective altruist community.”

LessWrong, Rationality: A-Z (or "The Sequences")

Yudkowsky founded LessWrong as a community blog dedicated to improving “reasoning and decision-making,” and developing “the art of human rationality.” Many members of LessWrong share Yudkowsky's interests in transhumanism, AI existential risk, the Singularity, and cryonics. It has become a vibrant forum and the beating heart of the rationality community.

In “2009 SIAI Accomplishments,” Yudkowsky’s organization wrote:

“Less Wrong is important to the Singularity Institute's work towards a beneficial Singularity in providing an introduction to issues of cognitive biases and rationality relevant for careful thinking about optimal philanthropy and many of the problems that must be solved in advance of the creation of provably human-friendly powerful artificial intelligence. […] Less Wrong is also a key venue for SIAI recruitment. Many of the participants in SIAI's Visiting Fellows Program first discovered the organization through Less Wrong.”

The LessWrongers’ common vocabulary, their shared extensive jargon, comes from the much-revered “Sequences,” a long series of essays by Yudkowsky (also known as “Rationality: A-Z”). This collection of Yudkowsky’s blog posts from 2006 to 2009 comprises over a million words.

The LessWrong forum introduces it to readers as follows: “This collection serves as a long-form introduction to formative ideas behind LessWrong, the Machine Intelligence Research Institute, the Center for Applied Rationality, and substantial parts of the effective altruist community.” According to MIRI’s website, Yudkowsky wrote it “as part of his field-building efforts.”

His other canonical book is a Harry Potter fanfic, “Harry Potter and the Methods of Rationality” (also known as “HPMoR”), which contains approximately 660,000 words. The author Tara Isabella Burton, in Rational Magic, describes HPMoR as the “most effective recruiting text,” and explains: “Part of the appeal of rationality is the promise of self-overcoming, of becoming more than merely human. Harry, we learn, ‘wants to discover the laws of magic and become a god.’ Yet it is rationality, in the end, that gives Harry the godlike powers of understanding, and shaping, his world.”

The “new user’s guide to LessWrong” encourages reading both the Sequences and HPMoR. These books gained “required reading” status, prompted in part by the same cognitive dissonance that helps perpetuate exclusive clubs: “It was hard to read them all, but I did it. Therefore, they must be good.” AKA, “Effort Justification.”

HPMoR chapters link readers to LessWrong and CFAR. The flow became: Fanfiction → forum → training → recruitment.

The Method

Anna Salamon “led many people in ‘internal double cruxes around existential risk,’ which often caused fairly big reactions as people viscerally noticed, ‘we might all die.’”

LessWrong comment by CFAR’s president, Anna Salamon, October 2021

“Having attracted a growing cadre of young techies to the cause of grappling with AI’s existential threats, Yudkowsky and others began expanding the community into more personal realms,” describes the journalist Evan Ratliff in “When AI Doomerism Spirals into Violence.”

The discussions about rationality led to the formation of CFAR in 2012. CFAR organized costly multi-day retreats and summer camps to pass on methods of teaching “Rationalism” to others. The implicit rule was “human thinking is flawed and biased,” but the special plea was “...except for ours. We have a unique method of seeing things clearly.” It was an overestimation of their own competence.

CFAR’s homepage states the goal: “Developing clear thinking for the sake of humanity’s future.” According to its co-founder, Anna Salamon, who was a research fellow at Yudkowsky’s SIAI in 2008, “CFAR's mission is to improve the sanity/thinking skills of those who are most likely to actually usefully impact the world.” A New York Times Magazine reporter, who attended a CFAR workshop in 2016, heard from one of the participants: “Self-help is just the gateway. The real goal is: Save the world.”

When CFAR turned the canonical writings into “mind-tech” workshops, the special methods it offered did not just focus on an external goal (solving AI risk), but also offered to solve your internal problems: “Double Crux” for disagreements, and “Internal Double Crux” for inner conflicts. It gave inherent permission to intervene in the most intimate parts of a member’s life and psyche.

CFAR and MIRI had side-by-side office spaces, a lot of people in common, and both organizations were “explicitly concerned with navigating existential risk from unaligned artificial intelligence.” They shared the sense that the end of the world might not be far off. Several first-person accounts describe using CFAR’s “mind-tech” techniques to push people toward “AI risk” beliefs. In 2021, Anna Salamon herself said she “led many people in ‘internal double cruxes around existential risk,’ which often caused fairly big reactions as people viscerally noticed, ‘we might all die.’”

“Within the group, there was an unspoken sense of being the chosen people smart enough to see the truth and save the world, of being ‘cosmically significant’”

Qiaochu Yuan, a former rationalist, quoted in Bloomberg, March 2023

“Some insiders call this level of AI-apocalypse zealotry a secular religion. One former rationalist calls it a church for atheists,” says the Bloomberg article on the rationalists. “It offers a higher moral purpose people can devote their lives to, and a fire-and-brimstone higher power that’s big on rapture.” Unsurprisingly, “Within the group, there was an unspoken sense of being the chosen people smart enough to see the truth and save the world, of being ‘cosmically significant.’”

Diego Caleiro, a biological anthropologist who collaborated with CFAR, Oxford’s Future of Humanity Institute, and the Center for Effective Altruism, addresses this in his blog post, “AGI – When Did You Cry About The End?” He met Geoff Anders in 2012 while a visiting scholar in Yudkowsky’s Singularity Institute. He joined Leverage Research because Geoff “was there for the same reason I was, with the same intensity.” “I realized how rare I was. How few of us there were,” Caleiro writes. “You may think it is fun to be Neo, to be the One or one of the Chosen ones. I can assure you it is not […] The stakes of every single conversation were astronomical. The opportunity cost of every breath was measured in galaxies.”

The community norm of maximal openness/no topic off-limits widened the Overton window, including tolerating fringe ideologies. In 2014, Peter Wildeford, the co-founder of Rethink Priorities and the Institute for AI Policy and Strategy (IAPS), acknowledged that some things in the community “could be a turn-off to some people”:

“While the connection to utilitarianism is ok, things like cryonics, transhumanism, insect suffering, AGI, eugenics, whole brain emulation, suffering subroutines, the cost-effectiveness of having kids, polyamory, intelligence-enhancing drugs, the ethics of terraforming, bioterrorism, nanotechnology, synthetic biology, mindhacking, etc., might not appeal well.”

“More and more rationalists and fellow-travelers were yearning to address personal existential crises alongside global existential risks,” the article “Rational Magic” explains. “The realm of the ‘woo’ started to look less like a wrong turn and more like territory to be mined for new insights.” An Effective Altruist (currently working in AI alignment) addressed this dynamic in the “EA Corner” Discord channel:

“I think there’s a difference between ‘aesthetically weird,’ like circling and cuddle puddles and rationalist choir and even psychedelics, and ‘aesthetically woo’ like going around dressed up like a Sith Lord and trying to become ‘double good’ by sleeping with only one brain hemisphere, or ‘debugging’ people and casting/removing ‘demons’ from them. Mainstream rationalism had a bunch of aesthetically weird stuff, but less aesthetically woo stuff that fringier rationalist adjacent spaces.”

In 2023, Oliver Habryka published a LessWrong post trying to understand “How do you end up with groups of people who do take pretty crazy beliefs extremely seriously?” Habryka is the CEO at Lightcone Infrastructure/LessWrong, fund manager at the Long-Term Future Fund (LTFF), and director of Nick Bostrom’s organization, Macrostrategy Research Initiative. In this LessWrong post, Habryka discusses “what is going on when people in the extended EA/Rationality/X-Risk social network turn crazy in scary ways.” His central thesis was: “People want to fit in.” “The people who feel most insecure tend to be driven to the most extreme actions, which is borne out in a bunch of cult situations,” Habryka writes.

“There are also a ton of other factors that I haven’t talked about (willingness to do crazy mental experiments, contrarianism causing active distaste for certain forms of common sense, some people using a bunch of drugs, high price of Bay Area housing, messed up gender-ratio and some associated dynamics, and many more things).”

Even sympathetic observers still inside the movement, like the rationalist Ozy Brennan, now assert that “people who are drawn to the rationalist community by the Sequences often want to be in a cult.” The promise (“learn the art of thinking, become part of the small elite shaping the future”) over-selects people who want a transformative authority and a grand plan.

Then, it intensifies when the plan centers on human extinction from AI.

The Stakes

“‘AI is likely to kill us all’ is a kind of metaphorical flypaper for people predisposed to craziness spirals”

LessWrong comment by AI alignment researcher John Wentworth, June 2023

Now, layer on AI Doomerism. John Wentworth, an independent AI alignment researcher (partly funded by LTFF), wrote that his default model is that “some people are very predisposed to craziness spirals.” “‘AI is likely to kill us all’ is definitely a thing in response to which one can fall into a spiral-of-craziness, so we naturally end up ‘attracting’ a bunch of people who are behaviorally well-described as ‘looking for something to go crazy about.’” Wentworth added that “other people will respond to basically-the-same stimuli by just… choosing to not go crazy.” “On that model,” he concluded, “insofar as EAs and rationalists sometimes turn crazy, it’s mostly a selection effect. ‘AI is likely to kill us all’ is a kind of metaphorical flypaper for people predisposed to craziness spirals.”

Bloomberg’s extensive investigation also addressed this issue. “[Qiaochu] Yuan started hanging out with the rationalists in 2013 as a math Ph.D. candidate at the University of California at Berkeley,” the Bloomberg article recounts. “Once he started sincerely entertaining the idea that AI could wipe out humanity in 20 years, he dropped out of school, abandoned the idea of retirement planning, and drifted away from old friends who weren’t dedicating their every waking moment to averting global annihilation.” Yuan told the reporter, “You can really manipulate people into doing all sorts of crazy stuff if you can convince them that this is how you can help prevent the end of the world. Once you get into that frame, it really distorts your ability to care about anything else.”

“The extreme edge of rationalism and EA reveal a dynamic that runs through both of them – Apocalyptic predictions, asserted with a confidence buttressed by weaponized misuse of ‘Bayesian’ logic – is driving young people insane by convincing them that they must take extreme steps to halt an imagined doom,” wrote the journalist David Z. Morris.

“There’s this all-or-nothing thing, where AI will either bring utopia by solving all the problems, if it’s successfully controlled, or literally kill everybody,” CFAR’s Anna Salamon told the New York Times. “From my perspective, that’s already a chunk of the way toward doomsday cult dynamics.”

The sad part is that all the problematic dynamics were debated from the start. The public warnings came from within the community. The following red flags were mentioned (by a member named “JoshuaFox”) in a LessWrong post from 2012, “Cult impressions of LessWrong/Singularity Institute”:

“Eliezer addressed this in part with his ‘Death Spiral’ essay, but there are some features to LW/SI that are strongly correlated with cultishness, other than the ones that Eliezer mentioned, such as fanaticism and following the leader:

● Having a house where core members live together.

● Asking followers to completely adjust their thinking processes to include new essential concepts, terminologies, and so on, to the lowest level of understanding reality.

● Claiming that only if you carry out said mental adjustment can you really understand the most important parts of the organization’s philosophy.

● Asking for money for a charity, particularly one which does not quite have the conventional goals of a charity, and claiming that one should really be donating a much larger percentage of one’s income than most people donate to charity.

● Presenting an apocalyptic scenario including extreme bad and good possibilities, and claiming to be the best positioned to deal with it.”

Since then, the apocalyptic talk has intensified. Currently, AI Doomers debate how many months humanity has left on planet Earth before AI will wipe it out of existence. In extreme cases, people are “making extra trips to see people” because they are “worried it’ll be the last time.”

“The rationalist community is not very good at de-escalating, or even noticing brewing radical extremism,” pointed out Octavia (Zephyr Nouzen), a Zizians-adjacent rationalist. “Eliezer advocates bombing datacenters and shit like that,” they added, referring to Yudkowsky’s infamous 2023 TIME magazine op-ed. “The intensity is upstream of the schism.”

The leadership rhetoric sets the tone. In 2024, Yudkowsky told The Guardian that “our current remaining timeline looks more like five years than 50 years. Could be two years, could be 10,” and “We have a shred of a chance that humanity survives.”

MIRI president Nate Soares, who co-authored the new book on how AI “would kill us all,” told The Atlantic that he doesn’t set aside money for his 401(k). “I just don’t expect the world to be around.”

Effective Altruism’s Funding Scaled Everything Up

“In the rationalist world, CFAR provided the grand thinking, MIRI the technical know-how, and EA the funding to save humans from being eradicated by runaway machines”

Evan Ratliff, WIRED, February 2025

In the early 2010s, the Effective Altruism movement joined the scene and accelerated the “field-building.” Rationalists and effective altruists “founded the field of AI Safety, and incubated it from nothing.” The overlapping communities have been described in scholarly work as the AI Safety Epistemic Community.

“There was pretty early on a ton of overlap in people who found the effective altruism worldview compelling and people who were rationalists,” says Vox journalist Kelsey Piper on the Good Robot podcast (episode 3). “Probably because of a shared fondness for thought experiments with pretty big real-world implications, which you then proceed to take very seriously.”

“I don’t think it’s a cult, but it’s a religion. Most of the world’s recorded religions have developed ideas about how the world ends, what humanity needs to do to prepare for some kind of final judgment. In the Bay Area and on Oxford’s campus, effective altruists started to hear from rationalists they were in community with about what an apocalypse could look like. And of course, since a lot of rationalists thought that AI was the highest-stakes issue of our time, they started trying to pitch people in the effective altruism movement. Like, look, getting AI right is a major priority for charitable giving.” —Kelsey Piper.

The movements were also similar in casting their members as world-saving protagonists. In the four-part series of Behind the Bastards on the Zizians, Robert Evans observes:

“Rationalists and effective altruists are not ever thinking like, hey, how do we as a species fix these major problems? They’re thinking, how do I make myself better, optimize myself to be incredible, and how do I fix the major problems of the world alongside my mentally superpowered friends? These are very individual-focused philosophies and attitudes. And so, they do lend themselves to people who think that, like, we are heroes who are uniquely empowered to save the world.”

Eliezer “is not a cult leader, but he’s playing with some of these cult dynamics, and he plays with them in a way that I think is very reckless and ultimately leads to some serious issues.” … “Yudkowsky and his followers see themselves as something unique and special, and there’s often a messianic aureole to this. We are literally the ones who can save the world from evil AI. Nobody else is thinking about this or is even capable of thinking about this because they’re too illogical.”

“In the rationalist world, CFAR provided the grand thinking, MIRI the technical know-how, and EA the funding to save humans from being eradicated by runaway machines,” summarizes WIRED’s Evan Ratliff in “The Delirious, Violent, Impossible True Story of the Zizians.” For over two years, Ratliff was tracking each twist and turn of the Zizians’ alleged descent into mayhem and death.

“The MIRI, CFAR, EA triumvirate promised not just that you could be the hero of your own story but that your heroism could be deployed in the service of saving humanity itself from certain destruction. Is it so surprising that this promise attracted people who were not prepared to be bit players in group housing dramas and abstract technical papers? That they might come to believe, perhaps in the throes of their own mental health struggles, that saving the world required a different kind of action—by them, specifically, and no one else?”

EA added centralized funding. A small set of grantmakers (especially Open Philanthropy) created strong incentives to align with funder worldviews to get money. It turned a niche subculture into a career track. This structure meant power for the funders along with thin governance/guardrails.

The FTX collapse, alongside its “Future Fund,” then showed the whiplash risk of such patronage.

The EA movement funded Oxford/Bay Area hubs, retreat centers (some were actual castles and mansions), fellowships, grants, think tanks, residencies, and group houses. It was covered by Bloomberg as “a culture of free-flowing funding with minimal accountability focused on selective universities.” It helped build a dense social infrastructure.

EA also helped mainstream existential risk framing in AI policy, concentrating attention and money on AI apocalypse scenarios.

Worst Outcomes

“The rationalist community has hosted perhaps half a dozen small groups with very strange beliefs (including two separate groups that wound up interacting with demons).”

Ozy Brennan, Asterisk magazine, August 2025

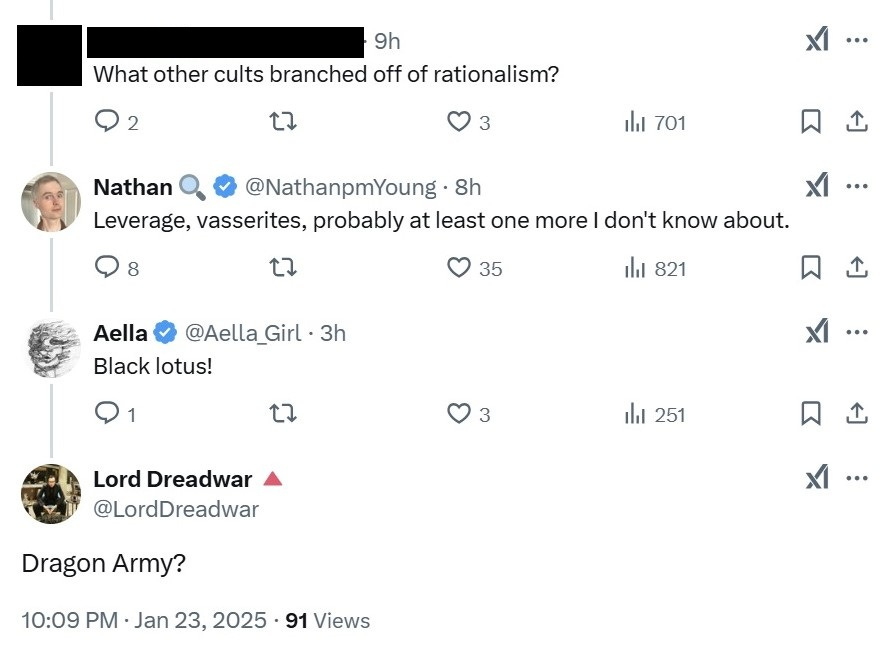

After the Zizians entered the public discourse, following a new murder spree (the killing of a Border Patrol agent and the fatal stabbing of an elderly man who had miraculously survived an earlier attack with a katana), one Twitter user asked: “What other cults branched off rationalism?” The answers mentioned other groups, none of them as deadly as the Zizians, but all linked to various harms.

The Zizians are famously the rationalists’ trans, vegan murder cult. Their leading figure was Jack “Ziz” Lasota. They were the most extreme (and deadly) manifestation of the rationalists’ cultish features to date. Some of their rituals included dangerous mind hacks and sleep deprivation. The members first bonded over Yudkowsky’s fanfic, Harry Potter and the Methods of Rationality. They “experienced the book as a real-life Hogwarts letter, an invitation to join a community of intellectuals trying to save the world.” They discussed Yudkowsky's ideas extensively in their own writings. They participated in CFAR’s workshops and were convinced that the most important thing to work on is AI safety/AI alignment. Over time, they came to believe that the mainstream rationality and effective altruism movements were not pushing far enough (regarding AI Doomerism, veganism, and “mental tech” experiments).

Their alleged body count is eight: six homicides and two suicides. Arrests have been made and charges filed, and all incidents (murder, attempted murder, weapon offenses, and trespassing) were under investigation at the time of publication. Their court cases are spread across several states: California, Pennsylvania, Vermont, and Maryland. For more disturbing details, I recommend the articles in WIRED, Rolling Stone, and New York magazine. The events were so staggering that they read like a true crime novel.

Leverage Research, which also includes Paradigm Academy and Reserve, was located in Oakland and recruited members from the “rationality” and EA scenes. It was led by Geoff Anders, who described their purpose and goals at the onset: “One of our projects is existential risk reduction. We have conducted a study of the efficacy of methods for persuading people to take the risks of artificial general intelligence (AGI) seriously.” The second project was “intelligence amplification.”

In 2021, former member Zoe Curzi published a detailed testimony about her traumatizing experience there. Since leaving, she reports having experienced almost every symptom on “the list of post-cult after-effects.” Beyond the alleged abusive psychological experiments, the group’s Pepe-Silvia-looking plan included the goal to “successfully seek enough power to be able to stably guide the world.” According to Curzi, people genuinely thought, “We were going to take over the US government.”

Geoff Anders apologized and took responsibility, and later, Leverage Research published a “Public Report on Inquiry Findings,” which did not dispute Curzi’s account. It said approximately three to five members “may have had very negative experiences overall.” Following this turmoil, the organization pivoted from “Leverage 1.0” to “Leverage 2.0.” You can read more about it in AI Panic.

Inspired by Curzi’s demons-and-mental-invasion post, Jessica Taylor published her own recollections of traumatic moments inside MIRI and CFAR, and inside a subgroup dubbed the Vassarites (after Michael Vassar). Like Curzi, Taylor shared that she had experienced most of the trauma symptoms on the “list of cult after-effects.”

Dragon Army was Duncan A. Sabien’s group house experiment that he ran in Berkeley for six months. He was the curriculum director and COO at CFAR.

Black Lotus was a rationalists’ Burning Man camp led by alleged abuser Brent Dill. The public allegations against him are detailed in this blog post and three Medium posts. CFAR’s first reaction protected him: “Brent also embodies a rare kind of agency and sense of heroic responsibility.” The second reaction admitted that “CFAR messed up badly here.” Eventually, he was banned from the community.

Allegations of sexual harassment and abuse in the EA/rationality scenes were also the subject of investigative reporting. In 2023, TIME magazine (which interviewed 30 people) and Bloomberg (which interviewed eight) depicted a dynamic that “favored accused men over harassed women.” “Under the guise of intellectuality, you can cover up a lot of injustice,” an interviewee pointed out. “The movement’s high-minded goals can create a moral shield, allowing members to present themselves as altruists committed to saving humanity regardless of how they treat the people around them.” “Women who reported sexual abuse, either to the police or community mediators, say they were branded as trouble and ostracized while the men were protected.”

This paints a dissonant picture. The “rationalists” speak of safeguarding humanity while not keeping their own members safe.

“As Auric Goldfinger might say: ‘Mr. Bond, they have a saying in Eliezer’s hometown. Once is happenstance. Twice is coincidence. The third time, it’s enemy action.’”

Jon Evans, “Gradient Ascendant,” Extropia’s Children, Chapter 2, October 2022

“These surreal tragedies are both a mesmerizing story and irresponsible to ignore,” the author and startup founder Jon Evans concluded, after investigating this “demon-haunted world.”

Within the community, these groups were not ignored but heavily debated, and the fringe groups were disavowed. People argued openly. The insiders were the sharpest critics of the worst dynamics. Some left and published elaborate accounts. Others tried, from within, to build brakes.

However, this pushback came only after recruitment and social integration had already occurred, which made backtracking extremely difficult. By that point, the members of the subgroups were already high on their own supply. These “rationalists” felt like heroes, “like some of the most important people who ever lived.” As if the fate of the “lightcone,” the entire future of the observable universe, literally depended on the success of their tiny group’s work and their “world-solving plan.”

One consistent pattern among these groups is the practice of “mental tech” for “debugging irrationality.” They “spiraled into self-perpetuating cycles of abuse.” According to the Asterisk magazine article, “In those research groups, everyone was a victim and everyone was a perpetrator. The trainer who broke you down in a marathon six-hour debugging session was unable to sleep because of the panic attacks caused by her own.”

In groups like Leverage Research or the Zizians, the group leader was perceived as a special genius, and their theories were the operating principles of the groups.

“It’s so perversely fascinating to see these people who pride themselves on their intelligence and their analytical ability specifically to put themselves into these positions of basically subservience and loyalty to what are clearly very flawed individuals,” noted David Z. Morris on The Farm podcast. His related book about Sam Bankman-Fried and the fraud at FTX, “Stealing the Future,” will be out in November.

Yudkowsky called the story of the Zizians “sad.” He told New York Times journalist Chris Beam, “A lot of the early rationalists thought it was important to tolerate weird people, a lot of weird people encountered that tolerance and decided they’d found their new home. And some of those weird people turned out to be genuinely crazy and in a contagious way among the susceptible.”

In a LessWrong discussion on the Zizians’ murder spree, “Thread for sense-making on recent murders and how to sanely respond,” one LessWronger wondered if the Zizians are the product of seeing the world too starkly through rationalist eyes. Another LessWronger suggested: “Perhaps there should be some ‘pre-rationality’ lessons. Something stabilizing you need to learn first, so that learning about rationality does not make you crazy.” A noteworthy suggestion, as the rationalists’ founding aspiration was to “raise the sanity waterline.”

SFGATE compared the Zizians to the most famous cults: Jones’ Peoples Temple, Children of God, Heaven’s Gate, NXIVM, Scientology, and Charles Manson. The comparison rested on characteristics commonly associated with cults: mind-altering practices, dictating how members should live, shame and guilt, elitism, preaching that the leader is on a special mission to save humanity, isolation (including cultivating an “us versus them” mentality and socializing only within the group), loaded language (including inventing extensive insider jargon), and radical totalizing beliefs (like “End of the World” prophecies).

The “Rationality Trap” exhibits nearly all of these characteristics.

We can go a step further into the characteristics of a dangerous cult:

(1). Narcissistic personalities: Dangerous cult leaders usually hold grandiose notions of their place in the world.

(2). Claims of special powers: If a leader claims he’s smarter, holier, and more pure than everyone else, think twice about signing up.

(3). Ability to read others: Cult leaders “have the ability to size you up, and realize your weaknesses and get to your buttons.”

(4). Confession: Dangerous cults are notorious for making members confess to past sins, often publicly, then using those confessions against them.

(5). Isolation: Separating group members from family and friends forces them to rely on fellow cultists for all emotional needs. This is why many cults are based around communal living.

(6). Language Control: To enforce isolation, many groups replace common words with special jargon, or create new words to describe complicated abstractions. This makes conversations with outsiders tedious.

The recent Asterisk magazine piece, “Why are there so many rationalist cults?” discusses several contributing factors: (1). Long sessions of self-improvement, inward-focused psychological “work.” (2). Apocalyptic, time-urgent mission and consequentialist justifications override ordinary ethics and limits. (3). Social isolation. Bay Area costs increase dependency. Life outside the group shrinks as housing, colleagues, friends, and dating are all inside the scene. (4). Selection effects & vulnerability: Attracting vulnerable, young, neurodivergent, and/or trans people seeking belonging and a transformative ideology.

For individuals concerned about joining a dysfunctional group, Brennan recommends: “Maintain multiple social groups. Ideally, have several friends who aren’t rationalists. Keep your work, housing, and therapy separate […] The longer and more abstract a chain of reasoning is, the less you should believe it. This is particularly true if the chain of reasoning concludes: a. that it’s okay to do something that hurts people. b. That something is of such overwhelming importance that nothing else matters.”

Among the suggestions for the general rationalist community are: “Give people realistic expectations about whether they will ever be able to get a job in AI safety. Don’t act like people are uncool or their lives are pointless if they can’t work in AI safety. Consider talking about ‘ethical injunctions:’ things you shouldn’t do even if you have a really good argument that you should do them. (Like murder.)”

The journalist Max Read has different advice: “The ability to dismiss an argument with a ‘that sounds nuts,’ without needing recourse to a point-by-point rebuttal, is anathema to the rationalist project. But it’s a pretty important skill to have if you want to avoid joining cults.”

The Four Elements of the “Rationality Trap”

The accumulation of the quotes above leads to one clear realization: Yudkowsky, MIRI, CFAR, EA, and related groups created the conditions for cult-adjacent spin-offs inspired by the core of their beliefs.

● The rationalists’ canonical writings supplied the mythos: Combining heroic identity with “take ideas seriously” and the utilitarian “shut up and multiply.” It led readers to prioritize minuscule-probability existential risk scenarios.

● CFAR built the method & milieu: Turning ideas into intense “mind-tech” training in insular settings that funneled participants toward the “AI existential risk” cause.

● MIRI set the stakes & tone: Apocalyptic consequentialism, pushing the community to adopt AI Doomerism as the baseline, and perceived urgency as the lever. The world-ending stakes accelerated the “ends-justify-the-means” reasoning.

● EA funding built the social infrastructure and grew the ecosystem faster than guardrails could keep up.

Combining these elements was a recipe for disaster. Things were bound to go wrong (not “less wrong,” just “wrong”).

Of course, most participants never joined any spin-offs. Many of the rationalists and EAs you meet are bright and sincere about wanting to improve themselves and the world. The trouble is that you can be earnest and still build a greenhouse filled with poisonous plants. The real question shouldn’t be “How did those bad apples get in?” but “Why does our movement keep growing them in the first place?”

Epilogue

A new recruitment text is going to be published on September 16, 2025.

Steven Levy, WIRED’s editor-at-large, has described it as the “Doom Bible.”2

How long before it creates the next radical group?

Endnote

CFAR, for example, was required by FTX debtors to return the approximately $5 million it received before the collapse of November 2022. $3,405,000 was transferred from FTX Foundation to CFAR between March and September 2022, and an additional $1,500,000 was transferred on October 3, 2022, in ten separate transactions. (See case 24-50066-JTD). CFAR refused and filed a “Limited Objection and Reservation of Rights” in July 2024. In September 2024, CFAR withdrew its objection, probably settling the matter. (See FTX bankruptcy case 22-11068-JTD, October 2024).

November 13, 2025, update: Why the “Doom Bible” Left Many Reviewers Unconvinced.

