Why The “Doom Bible” Left Many Reviewers Unconvinced

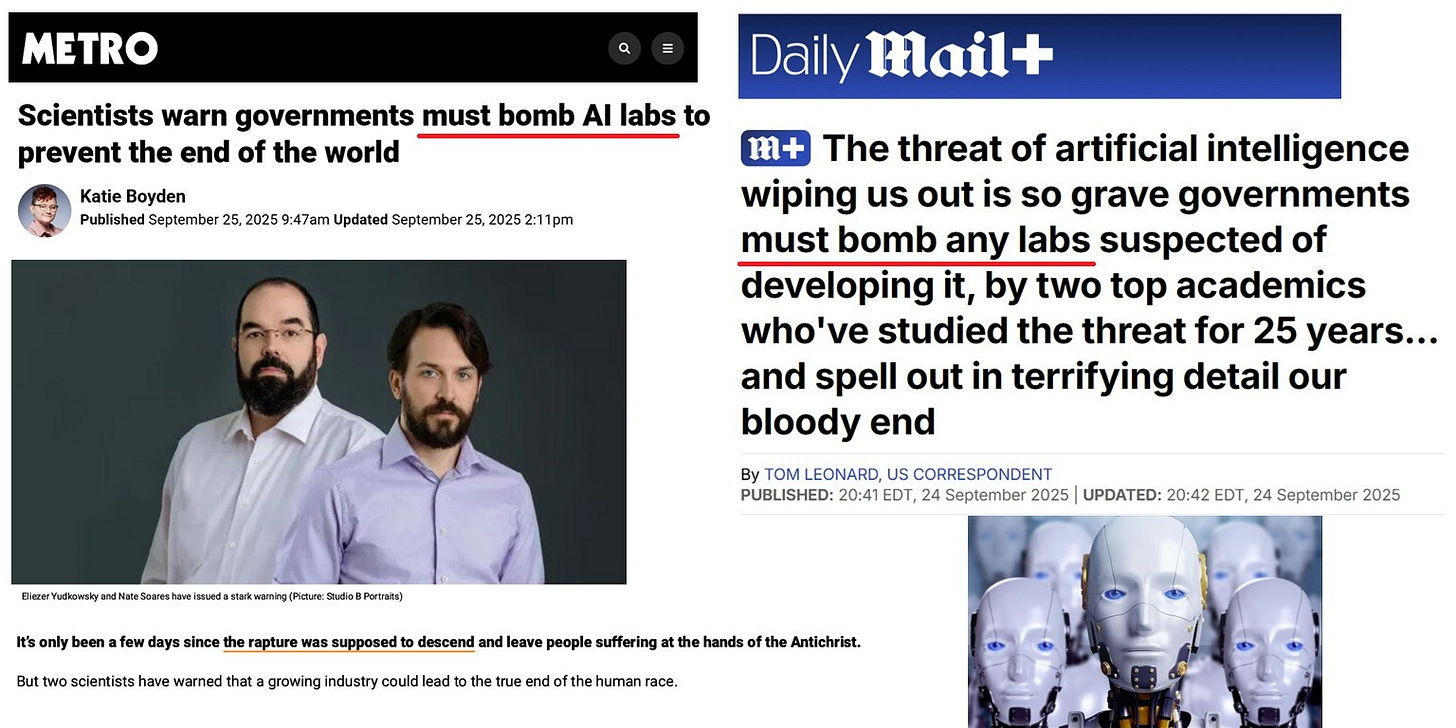

In the past few weeks, media outlets have been flooded with book reviews of “If Anyone Builds It, Everyone Dies. Why Superhuman AI Would Kill Us All.” The book launch in September 2025 was accompanied by billboards and subway ads with the slogan “We Wish We Were Exaggerating.”

The New York Times profiled the book’s co-authors, Eliezer Yudkowsky and Nate Soares, of Berkeley’s Machine Intelligence Research Institute (MIRI). The book’s stated goal is “to have the world turn back from ‘superintelligence’ AI,” so that “we get to not die in the immediate future.” During the media tour that followed, they relentlessly raised the alarm about humanity’s impending extinction.

“We are currently living in the last period of history where we are the dominant species. Humans are lucky to have Soares and Yudkowsky in our corner,” said the musician Grimes. “A landmark book everyone should get and read,” Steve Bannon of The War Room recommended. Actor Stephen Fry said IABIED is “The most important book I’ve read for years.” Tim Urban, co-founder of Wait But Why, wrote that it “may prove to be the most important book of our time.” Max Tegmark, co-founder of the Future of Life Institute, called it “The most important book of the decade.”

Criticism of the Book—Summarized

While praised by some for its uncompromising presentation of the AI Doom scenario, the book has drawn significant criticism, focusing on the overconfident, binary worldview; resistance to empiricism; dramatic sci-fi writing; the disconnect between the catastrophic predictions and the observable reality of AI development; and the policy proposals that are detached from reality.

To explore these claims, the quotes below are compiled from 16 outlets: Asterisk magazine, Astral Codex Ten, Bloomberg, LessWrong, New Scientist, New York Times, Semafor, The Atlantic, The New Statesman, The Observer, The Spectator, Transformer, Understanding AI, Vox, Washington Post, and WIRED.¹

Taken together, these quotes capture the most prevalent criticisms found in reviews of IABIED.

Flawed Binary Viewpoint

Several critics argue that the book’s case relies on a Before/After dichotomy that oversimplifies the process of technological development. The book posits a rigid distinction between the current period (“Before”), when AI failures are observable and survivable, and a “superintelligence” era (“After”), in which a single, first mistake leads directly to human extinction. According to critics, reality is far more continuous. The claim that there is a single, explosive “game over” threshold is less convincing, as it doesn’t align with humanity’s track record of managing risks incrementally.

The authors also equate capability with control, treating “superintelligence” as some magic that can bypass all social, physical, and logistical constraints.

Understanding AI says the book’s doom case is “not very convincing,” noting it doesn’t bridge the gap from today’s models to inevitable, uncontainable artificial “superintelligence.” It finds the book entertaining and energetic but unpersuasive about the inevitability of doom. “They wildly overestimate transformation and underestimate how easy it is to maintain control.”

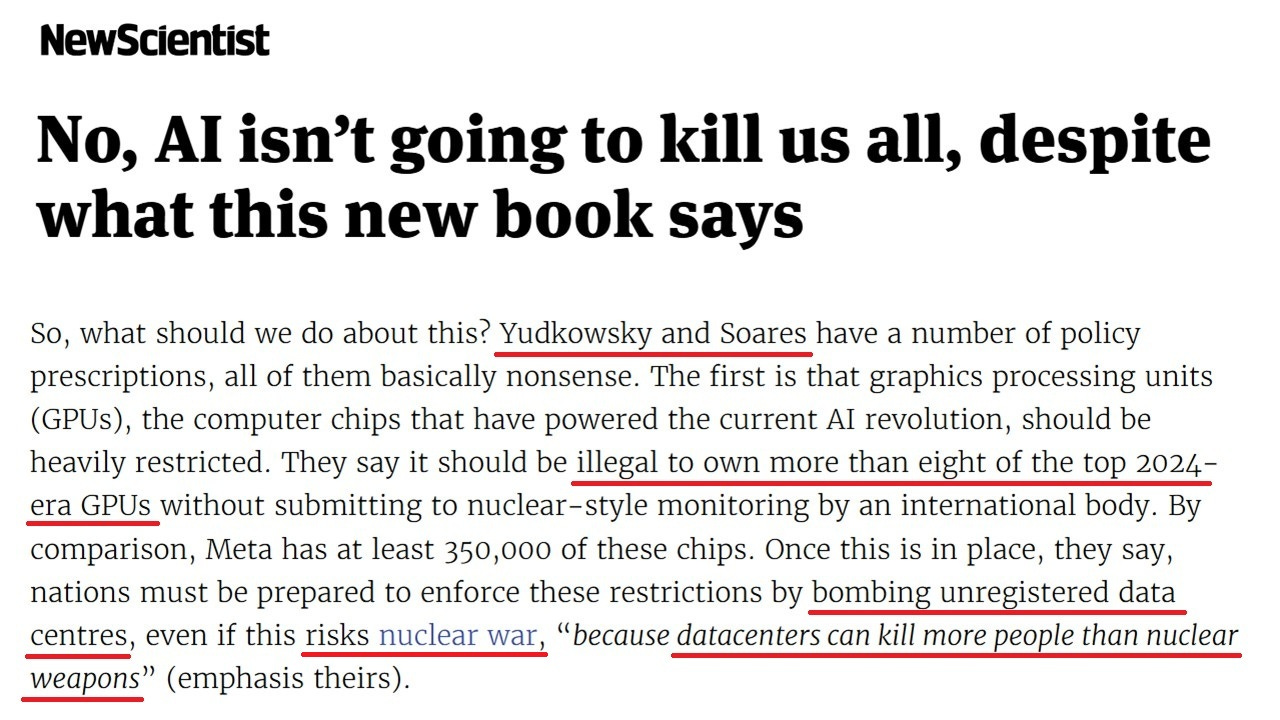

The New Scientist headline reads, “No, AI isn’t going to kill us all, despite what this new book says.” The review calls the argument “superficially appealing but fatally flawed.” In the reviewer’s telling, the argument leaps over crucial technical steps: it doesn’t show why current techniques must lead to uncontrollable “superintelligence” or why alignment is impossible in principle.

“Before we even realize what’s happening, humanity’s fate will be sealed and the AI will devour Earth’s resources to power itself, snuffing out all organic life in the process,” a Semafor review says, summarizing the book’s scenario. “With such a dire and absolute conclusion, the authors leave no room for nuance or compromise.”

They did leave room, though, for a lot of parables.

Speculations Mistaken for Inevitability

Several reviewers say the core “Everyone Dies” claim relies on stacked hypotheticals and parables rather than empirical evidence or testable predictions. The overreliance on fables and analogies does not prove the thesis. This emphasis on rhetoric over rigor draws frequent criticism.

According to The Atlantic review, the sweeping claims aren’t backed by verifiable science. It calls the book “tendentious and rambling […] not an evidence-based scientific case.”

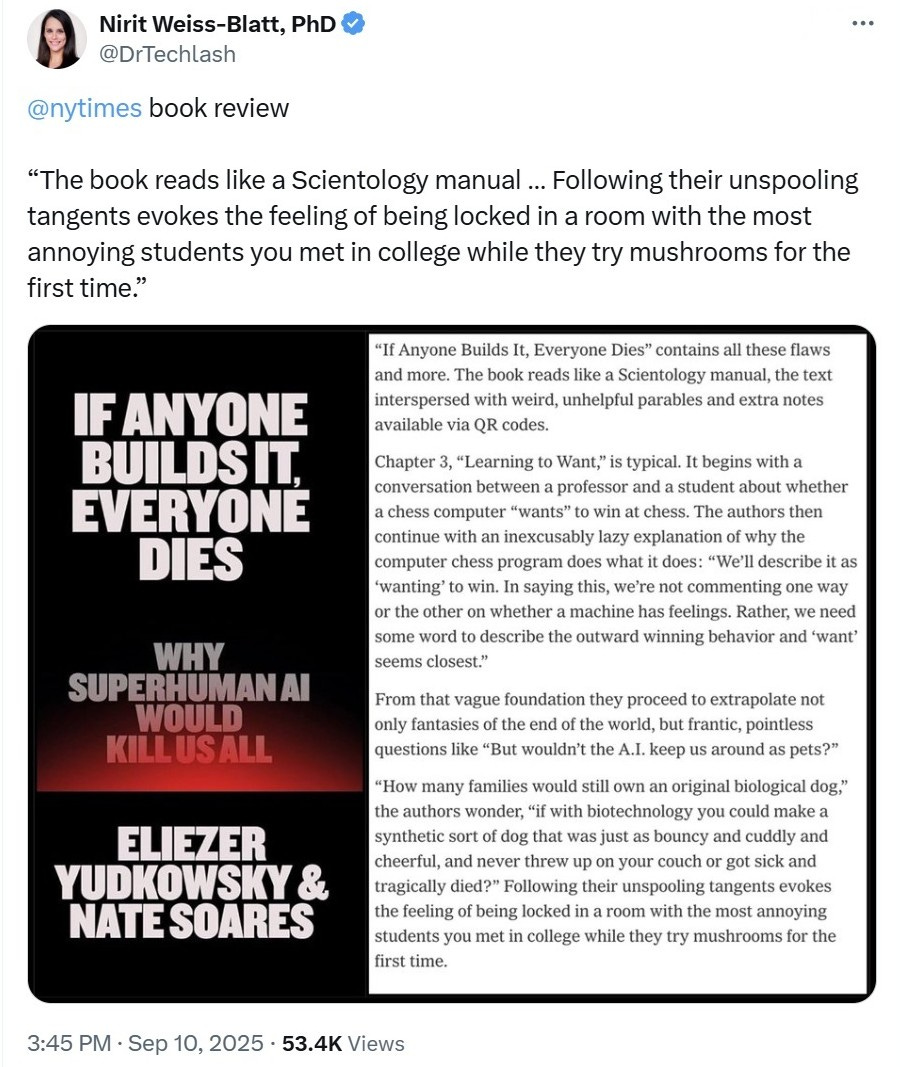

The New York Times complains about the book’s “weird, unhelpful parables” and likens the book to “a Scientology manual.” The critique is quite descriptive: “Following their unspooling tangents evokes the feeling of being locked in a room with the most annoying students you met in college while they try mushrooms for the first time.”

Transformer’s review calls the book a “chore to read.” “They assert that by default a ‘superintelligence’ would have goals vastly different from our own, but they do not satisfactorily explain why those goals would necessarily result in our extermination.”

Astral Codex Ten’s review is more positive, though still mixed, describing IABIED as “a compelling introduction to the world’s most important topic.” But it also criticizes the book’s scenario design, as the fast takeover story reads like sci-fi with under-justified twists: “It doesn’t just sound like sci-fi; it sounds like unnecessarily dramatic sci-fi.”

Asterisk magazine finds it less coherent than the authors’ earlier writings and ill-suited to persuading newcomers. “The book is full of examples that don’t quite make sense and premises that aren’t fully explained.” It notes that the book rarely grapples with empirical evidence from modern systems.

On Yudkowsky’s LessWrong forum, a book review observes, “Simply stating that something is possible is not enough to make it likely. And their arguments for why these things are extremely likely are weak.”

Traditional media outlets have taken issue with IABIED’s “nonfiction” categorization.

The Observer describes the book as a “science-fiction novel” and states that “fiction might be the best way to think of this book.”

The Washington Post calls it “less a manual than a polemic. Its instructions are vague, its arguments belabored, and its absurdist fables too plentiful.”

The New Statesman says, “If Anyone Builds It is science fiction as much as it is polemic […] The plan with If Anyone Builds It seems to be to sane-wash him [Yudkowsky] for the airport books crowd, sanding off his wild opinions.”

Extreme and Impractical “Solutions”

WIRED says the Doom Bible’s proposed policies—a global halt to advanced AI development, including international monitoring of GPU clusters, bombing data centers, and a ban on publishing research—are impractical and extreme. They are politically and ethically radioactive, weakening the book’s practical relevance. “The solutions they propose… seem even more far-fetched than the idea that software will murder us all.”

Bloomberg characterizes the book as a “new gospel of AI doom” rather than a governing blueprint. “The apocalyptic tone of the book is intentional. It aims to terrify and jolt the public into action,” the review says. “But in calibrating their arguments primarily for policymakers […] Yudkowsky and Soares have appealed to the wrong audience at the wrong time.”

The Spectator’s review argues, “If Anyone Builds It, Everyone Dies blends third-rate sci-fi, low-grade tech analysis, and the worst geopolitical assessment anyone is likely to read this year.”

Vox frames it as a worldview rather than an argued case. “The problem with a totalizing worldview is that it gets you to be so scared of X that there’s no limit to the sacrifices you’re willing to make to prevent X. But some sacrifices shouldn’t be made unless we have solid evidence for thinking the probability of X is very high.”

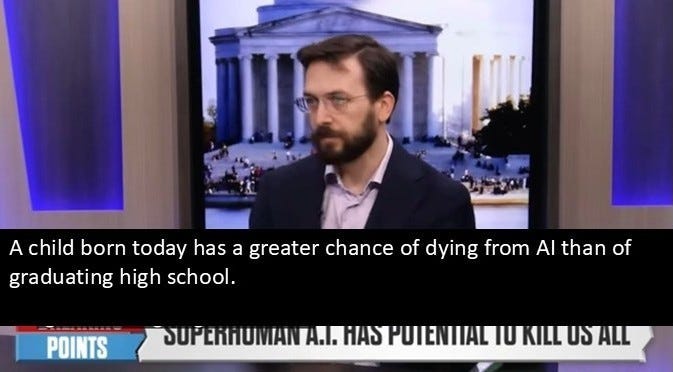

For example, when asked about the risk of concentrating political power, Nate Soares dismissed the concern. He replied, “That really seems like the sort of objection you bring up if you don’t think everyone is about to die.”

Context

In 2024, the Machine Intelligence Research Institute published a communication strategy aimed at convincing the world to shut down AI development. “We don’t want to shift the Overton window, we want to shatter it,” the MIRI team wrote. “The main audience we want to reach is policymakers.” They need to “get it” and understand that AI “will kill everyone, including their children,” because it’s the “central truth.”

The book “If Anyone Builds It, Everyone Dies” is MIRI’s major effort towards this goal. But “shattering the Overton window” doesn’t mean your case is persuasive; it means it is debated. And, now, it’s time to conclude: many reviewers found the book unconvincing.

My Thoughts

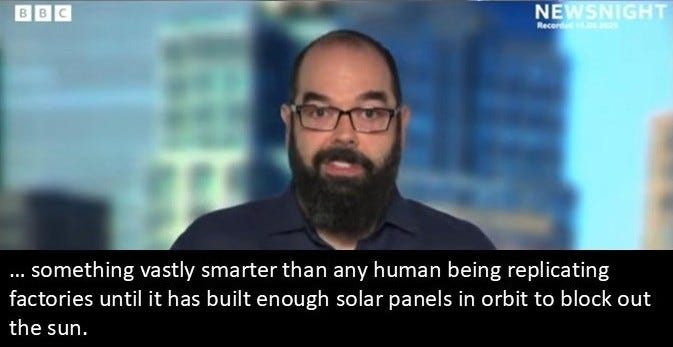

The main criticisms are summarized above, and reading the book clarified them. As I read the lengthy discussion in which alien birds debate the optimal number of stones in their nests, I realized there is no way this storyline appeals to a general audience. Similarly, when I heard Yudkowsky’s warning that “superintelligence” AI will “build enough solar panels in orbit to block out the sun,” I had the same realization: there is little chance that “Normies” will be persuaded by such scenarios.

They read like claims that “insiders” (in the Rationalist and Effective Altruism communities)² might appreciate, but newcomers less so. Thus, it seems to me that the book is better suited to, and tailored for, the “true believers” in the authors’ existential risk ecosystem.

The book’s binary view—either we halt frontier AI everywhere or we all die—omits the domain where most safety is built: the middle, the slow, evidence-based governance that actually reduces harm. Complex and risky systems get safer through layered, iterative processes across design, deployment, observation, and corrective actions. We stumble, we learn, we retrofit guardrails. Reviewers say the book declines to engage with this real-life continuity. And when everything short of a global stop is framed as “pathetic,” it repels potential collaborators. They don’t reject safety; they reject this unjustified, totalizing worldview.

Conclusion

If you’re a policymaker or journalist, don’t mistake “If Anyone Builds It, Everyone Dies” for a scientific case or an actionable plan. Consider it a window into a specific subculture’s priors. And keep your focus on the practical middle layer where safety actually takes place.

Endnotes

You can read more about Yudkowsky’s long history with AI Doomerism and the “Rationality” and Effective Altruism movements in “The Rationality Trap.”

Thank you for your well-researched, well-documented analysis.

As someone who took the MIRI-adjacent BlueDot AI Safety Fundamentals course in 2024 (https://bluedot.org/) and participated in the Existential Risks Persuasion Tournament in 2022 (https://forecastingresearch.org/xpt), I have some familiarity with those folks. Something that stands out in my mind is their dearth of publications in refereed journals. In neither of the X-risk activities in which I participated did the extremists present refereed papers to support their contentions. Clearly, the AI extremists are averse to peer reviewers. That said, for a view of what the peer-reviewed moderates are saying, see https://forecastingresearch.org/publications.

For the average reader, the evident lack of self-awareness and the failure to accurately anticipate how the tone and style of their message will be received by the target audience aren’t exactly reassuring about their grasp on reality.